Build Earthquake Catalog

In this final notebook, we read the matched-filter database, remove the multiple detections and write a clean earthquake catalog in a csv file.

[1]:

import os

n_CPUs = 12

os.environ["OMP_NUM_THREADS"] = str(n_CPUs)

import BPMF

import glob

import h5py as h5

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import sys

import seisbench.models as sbm

from BPMF.data_reader_examples import data_reader_mseed

from tqdm import tqdm

from time import time as give_time

pd.set_option('display.width', 1000)

[2]:

# PROGRAM PARAMETERS

NETWORK_FILENAME = "network.csv"

TEMPLATE_DB = "template_db"

MATCHED_FILTER_DB = "matched_filter_db"

OUTPUT_CSV_FILENAME = "final_catalog.csv"

OUTPUT_DB_FILENAME = "final_catalog.h5"

BACKPROJ_DB_FILENAME = "reloc_bp.h5"

CHECK_SUMMARY_FILE = False

PATH_MF = os.path.join(BPMF.cfg.OUTPUT_PATH, MATCHED_FILTER_DB)

DATA_FOLDER = "preprocessed_2_12"

[3]:

# read network metadata

net = BPMF.dataset.Network(NETWORK_FILENAME)

net.read()

Read the detected events’ metadata for each template

[4]:

# template filenames

template_filenames = glob.glob(os.path.join(BPMF.cfg.OUTPUT_PATH, TEMPLATE_DB, "template*"))

template_filenames.sort()

# initialize the template group

template_group = BPMF.dataset.TemplateGroup.read_from_files(template_filenames, net)

template_group.read_catalog(

extra_attributes=["cc"],

progress=True,

db_path=PATH_MF,

check_summary_file=CHECK_SUMMARY_FILE,

)

# sometimes, tid is not cast to the correct dtype

template_group.catalog.catalog["tid"] = template_group.catalog.catalog["tid"].astype("int32")

Reading catalog: 0%| | 0/11 [00:00<?, ?it/s]/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

Reading catalog: 55%|█████▍ | 6/11 [00:00<00:00, 49.46it/s]/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

/home/ebeauce/miniconda3/envs/hy8_py312/lib/python3.12/site-packages/pandas/core/series.py:1031: UserWarning: no explicit representation of timezones available for np.datetime64

arr = np.asarray(values, dtype=dtype)

Reading catalog: 100%|██████████| 11/11 [00:00<00:00, 65.25it/s]

The BPMF.dataset.TemplateGroup now has a catalog attribute, which is a BPMF.dataset.Catalog instance.

Remove the multiple detections

Remove multiple detections with the TemplateGroup.remove_multiples method.

[5]:

# DISTANCE_CRITERION_KM: Distance, in km, between two detected events (within uncertainties) below which

# detected events are investigated for equality.

DISTANCE_CRITERION_KM = 15.0

# DT_CRITERION_SEC: Inter-event time, in seconds, between two detected events below which

# detected events are investigated for redundancy.

DT_CRITERION_SEC = 4.0

# SIMILARITY_CRITERION: Inter-template correlation coefficient below which detected events are investigated for equality.

SIMILARITY_CRITERION = 0.10

# N_CLOSEST_STATIONS: When computing the inter-template correlation coefficient, use the N_CLOSEST_STATIONS closest stations

# of a given pair of templates. This parameter is relevant for studies with large seismic networks.

N_CLOSEST_STATIONS = 10

[6]:

# we need to read the waveforms first

template_group.read_waveforms()

template_group.normalize(method="rms")

[7]:

template_group.remove_multiples(

n_closest_stations=N_CLOSEST_STATIONS,

dt_criterion=DT_CRITERION_SEC,

distance_criterion=DISTANCE_CRITERION_KM,

similarity_criterion=SIMILARITY_CRITERION,

progress=True,

)

/home/ebeauce/software/Seismic_BPMF/BPMF/dataset.py:4598: RuntimeWarning: invalid value encountered in divide

unit_direction /= np.sqrt(np.sum(unit_direction**2, axis=1))[:, np.newaxis]

False ../BPMF_outputs/template_db/intertp_cc.h5 False

Computing the similarity matrix...

Computing the inter-template directional errors...

Searching for events detected by multiple templates

All events occurring within 4.0 sec, with uncertainty ellipsoids closer than 15.0 km will and inter-template CC larger than 0.10 be considered the same

Removing multiples: 100%|██████████| 152/152 [00:00<00:00, 2722.39it/s]

0.06s to flag the multiples

The catalog now has three new columns: origin_time_sec (a timestamp of origin_time in seconds), interevent_time_sec (template-wise computation), unique_event.

[8]:

template_group.catalog.catalog.head(10)

[8]:

| longitude | latitude | depth | origin_time | cc | tid | return_time | origin_time_sec | interevent_time_sec | unique_event | |

|---|---|---|---|---|---|---|---|---|---|---|

| event_id | ||||||||||

| 8.0 | 30.345996 | 40.717188 | 9.15 | 2012-07-26 00:58:11.000 | 0.342058 | 8 | NaN | 1.343264e+09 | 0.00 | True |

| 0.0 | 30.340137 | 40.717813 | 9.15 | 2012-07-26 00:58:11.040 | 0.320761 | 0 | NaN | 1.343264e+09 | 0.04 | False |

| 4.0 | 30.322559 | 40.720937 | 8.85 | 2012-07-26 00:58:11.160 | 0.229280 | 4 | NaN | 1.343264e+09 | 0.12 | False |

| 1.0 | 30.283008 | 40.669375 | 10.70 | 2012-07-26 00:58:11.560 | 0.321162 | 1 | NaN | 1.343264e+09 | 0.40 | False |

| 0.1 | 30.340137 | 40.717813 | 9.15 | 2012-07-26 00:58:16.560 | 0.261512 | 0 | 5.52 | 1.343264e+09 | 5.00 | False |

| 8.1 | 30.345996 | 40.717188 | 9.15 | 2012-07-26 00:58:16.560 | 0.267058 | 8 | 5.56 | 1.343264e+09 | 0.00 | True |

| 4.1 | 30.322559 | 40.720937 | 8.85 | 2012-07-26 00:58:16.720 | 0.203529 | 4 | 5.56 | 1.343264e+09 | 0.16 | False |

| 0.2 | 30.340137 | 40.717813 | 9.15 | 2012-07-26 01:02:53.320 | 0.265464 | 0 | 276.76 | 1.343265e+09 | 276.60 | True |

| 4.2 | 30.322559 | 40.720937 | 8.85 | 2012-07-26 01:02:53.480 | 0.194087 | 4 | 276.76 | 1.343265e+09 | 0.16 | False |

| 8.2 | 30.345996 | 40.717188 | 9.15 | 2012-07-26 01:03:46.920 | 0.243864 | 8 | 330.36 | 1.343265e+09 | 53.44 | False |

The final catalog is made of the unique events only.

[9]:

template_group.catalog.catalog = template_group.catalog.catalog[template_group.catalog.catalog["unique_event"]]

initial_catalog = template_group.catalog.catalog.copy()

[10]:

print(f"There are {len(template_group.catalog.catalog)} events in our template matching catalog!")

template_group.catalog.catalog

There are 55 events in our template matching catalog!

[10]:

| longitude | latitude | depth | origin_time | cc | tid | return_time | origin_time_sec | interevent_time_sec | unique_event | |

|---|---|---|---|---|---|---|---|---|---|---|

| event_id | ||||||||||

| 8.0 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 00:58:11.000 | 0.342058 | 8 | NaN | 1.343264e+09 | 0.00 | True |

| 8.1 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 00:58:16.560 | 0.267058 | 8 | 5.56 | 1.343264e+09 | 0.00 | True |

| 0.2 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:02:53.320 | 0.265464 | 0 | 276.76 | 1.343265e+09 | 276.60 | True |

| 0.3 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:03:46.960 | 0.405439 | 0 | 53.64 | 1.343265e+09 | 0.04 | True |

| 8.3 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 01:09:25.880 | 0.289025 | 8 | 338.96 | 1.343265e+09 | 338.92 | True |

| 0.5 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:10:22.200 | 0.717180 | 0 | 56.28 | 1.343265e+09 | 0.00 | True |

| 8.5 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 01:10:43.960 | 0.589896 | 8 | 21.76 | 1.343265e+09 | 0.04 | True |

| 0.7 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:12:32.920 | 0.315861 | 0 | 108.92 | 1.343265e+09 | 0.04 | True |

| 0.8 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:14.800 | 0.581885 | 0 | 161.88 | 1.343265e+09 | 0.04 | True |

| 0.9 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:22.360 | 0.332758 | 0 | 7.56 | 1.343265e+09 | 0.04 | True |

| 0.10 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:35.120 | 0.286241 | 0 | 12.76 | 1.343265e+09 | 12.64 | True |

| 0.11 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:54.200 | 1.000000 | 0 | 19.08 | 1.343265e+09 | 0.04 | True |

| 0.12 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:16:30.040 | 0.332573 | 0 | 35.84 | 1.343265e+09 | 35.28 | True |

| 0.13 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:18:32.920 | 0.260217 | 0 | 122.88 | 1.343266e+09 | 122.32 | True |

| 1.8 | 30.283008 | 40.669375 | 10.7000 | 2012-07-26 01:35:15.200 | 0.262023 | 1 | 1124.60 | 1.343267e+09 | 0.56 | True |

| 0.14 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:39:53.720 | 0.230063 | 0 | 1280.80 | 1.343267e+09 | 278.52 | True |

| 4.12 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 01:52:28.040 | 0.206217 | 4 | 2034.96 | 1.343268e+09 | 754.32 | True |

| 0.15 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:52:36.480 | 0.394923 | 0 | 762.76 | 1.343268e+09 | 0.04 | True |

| 8.13 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 02:22:49.840 | 0.235595 | 8 | 1813.40 | 1.343269e+09 | 1812.84 | True |

| 0.17 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 02:24:34.960 | 0.469003 | 0 | 105.12 | 1.343269e+09 | 0.04 | True |

| 1.11 | 30.283008 | 40.669375 | 10.7000 | 2012-07-26 02:35:01.640 | 1.000000 | 1 | 626.12 | 1.343270e+09 | 0.40 | True |

| 0.19 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 03:00:38.920 | 0.515621 | 0 | 1537.84 | 1.343272e+09 | 0.04 | True |

| 4.18 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 03:08:33.640 | 0.411452 | 4 | 474.56 | 1.343272e+09 | 0.12 | True |

| 4.19 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 03:10:55.160 | 0.325523 | 4 | 141.52 | 1.343272e+09 | 0.16 | True |

| 4.20 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 04:30:33.640 | 0.190512 | 4 | 4778.48 | 1.343277e+09 | 4778.48 | True |

| 2.4 | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 04:43:38.320 | 1.000000 | 2 | 5704.84 | 1.343278e+09 | 0.04 | True |

| 8.19 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 04:45:03.880 | 0.274806 | 8 | 5648.88 | 1.343278e+09 | 85.36 | True |

| 2.5 | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 04:46:49.240 | 0.324501 | 2 | 190.92 | 1.343278e+09 | 0.04 | True |

| 3.5 | 30.345996 | 40.720312 | 9.3500 | 2012-07-26 04:48:38.520 | 1.000000 | 3 | 109.32 | 1.343278e+09 | 109.28 | True |

| 2.7 | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 04:51:06.600 | 0.347202 | 2 | 148.04 | 1.343278e+09 | 0.04 | True |

| 4.23 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 05:22:04.560 | 0.284128 | 4 | 2005.80 | 1.343280e+09 | 1857.96 | True |

| 4.24 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 05:45:46.120 | 0.183825 | 4 | 1421.56 | 1.343282e+09 | 1421.56 | True |

| 4.25 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 05:46:59.360 | 0.268304 | 4 | 73.24 | 1.343282e+09 | 73.24 | True |

| 4.26 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 05:57:16.560 | 0.216216 | 4 | 617.20 | 1.343282e+09 | 617.20 | True |

| 4.27 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 08:08:25.760 | 1.000000 | 4 | 7869.20 | 1.343290e+09 | 0.12 | True |

| 5.0 | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 09:21:39.360 | 0.206347 | 5 | NaN | 1.343294e+09 | 4393.20 | True |

| 5.1 | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 09:28:48.320 | 0.174713 | 5 | 428.96 | 1.343295e+09 | 428.96 | True |

| 5.2 | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 10:07:23.680 | 1.000000 | 5 | 2315.36 | 1.343297e+09 | 2315.36 | True |

| 4.28 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 10:16:45.880 | 0.358521 | 4 | 7700.12 | 1.343298e+09 | 0.16 | True |

| 4.29 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 10:53:46.680 | 0.239580 | 4 | 2220.80 | 1.343300e+09 | 2220.80 | True |

| 4.30 | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 11:02:23.680 | 0.350599 | 4 | 517.00 | 1.343301e+09 | 0.12 | True |

| 6.0 | 30.287402 | 40.619687 | -0.7500 | 2012-07-26 11:55:36.000 | 1.000000 | 6 | NaN | 1.343304e+09 | 3192.32 | True |

| 7.0 | 30.294238 | 40.794063 | -1.9500 | 2012-07-26 13:35:26.520 | 1.000000 | 7 | NaN | 1.343310e+09 | 5990.52 | True |

| 0.25 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 13:48:32.720 | 0.643463 | 0 | 9969.16 | 1.343311e+09 | 0.04 | True |

| 0.26 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 13:50:18.520 | 0.325642 | 0 | 105.80 | 1.343311e+09 | 0.04 | True |

| 8.24 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 13:51:58.560 | 0.536551 | 8 | 100.08 | 1.343311e+09 | 0.00 | True |

| 0.28 | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 13:53:31.600 | 0.685687 | 0 | 93.04 | 1.343311e+09 | 0.04 | True |

| 8.26 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 13:54:54.160 | 0.409128 | 8 | 82.60 | 1.343311e+09 | 82.04 | True |

| 8.27 | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 13:56:54.200 | 1.000000 | 8 | 120.04 | 1.343311e+09 | 119.48 | True |

| 2.12 | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 14:38:50.520 | 0.244041 | 2 | 2718.96 | 1.343314e+09 | 0.08 | True |

| 3.12 | 30.345996 | 40.720312 | 9.3500 | 2012-07-26 14:49:28.440 | 0.233022 | 3 | 638.00 | 1.343314e+09 | 637.92 | True |

| 9.0 | 30.328052 | 40.600078 | -1.9875 | 2012-07-26 15:06:20.080 | 1.000000 | 9 | NaN | 1.343315e+09 | 1011.64 | True |

| 5.3 | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 16:26:52.520 | 0.252150 | 5 | 22768.84 | 1.343320e+09 | 4832.44 | True |

| 5.4 | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 16:33:57.640 | 0.170999 | 5 | 425.12 | 1.343320e+09 | 425.12 | True |

| 10.0 | 30.310352 | 40.654375 | -0.5000 | 2012-07-26 17:28:21.640 | 1.000000 | 10 | NaN | 1.343324e+09 | 3264.00 | True |

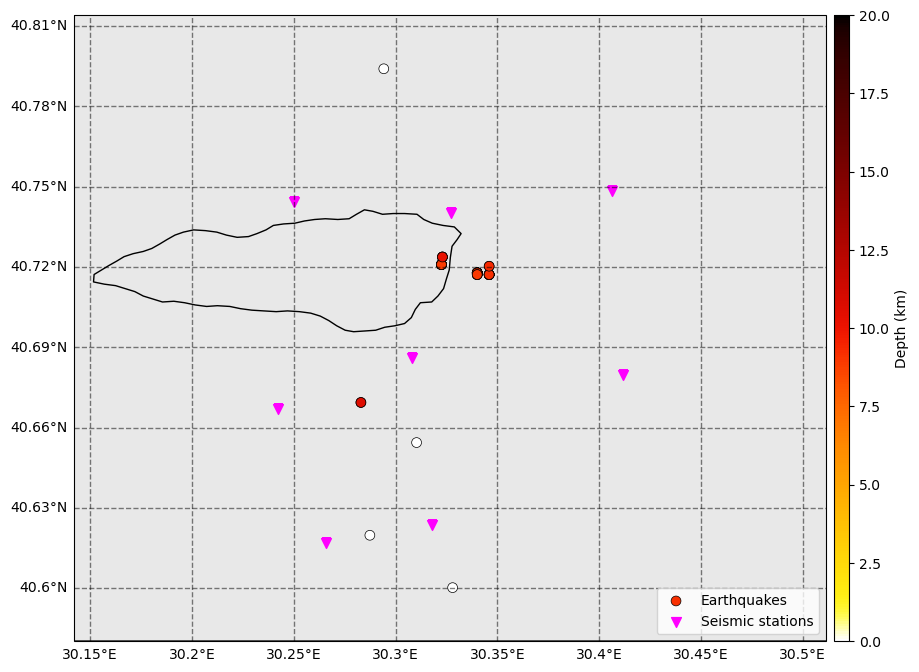

Let’s plot these events on a map. You will see that there are far fewer dots on the map than the total number of earthquakes in our catalog… This is because all newly detected events are attributed their template locations. Therefore, most events are plotted at the exact same location.

[11]:

fig = template_group.catalog.plot_map(

figsize=(10, 10), network=net, s=50, markersize_station=50, lat_margin=0.02, plot_uncertainties=False

)

ax = fig.get_axes()[0]

ax.set_facecolor("dimgrey")

ax.patch.set_alpha(0.15)

Bonus: Relocate each events

In your workflow, you might be ok with the approximate locations of the template matching catalog. However, if you decide to keep refining the catalog, here are some suggestions to get started.

One possibility to start refining the locations of all events is to re-run the same process as in notebook 6, namely PhaseNet and NonLinLoc.

[12]:

# PhaseNet picking parameters

# PhaseNet was trained for 100Hz data. Even if we saw that running PhaseNet on 25Hz data

# was good for backprojection, here, picking benefits from running PhaseNet on 100Hz data.

# Thus, we will upsample the waveforms before running PhaseNet.

PHASENET_SAMPLING_RATE_HZ = 100.

UPSAMPLING_BEFORE_PN_RELOCATION = int(PHASENET_SAMPLING_RATE_HZ/BPMF.cfg.SAMPLING_RATE_HZ)

DOWNSAMPLING_BEFORE_PN_RELOCATION = 1

# DURATION_SEC: the duration, in seconds, of the data stream starting at the detection time

# defined by Event.origin_time. This data stream is used for picking the P/S waves.

DURATION_SEC = 60.0

# THRESHOLD_P: probability of P-wave arrival above which we declare a pick. If several picks are

# declared during the DURATION_SEC data stream, we only keep the best one. We can

# afford using a low probability threshold since we already know with some confidence

# that an earthquake is in the data stream.

THRESHOLD_P = 0.10

# THRESHOLD_S: probability of S-wave arrival above which we declare a pick.

THRESHOLD_S = 0.10

# DATA_FOLDER: name of the folder where the waveforms we want to use for picking are stored

DATA_FOLDER = "preprocessed_2_12"

# COMPONENT_ALIASES: A dictionary that defines the possible channel names to search for

# for example, the seismometer might not be oriented and the horizontal channels

# might be named 1 and 2, in which case we arbitrarily decide to take 1 as the "N" channel

# and 2 as the "E" channel. This doesn't matter for picking P- and S-wave arrival times.

COMPONENT_ALIASES = {"N": ["N", "1"], "E": ["E", "2"], "Z": ["Z"]}

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "P"}

# USE_APRIORI_PICKS: boolean. This option is IMPORTANT when running BPMF in HIGH SEISMICITY CONTEXTS, like

# during the aftershock sequence of a large earthquake. If there are many events happening

# close to each other in time, we need to guide PhaseNet to pick the right set of picks.

# For that, we use the predicted P- and S-wave times from backprojection to add extra weight to

# the picks closer to those times and make it more likely to identify them as the "best" picks.

# WARNING: If there are truly many events, even this trick might fail. It's because "phase association"

# is an intrinsically hard problem in this case, and the picking might be hard to do automatically.

USE_APRIORI_PICKS = True

#SEARCH_WIN_SEC: When `use_apriori_picks=True`, the P/S picks in the `DURATION_SEC`-long window are weighted

# using a gaussian centered on the predicted P/S wave arrivals and with standard deviation

# equal to `SEARCH_WIN_SEC`. Thus, the picks outside of +/- `3*SEARCH_WIN_SEC` are essentially

# given a weight of 0.

SEARCH_WIN_SEC = 1.0

# MAX_HORIZONTAL_UNC_KM: Horizontal location uncertainty, in km, above which we keep the template location

MAX_HORIZONTAL_UNC_KM = 10.

# location parameters

LOCATION_ROUTINE = "NLLoc"

# NLLOC_METHOD: string that defines what loss function is used by NLLoc, see http://alomax.free.fr/nlloc/ for more info.

# Using some flavor of 'EDT' is important to obtain robust locations that are not sensitive to pick outliers.

NLLOC_METHOD = "EDT_OT_WT_ML"

# MINIMUM_NUM_STATIONS_W_PICKS: minimum number of stations with picks to even try relocation.

MINIMUM_NUM_STATIONS_W_PICKS = 3

# we set a maximum tolerable difference, in percentage, between the picked time and the predicted travel time

MAX_TIME_DIFFERENT_PICKS_PREDICTED_PERCENT = 25

[13]:

def ml_picker(x, chunk_size=3000, device="cpu"):

"""ML-based seismic phase picker using PhaseNet.

For maximum flexibility, all interfacing with phase pickers is done through

wrapper functions like this one. This function must behave like:

`y = f(x)`,

where `y` are the time series of P- and S-wave probabilities. This function must

include all preprocessing steps that may be required, like chunking and normalization.

Parameters

----------

x : np.ndarray

Seismograms, shape (n_stations, n_channels=3, n_times).

chunk_size : int, optional

Size of the seismograms, in samples, used in the training of the ML model.

Default is 3000 (30 seconds at 100 Hz).

device : str, optional

Device to use to run the ML model, either "cpu" or "gpu". Default is

Returns

-------

ps_proba_time_series : np.ndarray

Time series of P- and S-wave probabilities, shape (n_stations, n_phases, n_times).

The first channel, `ps_proba_time_series[:, 0, :]` is the P-wave probability,

and the second, `ps_proba_time_series[:, 1, :]`, is the S-wave probability.

"""

from torch import no_grad, from_numpy

_ml_detector = sbm.PhaseNet.from_pretrained("original")

_ml_detector.eval()

if device == "gpu":

_ml_detector.to("cuda")

x = BPMF.utils.normalize_batch(x, normalization_window_sample=chunk_size)

with no_grad():

x = from_numpy(x).float().to("cuda" if device == "gpu" else "cpu")

ps_proba_time_series = _ml_detector(x)

# remove channel 0, which is probability of noise

return ps_proba_time_series[:, 1:, :].cpu().detach().numpy()

[14]:

events = {}

for i in tqdm(range(len(template_group.catalog.catalog)), desc="Relocating each individual event"):

row = template_group.catalog.catalog.iloc[i]

tid, evidx = row.name.split(".")

# get the template instance from template_group

template = template_group.templates[template_group.tindexes.loc[int(tid)]]

# this is the filename of the database where template tid's detected events were stored

detection_db_filename = f"detections_template{tid}.h5"

db_path = os.path.join(BPMF.cfg.OUTPUT_PATH, MATCHED_FILTER_DB)

with h5.File(os.path.join(db_path, detection_db_filename), mode="r") as fdet:

keys = list(fdet.keys())

event = BPMF.dataset.Event.read_from_file(

hdf5_file=fdet[keys[int(evidx)]], data_reader=data_reader_mseed

)

# set arrival_times attribute to re-pick P-/S-wave arrivals with prior information

event.set_arrival_times_from_moveouts(verbose=0)

# # attach data reader this way (note: conflict with data_reader argument in phasenet's wrapper module)

# event.data_reader = data_reader_mseed

# pick P-/S-wave arrivals

event.pick_PS_phases(

ml_picker,

DURATION_SEC,

threshold_P=THRESHOLD_P,

threshold_S=THRESHOLD_S,

component_aliases=COMPONENT_ALIASES,

data_folder=DATA_FOLDER,

upsampling=UPSAMPLING_BEFORE_PN_RELOCATION,

downsampling=DOWNSAMPLING_BEFORE_PN_RELOCATION,

use_apriori_picks=USE_APRIORI_PICKS,

keep_probability_time_series=True,

)

if len(event.picks.dropna(how="any")) >= MINIMUM_NUM_STATIONS_W_PICKS:

# first relocation, insensitive to outliers

event.relocate(

stations=net.stations, routine=LOCATION_ROUTINE, method=NLLOC_METHOD,

)

if "NLLoc_success" in event.aux_data:

# this variable was inserted into ev.aux_data if NLLoc successfully located the event

# use predicted times to remove outlier picks

event.remove_outlier_picks(max_diff_percent=MAX_TIME_DIFFERENT_PICKS_PREDICTED_PERCENT)

if len(event.picks.dropna(how="any")) >= MINIMUM_NUM_STATIONS_W_PICKS:

# first relocation, insensitive to outliers

event.relocate(

stations=net.stations, routine=LOCATION_ROUTINE, method=NLLOC_METHOD,

)

else:

del event.aux_data["NLLoc_success"]

events[row.name] = event

if ("NLLoc_success" in event.aux_data) and event.aux_data["NLLoc_success"] and (event.hmax_unc) < MAX_HORIZONTAL_UNC_KM:

pass

else:

# relocation was not successful, inherit location from template

tid = template_group.catalog.catalog.loc[row.name, "tid"]

event.inherit_location(template_group.templates[template_group.tindexes.loc[tid]])

template_group.catalog.catalog.loc[row.name, "longitude"] = event.longitude

template_group.catalog.catalog.loc[row.name, "latitude"] = event.latitude

template_group.catalog.catalog.loc[row.name, "depth"] = event.depth

template_group.catalog.catalog.loc[row.name, "origin_time"] = event.origin_time

template_group.catalog.catalog.loc[row.name, "hmax_unc"] = event.hmax_unc

template_group.catalog.catalog.loc[row.name, "hmin_unc"] = event.hmin_unc

template_group.catalog.catalog.loc[row.name, "vmax_unc"] = event.vmax_unc

template_group.catalog.catalog.loc[row.name, "az_hmax_unc"] = event.az_hmax_unc

Relocating each individual event: 0%| | 0/55 [00:00<?, ?it/s]/tmp/ipykernel_2939694/1648401400.py:58: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '2012-07-26T00:58:11.000000Z' has dtype incompatible with datetime64[ms], please explicitly cast to a compatible dtype first.

template_group.catalog.catalog.loc[row.name, "origin_time"] = event.origin_time

Relocating each individual event: 100%|██████████| 55/55 [00:23<00:00, 2.38it/s]

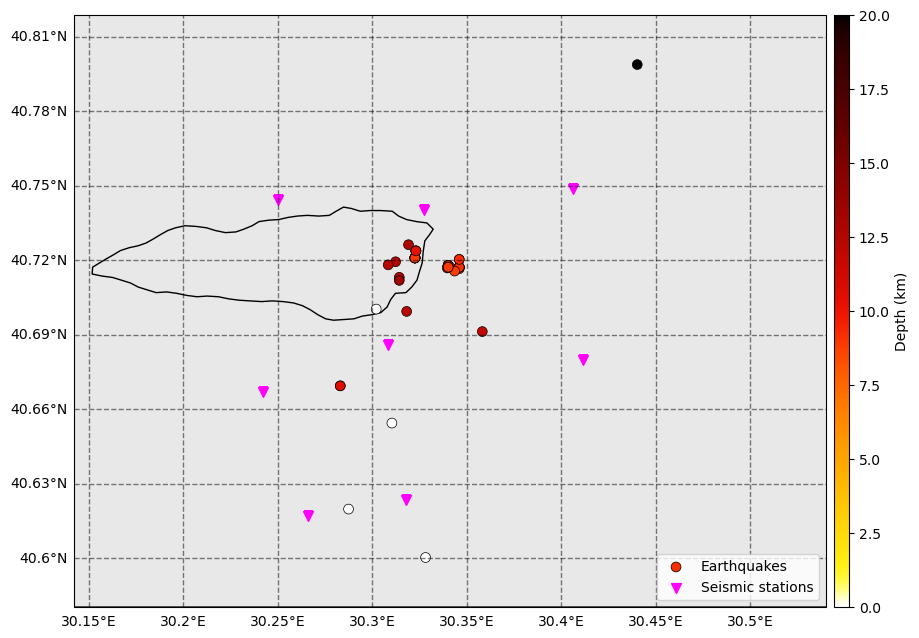

[15]:

fig = template_group.catalog.plot_map(

figsize=(10, 10), network=net, s=50, markersize_station=50, lat_margin=0.02, plot_uncertainties=False

)

ax = fig.get_axes()[0]

ax.set_facecolor("dimgrey")

ax.patch.set_alpha(0.15)

Assemble the backprojection and template matching catalog

When selecting the template events from the backprojection catalog, we imposed some quality criteria that might have thrown out some events that we still want in our final catalog. Here, we make a simple comparison of the backprojection and template matching catalogs to find these missing events and add them to the final catalog.

[16]:

BACKPROJECTION_CATALOG_FILENAME = "backprojection_catalog.csv"

[17]:

tm_catalog = template_group.catalog.catalog.copy()

tm_catalog.reset_index(inplace=True)

for i in range(len(tm_catalog)):

tm_catalog.loc[i, "event_id"] = f"tm_{tm_catalog.loc[i, 'event_id']}"

tm_catalog

[17]:

| event_id | longitude | latitude | depth | origin_time | cc | tid | return_time | origin_time_sec | interevent_time_sec | unique_event | hmax_unc | hmin_unc | vmax_unc | az_hmax_unc | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | tm_8.0 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T00:58:11.000000Z | 0.342058 | 8 | NaN | 1.343264e+09 | 0.00 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 |

| 1 | tm_8.1 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T00:58:16.560000Z | 0.267058 | 8 | 5.56 | 1.343264e+09 | 0.00 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 |

| 2 | tm_0.2 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:02:53.320000Z | 0.265464 | 0 | 276.76 | 1.343265e+09 | 276.60 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 3 | tm_0.3 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:03:46.960000Z | 0.405439 | 0 | 53.64 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 4 | tm_8.3 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T01:09:25.880000Z | 0.289025 | 8 | 338.96 | 1.343265e+09 | 338.92 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 |

| 5 | tm_0.5 | 30.319141 | 40.726250 | 12.600 | 2012-07-26T01:10:21.760000Z | 0.717180 | 0 | 56.28 | 1.343265e+09 | 0.00 | True | 1.715140 | 1.493157 | 2.479309 | 146.441208 |

| 6 | tm_8.5 | 30.318164 | 40.699375 | 12.300 | 2012-07-26T01:10:43.920000Z | 0.589896 | 8 | 21.76 | 1.343265e+09 | 0.04 | True | 3.210000 | 1.431238 | 3.338822 | 164.680389 |

| 7 | tm_0.7 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:12:32.920000Z | 0.315861 | 0 | 108.92 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 8 | tm_0.8 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:15:14.040000Z | 0.581885 | 0 | 161.88 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 9 | tm_0.9 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:15:14.000000Z | 0.332758 | 0 | 7.56 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 10 | tm_0.10 | 30.345996 | 40.716563 | 9.050 | 2012-07-26T01:15:54.200000Z | 0.286241 | 0 | 12.76 | 1.343265e+09 | 12.64 | True | 1.196124 | 0.865575 | 2.519593 | 77.244270 |

| 11 | tm_0.11 | 30.339648 | 40.716875 | 9.100 | 2012-07-26T01:15:54.240000Z | 1.000000 | 0 | 19.08 | 1.343265e+09 | 0.04 | True | 2.027819 | 1.159840 | 2.352297 | 89.593399 |

| 12 | tm_0.12 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:16:30.040000Z | 0.332573 | 0 | 35.84 | 1.343265e+09 | 35.28 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 13 | tm_0.13 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:18:32.920000Z | 0.260217 | 0 | 122.88 | 1.343266e+09 | 122.32 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 14 | tm_1.8 | 30.283008 | 40.669375 | 10.700 | 2012-07-26T01:35:15.200000Z | 0.262023 | 1 | 1124.60 | 1.343267e+09 | 0.56 | True | 5.237969 | 2.169321 | 3.042312 | 46.649498 |

| 15 | tm_0.14 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:39:55.520000Z | 0.230063 | 0 | 1280.80 | 1.343267e+09 | 278.52 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 16 | tm_4.12 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T01:52:28.040000Z | 0.206217 | 4 | 2034.96 | 1.343268e+09 | 754.32 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 17 | tm_0.15 | 30.358203 | 40.691250 | 11.800 | 2012-07-26T01:52:36.480000Z | 0.394923 | 0 | 762.76 | 1.343268e+09 | 0.04 | True | 6.283857 | 2.552138 | 5.081644 | 110.047188 |

| 18 | tm_8.13 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T02:22:49.840000Z | 0.235595 | 8 | 1813.40 | 1.343269e+09 | 1812.84 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 |

| 19 | tm_0.17 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T02:24:36.560000Z | 0.469003 | 0 | 105.12 | 1.343269e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 20 | tm_1.11 | 30.283008 | 40.669375 | 10.700 | 2012-07-26T02:35:01.200000Z | 1.000000 | 1 | 626.12 | 1.343270e+09 | 0.40 | True | 5.237969 | 2.169321 | 3.042312 | 46.649498 |

| 21 | tm_0.19 | 30.314258 | 40.713125 | 12.100 | 2012-07-26T03:00:38.720000Z | 0.515621 | 0 | 1537.84 | 1.343272e+09 | 0.04 | True | 1.815854 | 1.211303 | 3.207473 | 88.635719 |

| 22 | tm_4.18 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T03:08:33.640000Z | 0.411452 | 4 | 474.56 | 1.343272e+09 | 0.12 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 23 | tm_4.19 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T03:10:55.160000Z | 0.325523 | 4 | 141.52 | 1.343272e+09 | 0.16 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 24 | tm_4.20 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T04:30:33.640000Z | 0.190512 | 4 | 4778.48 | 1.343277e+09 | 4778.48 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 25 | tm_2.4 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:43:38.320000Z | 1.000000 | 2 | 5704.84 | 1.343278e+09 | 0.04 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 |

| 26 | tm_8.19 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T04:45:03.880000Z | 0.274806 | 8 | 5648.88 | 1.343278e+09 | 85.36 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 |

| 27 | tm_2.5 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:46:49.240000Z | 0.324501 | 2 | 190.92 | 1.343278e+09 | 0.04 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 |

| 28 | tm_3.5 | 30.345996 | 40.720312 | 9.350 | 2012-07-26T04:48:38.480000Z | 1.000000 | 3 | 109.32 | 1.343278e+09 | 109.28 | True | 1.817742 | 0.925883 | 2.592613 | 94.536827 |

| 29 | tm_2.7 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:51:06.600000Z | 0.347202 | 2 | 148.04 | 1.343278e+09 | 0.04 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 |

| 30 | tm_4.23 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:22:04.560000Z | 0.284128 | 4 | 2005.80 | 1.343280e+09 | 1857.96 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 31 | tm_4.24 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:45:46.120000Z | 0.183825 | 4 | 1421.56 | 1.343282e+09 | 1421.56 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 32 | tm_4.25 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:46:59.360000Z | 0.268304 | 4 | 73.24 | 1.343282e+09 | 73.24 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 33 | tm_4.26 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:57:16.560000Z | 0.216216 | 4 | 617.20 | 1.343282e+09 | 617.20 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 34 | tm_4.27 | 30.302051 | 40.700313 | -1.950 | 2012-07-26T08:08:25.600000Z | 1.000000 | 4 | 7869.20 | 1.343290e+09 | 0.12 | True | 3.270674 | 1.723631 | 8.185627 | 82.300278 |

| 35 | tm_5.0 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T09:21:39.360000Z | 0.206347 | 5 | NaN | 1.343294e+09 | 4393.20 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 |

| 36 | tm_5.1 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T09:28:48.320000Z | 0.174713 | 5 | 428.96 | 1.343295e+09 | 428.96 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 |

| 37 | tm_5.2 | 30.341113 | 40.717188 | 8.850 | 2012-07-26T10:07:23.680000Z | 1.000000 | 5 | 2315.36 | 1.343297e+09 | 2315.36 | True | 1.659967 | 0.930441 | 2.463023 | 89.344856 |

| 38 | tm_4.28 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T10:16:45.880000Z | 0.358521 | 4 | 7700.12 | 1.343298e+09 | 0.16 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 39 | tm_4.29 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T10:53:46.680000Z | 0.239580 | 4 | 2220.80 | 1.343300e+09 | 2220.80 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 40 | tm_4.30 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T11:02:23.680000Z | 0.350599 | 4 | 517.00 | 1.343301e+09 | 0.12 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 |

| 41 | tm_6.0 | 30.287402 | 40.619687 | -0.750 | 2012-07-26T11:55:35.560000Z | 1.000000 | 6 | NaN | 1.343304e+09 | 3192.32 | True | 4.696620 | 1.133235 | 4.342819 | 144.824448 |

| 42 | tm_7.0 | 30.440234 | 40.798750 | 28.600 | 2012-07-26T13:35:24.400000Z | 1.000000 | 7 | NaN | 1.343310e+09 | 5990.52 | True | 4.682024 | 1.871417 | 20.819151 | 102.730064 |

| 43 | tm_0.25 | 30.312305 | 40.719375 | 12.300 | 2012-07-26T13:48:32.440000Z | 0.643463 | 0 | 9969.16 | 1.343311e+09 | 0.04 | True | 1.613540 | 1.379402 | 2.785293 | -158.947372 |

| 44 | tm_0.26 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T13:50:18.520000Z | 0.325642 | 0 | 105.80 | 1.343311e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 |

| 45 | tm_8.24 | 30.314258 | 40.711875 | 13.500 | 2012-07-26T13:51:58.200000Z | 0.536551 | 8 | 100.08 | 1.343311e+09 | 0.00 | True | 1.860965 | 1.810982 | 3.401975 | 139.211029 |

| 46 | tm_0.28 | 30.308398 | 40.718125 | 12.300 | 2012-07-26T13:53:31.360000Z | 0.685687 | 0 | 93.04 | 1.343311e+09 | 0.04 | True | 1.603626 | 1.453808 | 2.882052 | -142.896729 |

| 47 | tm_8.26 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T13:54:54.160000Z | 0.409128 | 8 | 82.60 | 1.343311e+09 | 82.04 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 |

| 48 | tm_8.27 | 30.343555 | 40.715625 | 8.900 | 2012-07-26T13:56:54.280000Z | 1.000000 | 8 | 120.04 | 1.343311e+09 | 119.48 | True | 1.992835 | 1.122762 | 3.628948 | 95.300972 |

| 49 | tm_2.12 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T14:38:50.520000Z | 0.244041 | 2 | 2718.96 | 1.343314e+09 | 0.08 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 |

| 50 | tm_3.12 | 30.345996 | 40.720312 | 9.350 | 2012-07-26T14:49:28.440000Z | 0.233022 | 3 | 638.00 | 1.343314e+09 | 637.92 | True | 1.817742 | 0.925883 | 2.592613 | 94.536827 |

| 51 | tm_9.0 | 30.328174 | 40.600156 | -1.975 | 2012-07-26T15:06:20.120000Z | 1.000000 | 9 | NaN | 1.343315e+09 | 1011.64 | True | 2.859252 | 1.706538 | 2.957412 | 145.703659 |

| 52 | tm_5.3 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T16:26:52.520000Z | 0.252150 | 5 | 22768.84 | 1.343320e+09 | 4832.44 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 |

| 53 | tm_5.4 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T16:33:57.640000Z | 0.170999 | 5 | 425.12 | 1.343320e+09 | 425.12 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 |

| 54 | tm_10.0 | 30.310352 | 40.654375 | -0.500 | 2012-07-26T17:28:20.200000Z | 1.000000 | 10 | NaN | 1.343324e+09 | 3264.00 | True | 4.602456 | 1.586548 | 5.752606 | 63.806900 |

[18]:

bp_catalog = pd.read_csv(

os.path.join(BPMF.cfg.OUTPUT_PATH, BACKPROJECTION_CATALOG_FILENAME),

index_col=0

)

# convert origin times from string to pandas.Timestamp

bp_catalog["origin_time"] = pd.to_datetime(bp_catalog["origin_time"])

bp_catalog

[18]:

| longitude | latitude | depth | origin_time | hmax_unc | hmin_unc | az_hmax_unc | vmax_unc | event_id.1 | |

|---|---|---|---|---|---|---|---|---|---|

| event_id | |||||||||

| 2012-07-26T01:10:21.720000Z | 30.319141 | 40.728750 | 12.6000 | 2012-07-26 01:10:21.720 | 1.713889 | 1.438382 | 150.388474 | 2.443677 | bp_0 |

| 2012-07-26T01:15:54.200000Z | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:54.200 | 1.766185 | 0.957067 | 93.146858 | 2.177404 | bp_1 |

| 2012-07-26T01:57:57.600000Z | 30.210000 | 40.600000 | -1.5000 | 2012-07-26 01:57:57.600 | 15.000000 | 15.000000 | 0.000000 | 15.000000 | bp_2 |

| 2012-07-26T02:35:01.640000Z | 30.283008 | 40.669375 | 10.7000 | 2012-07-26 02:35:01.640 | 5.237969 | 2.169321 | 46.649498 | 3.042312 | bp_3 |

| 2012-07-26T03:00:38.760000Z | 30.314258 | 40.711875 | 11.9000 | 2012-07-26 03:00:38.760 | 1.722674 | 1.167362 | 88.518181 | 3.007942 | bp_4 |

| 2012-07-26T04:43:38.320000Z | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 04:43:38.320 | 5.539433 | 2.653419 | 131.163864 | 4.183529 | bp_5 |

| 2012-07-26T04:48:38.520000Z | 30.345996 | 40.720312 | 9.3500 | 2012-07-26 04:48:38.520 | 1.817742 | 0.925883 | 94.536827 | 2.592613 | bp_6 |

| 2012-07-26T08:08:25.760000Z | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 08:08:25.760 | 1.209022 | 0.942840 | 127.960640 | 2.648956 | bp_7 |

| 2012-07-26T10:07:23.680000Z | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 10:07:23.680 | 1.501076 | 0.950018 | 91.866226 | 2.387271 | bp_8 |

| 2012-07-26T11:55:36.000000Z | 30.287402 | 40.619687 | -0.7500 | 2012-07-26 11:55:36.000 | 4.696620 | 1.133235 | 144.824448 | 4.342819 | bp_9 |

| 2012-07-26T13:35:26.520000Z | 30.294238 | 40.794063 | -1.9500 | 2012-07-26 13:35:26.520 | 2.955352 | 0.966323 | 87.058800 | 3.966837 | bp_10 |

| 2012-07-26T13:48:32.400000Z | 30.312305 | 40.720625 | 12.3000 | 2012-07-26 13:48:32.400 | 1.546550 | 1.414732 | 21.012421 | 2.729270 | bp_11 |

| 2012-07-26T13:51:58.200000Z | 30.314258 | 40.711875 | 13.5000 | 2012-07-26 13:51:58.200 | 1.741413 | 1.491587 | 166.295975 | 3.198883 | bp_12 |

| 2012-07-26T13:53:31.360000Z | 30.312305 | 40.720625 | 12.1000 | 2012-07-26 13:53:31.360 | 1.578368 | 1.425255 | 31.847794 | 2.887139 | bp_13 |

| 2012-07-26T13:56:54.200000Z | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 13:56:54.200 | 2.007848 | 0.880852 | 86.483894 | 3.057234 | bp_14 |

| 2012-07-26T15:06:20.120000Z | 30.328052 | 40.600078 | -1.9875 | 2012-07-26 15:06:20.120 | 0.870097 | 0.518110 | 94.459953 | 0.358991 | bp_15 |

| 2012-07-26T17:28:21.640000Z | 30.310352 | 40.654375 | -0.5000 | 2012-07-26 17:28:21.640 | 4.602456 | 1.586548 | 63.806900 | 5.752606 | bp_16 |

[19]:

# catalog merging parameters

dt_criterion_pd = pd.Timedelta(DT_CRITERION_SEC, "s")

HMAX_UNC_CRITERION_KM = 10.

[20]:

combined_catalog = tm_catalog.copy()

missing_event = np.zeros(len(bp_catalog), dtype=bool)

for i in tqdm(range(len(bp_catalog)), desc="Loop through BP cat"):

if bp_catalog.iloc[i]["hmax_unc"] > HMAX_UNC_CRITERION_KM:

continue

t_min = bp_catalog.iloc[i]["origin_time"] - dt_criterion_pd

t_max = bp_catalog.iloc[i]["origin_time"] + dt_criterion_pd

subset_tm = (tm_catalog["origin_time"] > t_min) & (tm_catalog["origin_time"] < t_max)

if np.sum(subset_tm) == 0:

missing_event[i] = True

combined_catalog = pd.concat(

(tm_catalog, bp_catalog[missing_event]),

ignore_index=True

)

combined_catalog.sort_values(

"origin_time", ascending=True, inplace=True

)

Loop through BP cat: 0%| | 0/17 [00:00<?, ?it/s]Loop through BP cat: 100%|██████████| 17/17 [00:00<00:00, 664.47it/s]

/tmp/ipykernel_2939694/2661912240.py:13: FutureWarning: The behavior of DataFrame concatenation with empty or all-NA entries is deprecated. In a future version, this will no longer exclude empty or all-NA columns when determining the result dtypes. To retain the old behavior, exclude the relevant entries before the concat operation.

combined_catalog = pd.concat(

And here is the combined catalog! In this simple example, it appears that all events in the backprojection catalog were re-detected in the template matching catalog so that the final catalog has no extra events with respect to the template matching catalog. However, it may not be always like that. Some events in the backprojection catalog may not have been well enough located for you to use them as templates, and they may not have been subsequently re-detected in the template matching catalog.

[21]:

combined_catalog

[21]:

| event_id | longitude | latitude | depth | origin_time | cc | tid | return_time | origin_time_sec | interevent_time_sec | unique_event | hmax_unc | hmin_unc | vmax_unc | az_hmax_unc | event_id.1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | tm_8.0 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T00:58:11.000000Z | 0.342058 | 8.0 | NaN | 1.343264e+09 | 0.00 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| 1 | tm_8.1 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T00:58:16.560000Z | 0.267058 | 8.0 | 5.56 | 1.343264e+09 | 0.00 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| 2 | tm_0.2 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:02:53.320000Z | 0.265464 | 0.0 | 276.76 | 1.343265e+09 | 276.60 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 3 | tm_0.3 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:03:46.960000Z | 0.405439 | 0.0 | 53.64 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 4 | tm_8.3 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T01:09:25.880000Z | 0.289025 | 8.0 | 338.96 | 1.343265e+09 | 338.92 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| 5 | tm_0.5 | 30.319141 | 40.726250 | 12.600 | 2012-07-26T01:10:21.760000Z | 0.717180 | 0.0 | 56.28 | 1.343265e+09 | 0.00 | True | 1.715140 | 1.493157 | 2.479309 | 146.441208 | NaN |

| 6 | tm_8.5 | 30.318164 | 40.699375 | 12.300 | 2012-07-26T01:10:43.920000Z | 0.589896 | 8.0 | 21.76 | 1.343265e+09 | 0.04 | True | 3.210000 | 1.431238 | 3.338822 | 164.680389 | NaN |

| 7 | tm_0.7 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:12:32.920000Z | 0.315861 | 0.0 | 108.92 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 9 | tm_0.9 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:15:14.000000Z | 0.332758 | 0.0 | 7.56 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 8 | tm_0.8 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:15:14.040000Z | 0.581885 | 0.0 | 161.88 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 10 | tm_0.10 | 30.345996 | 40.716563 | 9.050 | 2012-07-26T01:15:54.200000Z | 0.286241 | 0.0 | 12.76 | 1.343265e+09 | 12.64 | True | 1.196124 | 0.865575 | 2.519593 | 77.244270 | NaN |

| 11 | tm_0.11 | 30.339648 | 40.716875 | 9.100 | 2012-07-26T01:15:54.240000Z | 1.000000 | 0.0 | 19.08 | 1.343265e+09 | 0.04 | True | 2.027819 | 1.159840 | 2.352297 | 89.593399 | NaN |

| 12 | tm_0.12 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:16:30.040000Z | 0.332573 | 0.0 | 35.84 | 1.343265e+09 | 35.28 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 13 | tm_0.13 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:18:32.920000Z | 0.260217 | 0.0 | 122.88 | 1.343266e+09 | 122.32 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 14 | tm_1.8 | 30.283008 | 40.669375 | 10.700 | 2012-07-26T01:35:15.200000Z | 0.262023 | 1.0 | 1124.60 | 1.343267e+09 | 0.56 | True | 5.237969 | 2.169321 | 3.042312 | 46.649498 | NaN |

| 15 | tm_0.14 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:39:55.520000Z | 0.230063 | 0.0 | 1280.80 | 1.343267e+09 | 278.52 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 16 | tm_4.12 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T01:52:28.040000Z | 0.206217 | 4.0 | 2034.96 | 1.343268e+09 | 754.32 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 17 | tm_0.15 | 30.358203 | 40.691250 | 11.800 | 2012-07-26T01:52:36.480000Z | 0.394923 | 0.0 | 762.76 | 1.343268e+09 | 0.04 | True | 6.283857 | 2.552138 | 5.081644 | 110.047188 | NaN |

| 18 | tm_8.13 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T02:22:49.840000Z | 0.235595 | 8.0 | 1813.40 | 1.343269e+09 | 1812.84 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| 19 | tm_0.17 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T02:24:36.560000Z | 0.469003 | 0.0 | 105.12 | 1.343269e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 20 | tm_1.11 | 30.283008 | 40.669375 | 10.700 | 2012-07-26T02:35:01.200000Z | 1.000000 | 1.0 | 626.12 | 1.343270e+09 | 0.40 | True | 5.237969 | 2.169321 | 3.042312 | 46.649498 | NaN |

| 21 | tm_0.19 | 30.314258 | 40.713125 | 12.100 | 2012-07-26T03:00:38.720000Z | 0.515621 | 0.0 | 1537.84 | 1.343272e+09 | 0.04 | True | 1.815854 | 1.211303 | 3.207473 | 88.635719 | NaN |

| 22 | tm_4.18 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T03:08:33.640000Z | 0.411452 | 4.0 | 474.56 | 1.343272e+09 | 0.12 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 23 | tm_4.19 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T03:10:55.160000Z | 0.325523 | 4.0 | 141.52 | 1.343272e+09 | 0.16 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 24 | tm_4.20 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T04:30:33.640000Z | 0.190512 | 4.0 | 4778.48 | 1.343277e+09 | 4778.48 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 25 | tm_2.4 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:43:38.320000Z | 1.000000 | 2.0 | 5704.84 | 1.343278e+09 | 0.04 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| 26 | tm_8.19 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T04:45:03.880000Z | 0.274806 | 8.0 | 5648.88 | 1.343278e+09 | 85.36 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| 27 | tm_2.5 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:46:49.240000Z | 0.324501 | 2.0 | 190.92 | 1.343278e+09 | 0.04 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| 28 | tm_3.5 | 30.345996 | 40.720312 | 9.350 | 2012-07-26T04:48:38.480000Z | 1.000000 | 3.0 | 109.32 | 1.343278e+09 | 109.28 | True | 1.817742 | 0.925883 | 2.592613 | 94.536827 | NaN |

| 29 | tm_2.7 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:51:06.600000Z | 0.347202 | 2.0 | 148.04 | 1.343278e+09 | 0.04 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| 30 | tm_4.23 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:22:04.560000Z | 0.284128 | 4.0 | 2005.80 | 1.343280e+09 | 1857.96 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 31 | tm_4.24 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:45:46.120000Z | 0.183825 | 4.0 | 1421.56 | 1.343282e+09 | 1421.56 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 32 | tm_4.25 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:46:59.360000Z | 0.268304 | 4.0 | 73.24 | 1.343282e+09 | 73.24 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 33 | tm_4.26 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:57:16.560000Z | 0.216216 | 4.0 | 617.20 | 1.343282e+09 | 617.20 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 34 | tm_4.27 | 30.302051 | 40.700313 | -1.950 | 2012-07-26T08:08:25.600000Z | 1.000000 | 4.0 | 7869.20 | 1.343290e+09 | 0.12 | True | 3.270674 | 1.723631 | 8.185627 | 82.300278 | NaN |

| 35 | tm_5.0 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T09:21:39.360000Z | 0.206347 | 5.0 | NaN | 1.343294e+09 | 4393.20 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| 36 | tm_5.1 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T09:28:48.320000Z | 0.174713 | 5.0 | 428.96 | 1.343295e+09 | 428.96 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| 37 | tm_5.2 | 30.341113 | 40.717188 | 8.850 | 2012-07-26T10:07:23.680000Z | 1.000000 | 5.0 | 2315.36 | 1.343297e+09 | 2315.36 | True | 1.659967 | 0.930441 | 2.463023 | 89.344856 | NaN |

| 38 | tm_4.28 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T10:16:45.880000Z | 0.358521 | 4.0 | 7700.12 | 1.343298e+09 | 0.16 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 39 | tm_4.29 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T10:53:46.680000Z | 0.239580 | 4.0 | 2220.80 | 1.343300e+09 | 2220.80 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 40 | tm_4.30 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T11:02:23.680000Z | 0.350599 | 4.0 | 517.00 | 1.343301e+09 | 0.12 | True | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| 41 | tm_6.0 | 30.287402 | 40.619687 | -0.750 | 2012-07-26T11:55:35.560000Z | 1.000000 | 6.0 | NaN | 1.343304e+09 | 3192.32 | True | 4.696620 | 1.133235 | 4.342819 | 144.824448 | NaN |

| 42 | tm_7.0 | 30.440234 | 40.798750 | 28.600 | 2012-07-26T13:35:24.400000Z | 1.000000 | 7.0 | NaN | 1.343310e+09 | 5990.52 | True | 4.682024 | 1.871417 | 20.819151 | 102.730064 | NaN |

| 43 | tm_0.25 | 30.312305 | 40.719375 | 12.300 | 2012-07-26T13:48:32.440000Z | 0.643463 | 0.0 | 9969.16 | 1.343311e+09 | 0.04 | True | 1.613540 | 1.379402 | 2.785293 | -158.947372 | NaN |

| 44 | tm_0.26 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T13:50:18.520000Z | 0.325642 | 0.0 | 105.80 | 1.343311e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 45 | tm_8.24 | 30.314258 | 40.711875 | 13.500 | 2012-07-26T13:51:58.200000Z | 0.536551 | 8.0 | 100.08 | 1.343311e+09 | 0.00 | True | 1.860965 | 1.810982 | 3.401975 | 139.211029 | NaN |

| 46 | tm_0.28 | 30.308398 | 40.718125 | 12.300 | 2012-07-26T13:53:31.360000Z | 0.685687 | 0.0 | 93.04 | 1.343311e+09 | 0.04 | True | 1.603626 | 1.453808 | 2.882052 | -142.896729 | NaN |

| 47 | tm_8.26 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T13:54:54.160000Z | 0.409128 | 8.0 | 82.60 | 1.343311e+09 | 82.04 | True | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| 48 | tm_8.27 | 30.343555 | 40.715625 | 8.900 | 2012-07-26T13:56:54.280000Z | 1.000000 | 8.0 | 120.04 | 1.343311e+09 | 119.48 | True | 1.992835 | 1.122762 | 3.628948 | 95.300972 | NaN |

| 49 | tm_2.12 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T14:38:50.520000Z | 0.244041 | 2.0 | 2718.96 | 1.343314e+09 | 0.08 | True | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| 50 | tm_3.12 | 30.345996 | 40.720312 | 9.350 | 2012-07-26T14:49:28.440000Z | 0.233022 | 3.0 | 638.00 | 1.343314e+09 | 637.92 | True | 1.817742 | 0.925883 | 2.592613 | 94.536827 | NaN |

| 51 | tm_9.0 | 30.328174 | 40.600156 | -1.975 | 2012-07-26T15:06:20.120000Z | 1.000000 | 9.0 | NaN | 1.343315e+09 | 1011.64 | True | 2.859252 | 1.706538 | 2.957412 | 145.703659 | NaN |

| 52 | tm_5.3 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T16:26:52.520000Z | 0.252150 | 5.0 | 22768.84 | 1.343320e+09 | 4832.44 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| 53 | tm_5.4 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T16:33:57.640000Z | 0.170999 | 5.0 | 425.12 | 1.343320e+09 | 425.12 | True | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| 54 | tm_10.0 | 30.310352 | 40.654375 | -0.500 | 2012-07-26T17:28:20.200000Z | 1.000000 | 10.0 | NaN | 1.343324e+09 | 3264.00 | True | 4.602456 | 1.586548 | 5.752606 | 63.806900 | NaN |

In this example, we see that two entries of the catalog end up pointing to the same event (see below):

[30]:

combined_catalog[

(combined_catalog["origin_time"] >= pd.Timestamp("2012-07-26T01:15:00"))

&

(combined_catalog["origin_time"] <= pd.Timestamp("2012-07-26T01:15:30"))

]

[30]:

| event_id | longitude | latitude | depth | origin_time | cc | tid | return_time | origin_time_sec | interevent_time_sec | unique_event | hmax_unc | hmin_unc | vmax_unc | az_hmax_unc | event_id.1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 9 | tm_0.9 | 30.340137 | 40.717813 | 9.15 | 2012-07-26T01:15:14.000000Z | 0.332758 | 0.0 | 7.56 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| 8 | tm_0.8 | 30.340137 | 40.717813 | 9.15 | 2012-07-26T01:15:14.040000Z | 0.581885 | 0.0 | 161.88 | 1.343265e+09 | 0.04 | True | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

versus (before relocating each event individually)

[31]:

initial_catalog[

(initial_catalog["origin_time"] >= pd.Timestamp("2012-07-26T01:15:00"))

&

(initial_catalog["origin_time"] <= pd.Timestamp("2012-07-26T01:15:30"))

]

[31]:

| longitude | latitude | depth | origin_time | cc | tid | return_time | origin_time_sec | interevent_time_sec | unique_event | |

|---|---|---|---|---|---|---|---|---|---|---|

| event_id | ||||||||||

| 0.8 | 30.340137 | 40.717813 | 9.15 | 2012-07-26 01:15:14.800 | 0.581885 | 0 | 161.88 | 1.343265e+09 | 0.04 | True |

| 0.9 | 30.340137 | 40.717813 | 9.15 | 2012-07-26 01:15:22.360 | 0.332758 | 0 | 7.56 | 1.343265e+09 | 0.04 | True |

This is because, despite using the use_apriori_picks=True, the automatic picking ended up picking the event before tm_0.9. So, before I release a fix to this issue, we need to clean up these redundant catalog entries! And keep in mind that the initial template matching catalog might have better temporal resolution.

Last clean up

[24]:

final_cat = combined_catalog.copy()

final_cat["origin_time_sec"] = (

final_cat["origin_time"].values.astype("datetime64[ms]").astype("float64") / 1000.

)

num_removed = -1

iteration = 0

while num_removed != 0:

print(f"------ Iteration {iteration} --------")

interevent_time_sec = (

final_cat["origin_time_sec"].values[1:] - final_cat["origin_time_sec"].values[:-1]

)

interevent_time_sec = np.hstack( (1000., interevent_time_sec) )

remove = np.zeros(len(final_cat), dtype=bool)

for i in np.where(interevent_time_sec < DT_CRITERION_SEC)[0]:

dist = BPMF.utils.two_point_epicentral_distance(

final_cat.iloc[i-1].longitude,

final_cat.iloc[i-1].latitude,

final_cat.iloc[i].longitude,

final_cat.iloc[i].latitude,

)

if dist < DISTANCE_CRITERION_KM:

if final_cat.iloc[i-1].hmax_unc < final_cat.iloc[i].hmax_unc:

remove[i] = True

else:

remove[i-1] = True

num_removed = np.sum(remove)

print(f"Removed {num_removed} events. Examples:")

if num_removed > 1:

removed_events = np.where(remove)[0]

print(final_cat[["origin_time", "event_id", "longitude", "latitude"]].iloc[removed_events[0]])

if interevent_time_sec[removed_events[0]] < DT_CRITERION_SEC:

print(final_cat[["origin_time", "event_id", "longitude",

"latitude"]].iloc[removed_events[0] - 1])

else:

print(final_cat[["origin_time", "event_id", "longitude",

"latitude"]].iloc[removed_events[0] + 1])

final_cat = final_cat[~remove]

iteration += 1

------ Iteration 0 --------

Removed 2 events. Examples:

origin_time 2012-07-26T01:15:14.000000Z

event_id tm_0.9

longitude 30.340137

latitude 40.717813

Name: 9, dtype: object

origin_time 2012-07-26T01:15:14.040000Z

event_id tm_0.8

longitude 30.340137

latitude 40.717813

Name: 8, dtype: object

------ Iteration 1 --------

Removed 0 events. Examples:

[25]:

final_cat.set_index("event_id", drop=False, inplace=True)

final_cat.drop(columns=["interevent_time_sec", "unique_event", "origin_time_sec"], inplace=True)

final_cat.sort_values("origin_time", ascending=True, inplace=True)

final_cat

[25]:

| event_id | longitude | latitude | depth | origin_time | cc | tid | return_time | hmax_unc | hmin_unc | vmax_unc | az_hmax_unc | event_id.1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| event_id | |||||||||||||

| tm_8.0 | tm_8.0 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T00:58:11.000000Z | 0.342058 | 8.0 | NaN | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| tm_8.1 | tm_8.1 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T00:58:16.560000Z | 0.267058 | 8.0 | 5.56 | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| tm_0.2 | tm_0.2 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:02:53.320000Z | 0.265464 | 0.0 | 276.76 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_0.3 | tm_0.3 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:03:46.960000Z | 0.405439 | 0.0 | 53.64 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_8.3 | tm_8.3 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T01:09:25.880000Z | 0.289025 | 8.0 | 338.96 | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| tm_0.5 | tm_0.5 | 30.319141 | 40.726250 | 12.600 | 2012-07-26T01:10:21.760000Z | 0.717180 | 0.0 | 56.28 | 1.715140 | 1.493157 | 2.479309 | 146.441208 | NaN |

| tm_8.5 | tm_8.5 | 30.318164 | 40.699375 | 12.300 | 2012-07-26T01:10:43.920000Z | 0.589896 | 8.0 | 21.76 | 3.210000 | 1.431238 | 3.338822 | 164.680389 | NaN |

| tm_0.7 | tm_0.7 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:12:32.920000Z | 0.315861 | 0.0 | 108.92 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_0.8 | tm_0.8 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:15:14.040000Z | 0.581885 | 0.0 | 161.88 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_0.10 | tm_0.10 | 30.345996 | 40.716563 | 9.050 | 2012-07-26T01:15:54.200000Z | 0.286241 | 0.0 | 12.76 | 1.196124 | 0.865575 | 2.519593 | 77.244270 | NaN |

| tm_0.12 | tm_0.12 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:16:30.040000Z | 0.332573 | 0.0 | 35.84 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_0.13 | tm_0.13 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:18:32.920000Z | 0.260217 | 0.0 | 122.88 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_1.8 | tm_1.8 | 30.283008 | 40.669375 | 10.700 | 2012-07-26T01:35:15.200000Z | 0.262023 | 1.0 | 1124.60 | 5.237969 | 2.169321 | 3.042312 | 46.649498 | NaN |

| tm_0.14 | tm_0.14 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T01:39:55.520000Z | 0.230063 | 0.0 | 1280.80 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_4.12 | tm_4.12 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T01:52:28.040000Z | 0.206217 | 4.0 | 2034.96 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_0.15 | tm_0.15 | 30.358203 | 40.691250 | 11.800 | 2012-07-26T01:52:36.480000Z | 0.394923 | 0.0 | 762.76 | 6.283857 | 2.552138 | 5.081644 | 110.047188 | NaN |

| tm_8.13 | tm_8.13 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T02:22:49.840000Z | 0.235595 | 8.0 | 1813.40 | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| tm_0.17 | tm_0.17 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T02:24:36.560000Z | 0.469003 | 0.0 | 105.12 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_1.11 | tm_1.11 | 30.283008 | 40.669375 | 10.700 | 2012-07-26T02:35:01.200000Z | 1.000000 | 1.0 | 626.12 | 5.237969 | 2.169321 | 3.042312 | 46.649498 | NaN |

| tm_0.19 | tm_0.19 | 30.314258 | 40.713125 | 12.100 | 2012-07-26T03:00:38.720000Z | 0.515621 | 0.0 | 1537.84 | 1.815854 | 1.211303 | 3.207473 | 88.635719 | NaN |

| tm_4.18 | tm_4.18 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T03:08:33.640000Z | 0.411452 | 4.0 | 474.56 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.19 | tm_4.19 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T03:10:55.160000Z | 0.325523 | 4.0 | 141.52 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.20 | tm_4.20 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T04:30:33.640000Z | 0.190512 | 4.0 | 4778.48 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_2.4 | tm_2.4 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:43:38.320000Z | 1.000000 | 2.0 | 5704.84 | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| tm_8.19 | tm_8.19 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T04:45:03.880000Z | 0.274806 | 8.0 | 5648.88 | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| tm_2.5 | tm_2.5 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:46:49.240000Z | 0.324501 | 2.0 | 190.92 | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| tm_3.5 | tm_3.5 | 30.345996 | 40.720312 | 9.350 | 2012-07-26T04:48:38.480000Z | 1.000000 | 3.0 | 109.32 | 1.817742 | 0.925883 | 2.592613 | 94.536827 | NaN |

| tm_2.7 | tm_2.7 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T04:51:06.600000Z | 0.347202 | 2.0 | 148.04 | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| tm_4.23 | tm_4.23 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:22:04.560000Z | 0.284128 | 4.0 | 2005.80 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.24 | tm_4.24 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:45:46.120000Z | 0.183825 | 4.0 | 1421.56 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.25 | tm_4.25 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:46:59.360000Z | 0.268304 | 4.0 | 73.24 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.26 | tm_4.26 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T05:57:16.560000Z | 0.216216 | 4.0 | 617.20 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.27 | tm_4.27 | 30.302051 | 40.700313 | -1.950 | 2012-07-26T08:08:25.600000Z | 1.000000 | 4.0 | 7869.20 | 3.270674 | 1.723631 | 8.185627 | 82.300278 | NaN |

| tm_5.0 | tm_5.0 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T09:21:39.360000Z | 0.206347 | 5.0 | NaN | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| tm_5.1 | tm_5.1 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T09:28:48.320000Z | 0.174713 | 5.0 | 428.96 | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| tm_5.2 | tm_5.2 | 30.341113 | 40.717188 | 8.850 | 2012-07-26T10:07:23.680000Z | 1.000000 | 5.0 | 2315.36 | 1.659967 | 0.930441 | 2.463023 | 89.344856 | NaN |

| tm_4.28 | tm_4.28 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T10:16:45.880000Z | 0.358521 | 4.0 | 7700.12 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.29 | tm_4.29 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T10:53:46.680000Z | 0.239580 | 4.0 | 2220.80 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_4.30 | tm_4.30 | 30.322559 | 40.720937 | 8.850 | 2012-07-26T11:02:23.680000Z | 0.350599 | 4.0 | 517.00 | 1.209022 | 0.942840 | 2.648956 | 127.960640 | NaN |

| tm_6.0 | tm_6.0 | 30.287402 | 40.619687 | -0.750 | 2012-07-26T11:55:35.560000Z | 1.000000 | 6.0 | NaN | 4.696620 | 1.133235 | 4.342819 | 144.824448 | NaN |

| tm_7.0 | tm_7.0 | 30.440234 | 40.798750 | 28.600 | 2012-07-26T13:35:24.400000Z | 1.000000 | 7.0 | NaN | 4.682024 | 1.871417 | 20.819151 | 102.730064 | NaN |

| tm_0.25 | tm_0.25 | 30.312305 | 40.719375 | 12.300 | 2012-07-26T13:48:32.440000Z | 0.643463 | 0.0 | 9969.16 | 1.613540 | 1.379402 | 2.785293 | -158.947372 | NaN |

| tm_0.26 | tm_0.26 | 30.340137 | 40.717813 | 9.150 | 2012-07-26T13:50:18.520000Z | 0.325642 | 0.0 | 105.80 | 1.766185 | 0.957067 | 2.177404 | 93.146858 | NaN |

| tm_8.24 | tm_8.24 | 30.314258 | 40.711875 | 13.500 | 2012-07-26T13:51:58.200000Z | 0.536551 | 8.0 | 100.08 | 1.860965 | 1.810982 | 3.401975 | 139.211029 | NaN |

| tm_0.28 | tm_0.28 | 30.308398 | 40.718125 | 12.300 | 2012-07-26T13:53:31.360000Z | 0.685687 | 0.0 | 93.04 | 1.603626 | 1.453808 | 2.882052 | -142.896729 | NaN |

| tm_8.26 | tm_8.26 | 30.345996 | 40.717188 | 9.150 | 2012-07-26T13:54:54.160000Z | 0.409128 | 8.0 | 82.60 | 2.007848 | 0.880852 | 3.057234 | 86.483894 | NaN |

| tm_8.27 | tm_8.27 | 30.343555 | 40.715625 | 8.900 | 2012-07-26T13:56:54.280000Z | 1.000000 | 8.0 | 120.04 | 1.992835 | 1.122762 | 3.628948 | 95.300972 | NaN |

| tm_2.12 | tm_2.12 | 30.323047 | 40.723750 | 10.200 | 2012-07-26T14:38:50.520000Z | 0.244041 | 2.0 | 2718.96 | 5.539433 | 2.653419 | 4.183529 | 131.163864 | NaN |

| tm_3.12 | tm_3.12 | 30.345996 | 40.720312 | 9.350 | 2012-07-26T14:49:28.440000Z | 0.233022 | 3.0 | 638.00 | 1.817742 | 0.925883 | 2.592613 | 94.536827 | NaN |

| tm_9.0 | tm_9.0 | 30.328174 | 40.600156 | -1.975 | 2012-07-26T15:06:20.120000Z | 1.000000 | 9.0 | NaN | 2.859252 | 1.706538 | 2.957412 | 145.703659 | NaN |

| tm_5.3 | tm_5.3 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T16:26:52.520000Z | 0.252150 | 5.0 | 22768.84 | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| tm_5.4 | tm_5.4 | 30.340137 | 40.717188 | 8.950 | 2012-07-26T16:33:57.640000Z | 0.170999 | 5.0 | 425.12 | 1.501076 | 0.950018 | 2.387271 | 91.866226 | NaN |

| tm_10.0 | tm_10.0 | 30.310352 | 40.654375 | -0.500 | 2012-07-26T17:28:20.200000Z | 1.000000 | 10.0 | NaN | 4.602456 | 1.586548 | 5.752606 | 63.806900 | NaN |

Save final catalog

[26]:

# csv format

final_cat.to_csv(

os.path.join(BPMF.cfg.OUTPUT_PATH, OUTPUT_CSV_FILENAME)

)

[27]:

# hdf5 / BPMF format

for i in range(len(final_cat)):

evid = final_cat.iloc[i]["event_id"]

if evid[:2] == "tm":

events[evid.split("_")[1]].write(

OUTPUT_DB_FILENAME,

db_path=BPMF.cfg.OUTPUT_PATH,

gid=evid

)

elif evid[:2] == "bp":

with h5.File(os.path.join(BPMF.cfg.OUTPUT_PATH, OUTPUT_DB_FILENAME), mode="a") as fout:

with h5.File(os.path.join(BPMF.cfg.OUTPUT_PATH, BACKPROJ_DB_FILENAME), mode="r") as fin:

# copy the corresponding hdf5 group into fout

fin.copy(fin[evid], fout)

[28]:

# The final cat!

BPMF.utils.donefun()

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⠀⢀⡤⣤⣀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⣀⡀⠀⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⢀⡏⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⣀⠴⠋⠉⠉⡆⠀⠀⠀⠀⠀

⠀⠀⠀⠀⠀⢸⠀⠀⠀⠀⠀⠈⠉⠉⠙⠓⠚⠁⠀⠀⠀⠀⣿⠀⠀⠀⠀⠀

⠀⠀⠀⠀⢀⠞⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠹⣄⠀⠀⠀⠀

⠀⠀⠀⠀⡞⠀⠀⠀⠀⠀⠶⠀⠀⠀⠀⠀⠀⠦⠀⠀⠀⠀⠀⠸⡆⠀⠀⠀

⢠⣤⣶⣾⣧⣤⣤⣀⡀⠀⠀⠀⠀⠈⠀⠀⠀⢀⡤⠴⠶⠤⢤⡀⣧⣀⣀⠀

⠻⠶⣾⠁⠀⠀⠀⠀⠙⣆⠀⠀⠀⠀⠀⠀⣰⠋⠀⠀⠀⠀⠀⢹⣿⣭⣽⠇

⠀⠀⠙⠤⠴⢤⡤⠤⠤⠋⠉⠉⠉⠉⠉⠉⠉⠳⠖⠦⠤⠶⠦⠞⠁⠀⠀⠀

⠀ALL DONE!⠀⠀⠀