Relocation

In the previous notebook, 5_backprojection, we built a database of events detected with the backprojection technique. Here, we will improve the location of each of these events by using a more elaborated location technique.

[31]:

import os

# choose the number of threads you want to limit the computation to

n_CPUs = 12

os.environ["OMP_NUM_THREADS"] = str(n_CPUs)

import copy

import BPMF

import h5py as h5

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import string

import seisbench.models as sbm

from BPMF.data_reader_examples import data_reader_mseed

from time import time as give_time

# %config InlineBackend.figure_formats = ["svg"]

plt.rcParams["svg.fonttype"] = "none"

[32]:

INPUT_DB_FILENAME = "raw_bp.h5"

OUTPUT_DB_FILENAME = "reloc_bp.h5"

OUTPUT_CSV_FILENAME = "backprojection_catalog.csv"

NETWORK_FILENAME = "network.csv"

[33]:

net = BPMF.dataset.Network(NETWORK_FILENAME)

net.read()

Load the metadata of the events previously detected with backprojection

In the previous notebook, we used BPMF.dataset.Event.write to save the metadata of each detected event to a hdf5 database. Here, we will use BPMF.dataset.Event.read_from_file to read the metadata and build a list of BPMF.dataset.Event instances.

[34]:

backprojection_events = []

with h5.File(os.path.join(BPMF.cfg.OUTPUT_PATH, INPUT_DB_FILENAME), mode="r") as fin:

print(f"Each event's metadata is stored at a 'subfolder'. The subfolders are: {fin.keys()}")

print("In hdf5, 'subfolders' are called 'groups'.")

for group_id in fin.keys():

backprojection_events.append(

BPMF.dataset.Event.read_from_file(

INPUT_DB_FILENAME,

db_path=BPMF.cfg.OUTPUT_PATH,

gid=group_id,

data_reader=data_reader_mseed

)

)

Each event's metadata is stored at a 'subfolder'. The subfolders are: <KeysViewHDF5 ['20120726_011024.280000', '20120726_011555.880000', '20120726_015757.600000', '20120726_023502.160000', '20120726_030040.880000', '20120726_044340.160000', '20120726_044840.160000', '20120726_080827.360000', '20120726_100725.640000', '20120726_115536.760000', '20120726_133527.760000', '20120726_134834.840000', '20120726_135200.480000', '20120726_135333.600000', '20120726_135656.000000', '20120726_150621.480000', '20120726_172821.680000']>

In hdf5, 'subfolders' are called 'groups'.

[35]:

initial_catalog = pd.DataFrame(columns=["origin_time", "longitude", "latitude", "depth"])

for i, ev in enumerate(backprojection_events):

initial_catalog.loc[i, "origin_time"] = ev.origin_time

initial_catalog.loc[i, "longitude"] = ev.longitude

initial_catalog.loc[i, "latitude"] = ev.latitude

initial_catalog.loc[i, "depth"] = ev.depth

initial_catalog["origin_time"] = initial_catalog["origin_time"].values.astype("datetime64[ms]")

initial_catalog["longitude"] = initial_catalog["longitude"].values.astype("float32")

initial_catalog["latitude"] = initial_catalog["latitude"].values.astype("float32")

initial_catalog["depth"] = initial_catalog["depth"].values.astype("float32")

initial_catalog

[35]:

| origin_time | longitude | latitude | depth | |

|---|---|---|---|---|

| 0 | 2012-07-26 01:10:24.280 | 30.309999 | 40.720001 | 12.0 |

| 1 | 2012-07-26 01:15:55.880 | 30.379999 | 40.709999 | 8.0 |

| 2 | 2012-07-26 01:57:57.600 | 30.209999 | 40.599998 | -1.5 |

| 3 | 2012-07-26 02:35:02.160 | 30.330000 | 40.700001 | -1.5 |

| 4 | 2012-07-26 03:00:40.880 | 30.350000 | 40.709999 | 9.0 |

| 5 | 2012-07-26 04:43:40.160 | 30.350000 | 40.720001 | 8.5 |

| 6 | 2012-07-26 04:48:40.160 | 30.389999 | 40.709999 | 8.0 |

| 7 | 2012-07-26 08:08:27.360 | 30.379999 | 40.709999 | 8.0 |

| 8 | 2012-07-26 10:07:25.640 | 30.330000 | 40.720001 | 9.5 |

| 9 | 2012-07-26 11:55:36.760 | 30.270000 | 40.599998 | 3.0 |

| 10 | 2012-07-26 13:35:27.760 | 30.450001 | 40.799999 | -2.0 |

| 11 | 2012-07-26 13:48:34.840 | 30.309999 | 40.720001 | 12.0 |

| 12 | 2012-07-26 13:52:00.480 | 30.360001 | 40.709999 | 7.5 |

| 13 | 2012-07-26 13:53:33.600 | 30.330000 | 40.720001 | 12.0 |

| 14 | 2012-07-26 13:56:56.000 | 30.330000 | 40.730000 | 10.0 |

| 15 | 2012-07-26 15:06:21.480 | 30.330000 | 40.599998 | -2.0 |

| 16 | 2012-07-26 17:28:21.680 | 30.430000 | 40.720001 | 10.0 |

Build a BPMF.dataset.Catalog instance from initial_catalog in order to benefit from the BPMF.dataset.Catalog plotting methods.

[36]:

initial_catalog = BPMF.dataset.Catalog.read_from_dataframe(

initial_catalog,

)

[37]:

initial_catalog.catalog

[37]:

| longitude | latitude | depth | origin_time | |

|---|---|---|---|---|

| 0 | 30.309999 | 40.720001 | 12.0 | 2012-07-26 01:10:24.280 |

| 1 | 30.379999 | 40.709999 | 8.0 | 2012-07-26 01:15:55.880 |

| 2 | 30.209999 | 40.599998 | -1.5 | 2012-07-26 01:57:57.600 |

| 3 | 30.330000 | 40.700001 | -1.5 | 2012-07-26 02:35:02.160 |

| 4 | 30.350000 | 40.709999 | 9.0 | 2012-07-26 03:00:40.880 |

| 5 | 30.350000 | 40.720001 | 8.5 | 2012-07-26 04:43:40.160 |

| 6 | 30.389999 | 40.709999 | 8.0 | 2012-07-26 04:48:40.160 |

| 7 | 30.379999 | 40.709999 | 8.0 | 2012-07-26 08:08:27.360 |

| 8 | 30.330000 | 40.720001 | 9.5 | 2012-07-26 10:07:25.640 |

| 9 | 30.270000 | 40.599998 | 3.0 | 2012-07-26 11:55:36.760 |

| 10 | 30.450001 | 40.799999 | -2.0 | 2012-07-26 13:35:27.760 |

| 11 | 30.309999 | 40.720001 | 12.0 | 2012-07-26 13:48:34.840 |

| 12 | 30.360001 | 40.709999 | 7.5 | 2012-07-26 13:52:00.480 |

| 13 | 30.330000 | 40.720001 | 12.0 | 2012-07-26 13:53:33.600 |

| 14 | 30.330000 | 40.730000 | 10.0 | 2012-07-26 13:56:56.000 |

| 15 | 30.330000 | 40.599998 | -2.0 | 2012-07-26 15:06:21.480 |

| 16 | 30.430000 | 40.720001 | 10.0 | 2012-07-26 17:28:21.680 |

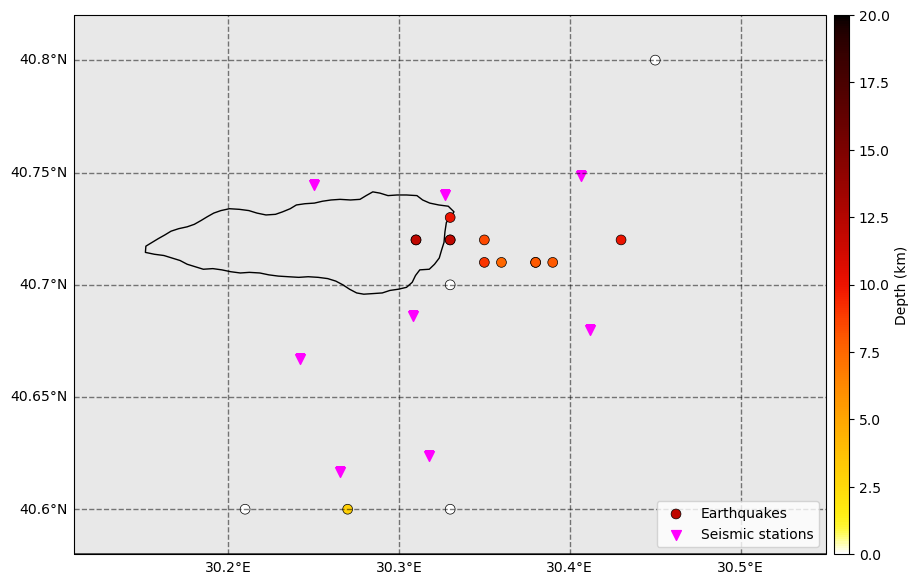

Let’s plot the locations that the backprojection method produced.

[38]:

fig = initial_catalog.plot_map(figsize=(10, 10), network=net, s=50, markersize_station=50, lat_margin=0.02)

ax = fig.get_axes()[0]

ax.set_facecolor("dimgrey")

ax.patch.set_alpha(0.15)

The backprojection method offers several advantages for locating earthquakes:

Locations come for free in addition to detecting events.

The “picking” of P and S waves does not involve any single-channel threshold.

Backprojection effectively solves the detection and phase association problems at the same time.

However, there is a number of cons:

The backprojection locations are just as good as the grid is. If a downsampled grid was used for computation efficiency, then the locations definitely need to be refined.

It is hard and computationally expensive to estimate uncertainties (as we will see later in this notebook).

Thus, in the following, we will explore two ways to refine the initial locations.

Relocation 1: PhaseNet picks and NonLinLoc

If you have installed PhaseNet and NonLinLoc, you can use BPMF.dataset.Event methods to easily relocate events with automatic picking and earthquake location. This is a two-step procedure. We first need to pick the P- and S-wave arrivals with PhaseNet before giving these data to NonLinLoc.

We will explore, step by step, what happens to the BPMF.dataset.Event instance when picking the phase arrivals and when relocating with NonLinLoc. First, we read short windows around the P- and S-wave arrivals, as determined by the backprojection locations.

Automated picking and relocation

[39]:

# event extraction parameters

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "P"}

# OFFSET_PHASE: dictionary defining the time offset taken before a given phase

# for example OFFSET_PHASE["P"] = 1.0 means that we extract the window

# 1 second before the predicted P arrival time

OFFSET_PHASE = {"P": 1.0, "S": 4.0}

# TIME_SHIFTED: boolean, if True, use moveouts to extract relevant windows

TIME_SHIFTED = True

# DATA_FOLDER: name of the folder where the waveforms we want to use for picking are stored

DATA_FOLDER = "preprocessed_2_12"

[40]:

t1 = give_time()

for i in range(len(backprojection_events)):

backprojection_events[i].read_waveforms(

BPMF.cfg.TEMPLATE_LEN_SEC,

phase_on_comp=PHASE_ON_COMP,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

)

t2 = give_time()

print(

f"{t2-t1:.2f}sec to read the waveforms for all {len(backprojection_events):d} detections."

)

2.54sec to read the waveforms for all 17 detections.

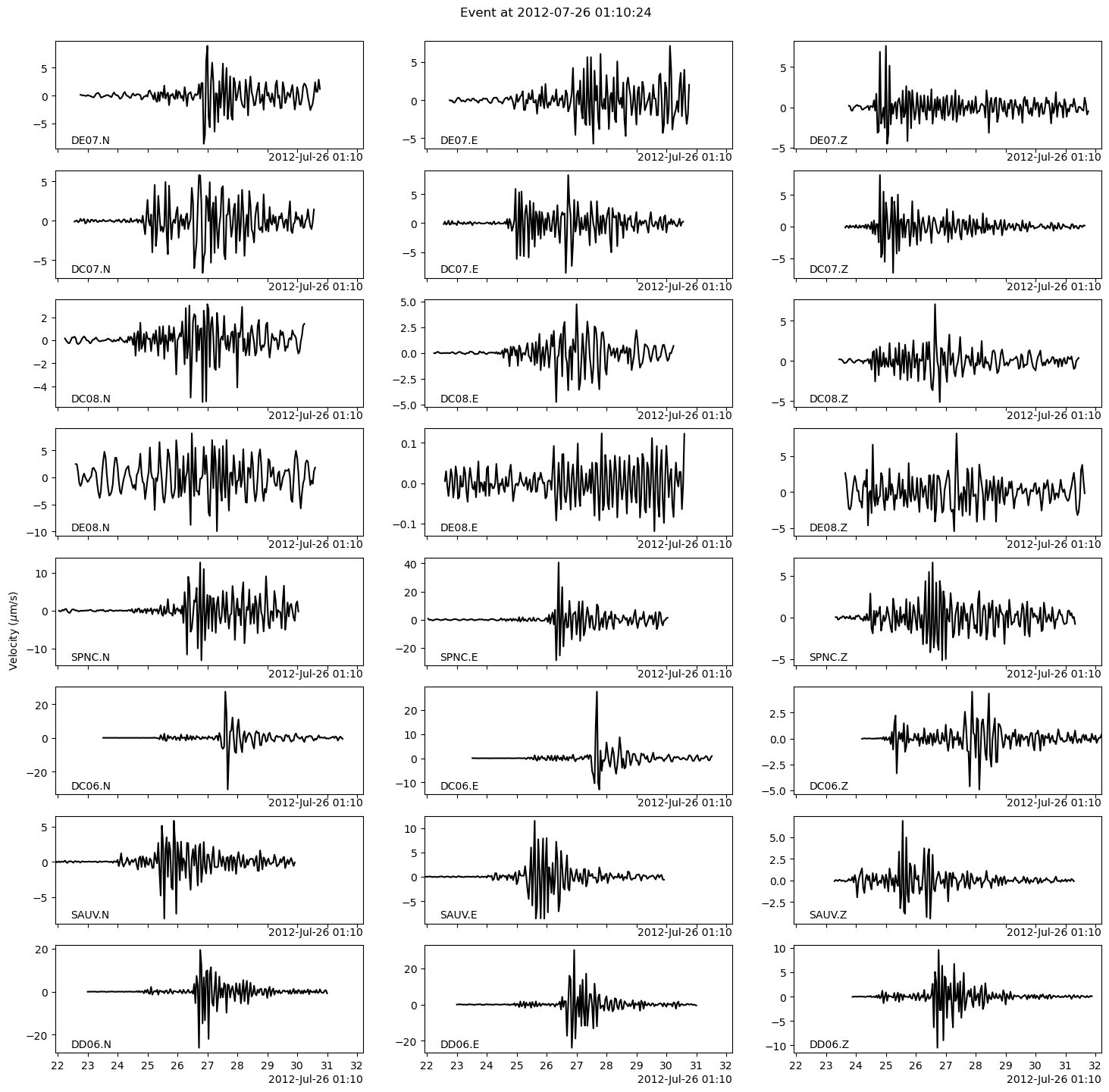

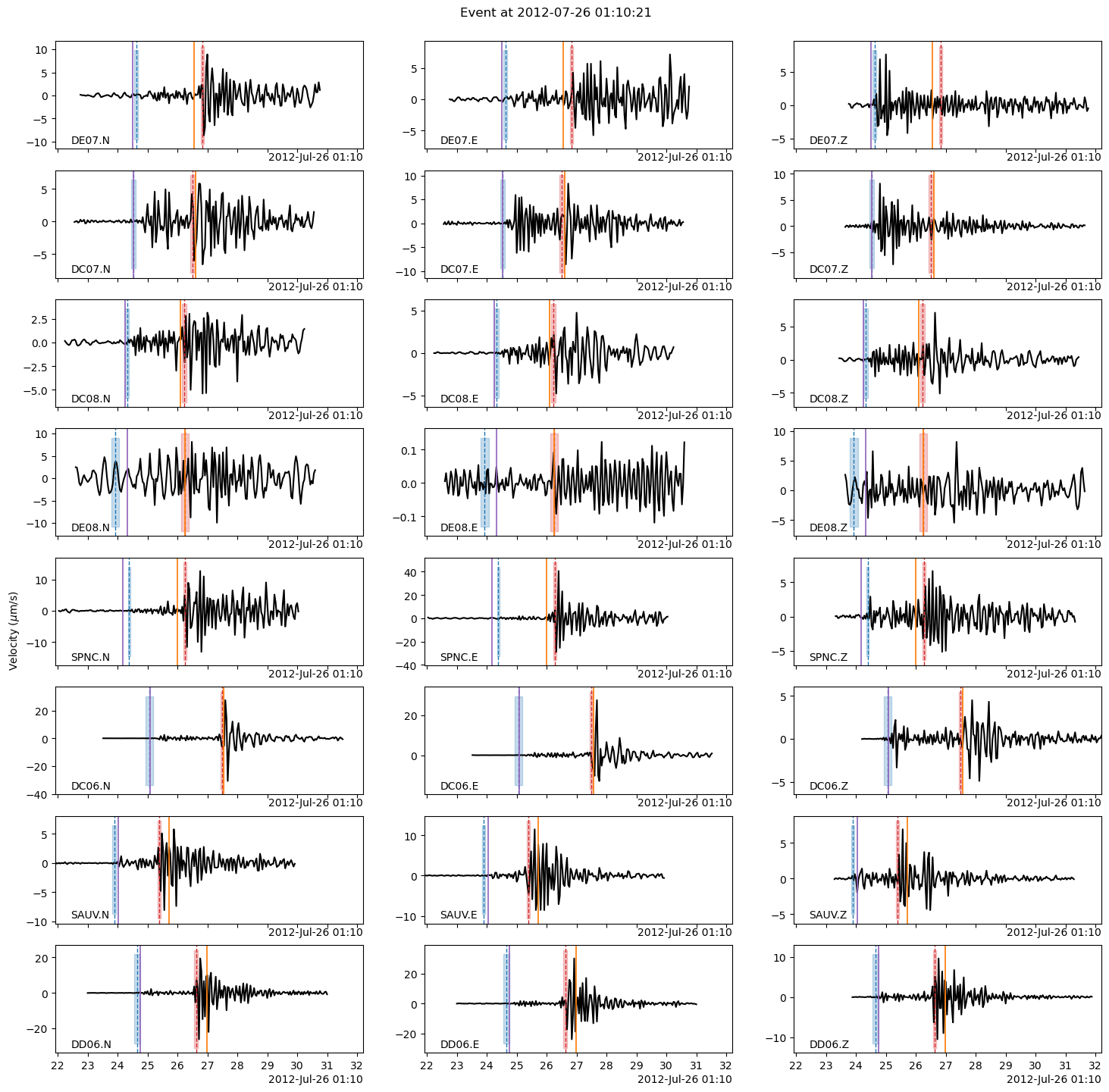

Let’s plot these short windows with BPMF.dataset.Event.plot.

[41]:

# play with EVENT_IDX to plot different events

EVENT_IDX = 0

fig = backprojection_events[EVENT_IDX].plot(figsize=(15, 15))

Now, we will use PhaseNet to pick the P- and S-wave arrivals. This is done by defining a wrapper function to plug PhaseNet into the BPMF workflow with the BPMF.dataset.Event.pick_PS_phases method.

[42]:

def ml_picker(x, chunk_size=3000, device="cpu"):

"""ML-based seismic phase picker using PhaseNet.

For maximum flexibility, all interfacing with phase pickers is done through

wrapper functions like this one. This function must behave like:

`y = f(x)`,

where `y` are the time series of P- and S-wave probabilities. This function must

include all preprocessing steps that may be required, like chunking and normalization.

Parameters

----------

x : np.ndarray

Seismograms, shape (n_stations, n_channels=3, n_times).

chunk_size : int, optional

Size of the seismograms, in samples, used in the training of the ML model.

Default is 3000 (30 seconds at 100 Hz).

device : str, optional

Device to use to run the ML model, either "cpu" or "gpu". Default is

Returns

-------

ps_proba_time_series : np.ndarray

Time series of P- and S-wave probabilities, shape (n_stations, n_phases, n_times).

The first channel, `ps_proba_time_series[:, 0, :]` is the P-wave probability,

and the second, `ps_proba_time_series[:, 1, :]`, is the S-wave probability.

"""

from torch import no_grad, from_numpy

_ml_detector = sbm.PhaseNet.from_pretrained("original")

_ml_detector.eval()

if device == "gpu":

_ml_detector.to("cuda")

x = BPMF.utils.normalize_batch(x, normalization_window_sample=chunk_size)

with no_grad():

x = from_numpy(x).float().to("cuda" if device == "gpu" else "cpu")

ps_proba_time_series = _ml_detector(x)

# remove channel 0, which is probability of noise

return ps_proba_time_series[:, 1:, :].cpu().detach().numpy()

[43]:

# PhaseNet picking parameters

# PhaseNet was trained for 100Hz data. Even if we saw that running PhaseNet on 25Hz data

# was good for backprojection, here, picking benefits from running PhaseNet on 100Hz data.

# Thus, we will upsample the waveforms before running PhaseNet.

PHASENET_SAMPLING_RATE_HZ = 100.

UPSAMPLING_BEFORE_PN_RELOCATION = int(PHASENET_SAMPLING_RATE_HZ/BPMF.cfg.SAMPLING_RATE_HZ)

DOWNSAMPLING_BEFORE_PN_RELOCATION = 1

# DURATION_SEC: the duration, in seconds, of the data stream starting at the detection time

# defined by Event.origin_time. This data stream is used for picking the P/S waves.

DURATION_SEC = 60.0

# THRESHOLD_P: probability of P-wave arrival above which we declare a pick. If several picks are

# declared during the DURATION_SEC data stream, we only keep the best one. We can

# afford using a low probability threshold since we already know with some confidence

# that an earthquake is in the data stream.

THRESHOLD_P = 0.10

# THRESHOLD_S: probability of S-wave arrival above which we declare a pick.

THRESHOLD_S = 0.10

# DATA_FOLDER: name of the folder where the waveforms we want to use for picking are stored

DATA_FOLDER = "preprocessed_2_12"

# COMPONENT_ALIASES: A dictionary that defines the possible channel names to search for

# for example, the seismometer might not be oriented and the horizontal channels

# might be named 1 and 2, in which case we arbitrarily decide to take 1 as the "N" channel

# and 2 as the "E" channel. This doesn't matter for picking P- and S-wave arrival times.

COMPONENT_ALIASES = {"N": ["N", "1"], "E": ["E", "2"], "Z": ["Z"]}

# USE_APRIORI_PICKS: boolean. This option is IMPORTANT when running BPMF in HIGH SEISMICITY CONTEXTS, like

# during the aftershock sequence of a large earthquake. If there are many events happening

# close to each other in time, we need to guide PhaseNet to pick the right set of picks.

# For that, we use the predicted P- and S-wave times from backprojection to add extra weight to

# the picks closer to those times and make it more likely to identify them as the "best" picks.

# WARNING: If there are truly many events, even this trick might fail. It's because "phase association"

# is an intrinsically hard problem in this case, and the picking might be hard to do automatically.

USE_APRIORI_PICKS = True

[44]:

for i in range(len(backprojection_events)):

print(f"Picking P and S wave arrivals on detection {i:d}")

# make sure the event's station list has all the stations at which

# we eventually want travel times after NLLoc's location

backprojection_events[i].stations = net.stations.astype("U")

# the following line should only be used in a similar context as here

# it uses the moveouts from the backprojection location to define the

# P/S arrival times that we use a "prior information"

backprojection_events[i].set_arrival_times_from_moveouts(verbose=0)

backprojection_events[i].pick_PS_phases(

ml_picker,

DURATION_SEC,

threshold_P=THRESHOLD_P,

threshold_S=THRESHOLD_S,

component_aliases=COMPONENT_ALIASES,

data_folder=DATA_FOLDER,

upsampling=UPSAMPLING_BEFORE_PN_RELOCATION,

downsampling=DOWNSAMPLING_BEFORE_PN_RELOCATION,

use_apriori_picks=USE_APRIORI_PICKS,

keep_probability_time_series=True,

)

Picking P and S wave arrivals on detection 0

Picking P and S wave arrivals on detection 1

Picking P and S wave arrivals on detection 2

Picking P and S wave arrivals on detection 3

Picking P and S wave arrivals on detection 4

Picking P and S wave arrivals on detection 5

Picking P and S wave arrivals on detection 6

Picking P and S wave arrivals on detection 7

Picking P and S wave arrivals on detection 8

Picking P and S wave arrivals on detection 9

Picking P and S wave arrivals on detection 10

Picking P and S wave arrivals on detection 11

Picking P and S wave arrivals on detection 12

Picking P and S wave arrivals on detection 13

Picking P and S wave arrivals on detection 14

Picking P and S wave arrivals on detection 15

Picking P and S wave arrivals on detection 16

We are done with the automatic picking! Every instance of BPMF.dataset.Event should now have a new attribute picks.

[45]:

EVENT_IDX = 0

backprojection_events[EVENT_IDX].picks

[45]:

| P_probas | P_picks_sec | P_unc_sec | S_probas | S_picks_sec | S_unc_sec | P_abs_picks | S_abs_picks | |

|---|---|---|---|---|---|---|---|---|

| stations | ||||||||

| DE07 | 0.750513 | 20.343641 | 0.121599 | 0.842435 | 22.558384 | 0.106735 | 2012-07-26T01:10:24.623641Z | 2012-07-26T01:10:26.838384Z |

| DC07 | 0.641994 | 20.245676 | 0.156870 | 0.613393 | 22.223633 | 0.136231 | 2012-07-26T01:10:24.525676Z | 2012-07-26T01:10:26.503633Z |

| DC08 | 0.676526 | 20.038641 | 0.141339 | 0.617997 | 21.945808 | 0.137248 | 2012-07-26T01:10:24.318641Z | 2012-07-26T01:10:26.225808Z |

| DE08 | 0.142298 | 19.640827 | 0.275764 | 0.294153 | 21.971857 | 0.254681 | 2012-07-26T01:10:23.920827Z | 2012-07-26T01:10:26.251857Z |

| SPNC | 0.918656 | 20.107607 | 0.094761 | 0.852687 | 21.982595 | 0.101620 | 2012-07-26T01:10:24.387607Z | 2012-07-26T01:10:26.262595Z |

| DC06 | 0.575691 | 20.782576 | 0.250012 | 0.845824 | 23.215721 | 0.106698 | 2012-07-26T01:10:25.062576Z | 2012-07-26T01:10:27.495721Z |

| SAUV | 0.770925 | 19.604368 | 0.106771 | 0.845173 | 21.107708 | 0.105764 | 2012-07-26T01:10:23.884368Z | 2012-07-26T01:10:25.387708Z |

| DD06 | 0.521397 | 20.373207 | 0.209006 | 0.779116 | 22.342863 | 0.113789 | 2012-07-26T01:10:24.653207Z | 2012-07-26T01:10:26.622863Z |

These picks are automatically read when calling BPMF.dataset.Event.plot. Before plotting the waveforms with the newly obtained picks, let’s re-read the waveforms in the same format as before for the sake of easier visualization.

[46]:

t1 = give_time()

for i in range(len(backprojection_events)):

backprojection_events[i].read_waveforms(

BPMF.cfg.TEMPLATE_LEN_SEC,

phase_on_comp=PHASE_ON_COMP,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

)

t2 = give_time()

print(

f"{t2-t1:.2f}sec to read the waveforms for all {len(backprojection_events):d} detections."

)

2.70sec to read the waveforms for all 17 detections.

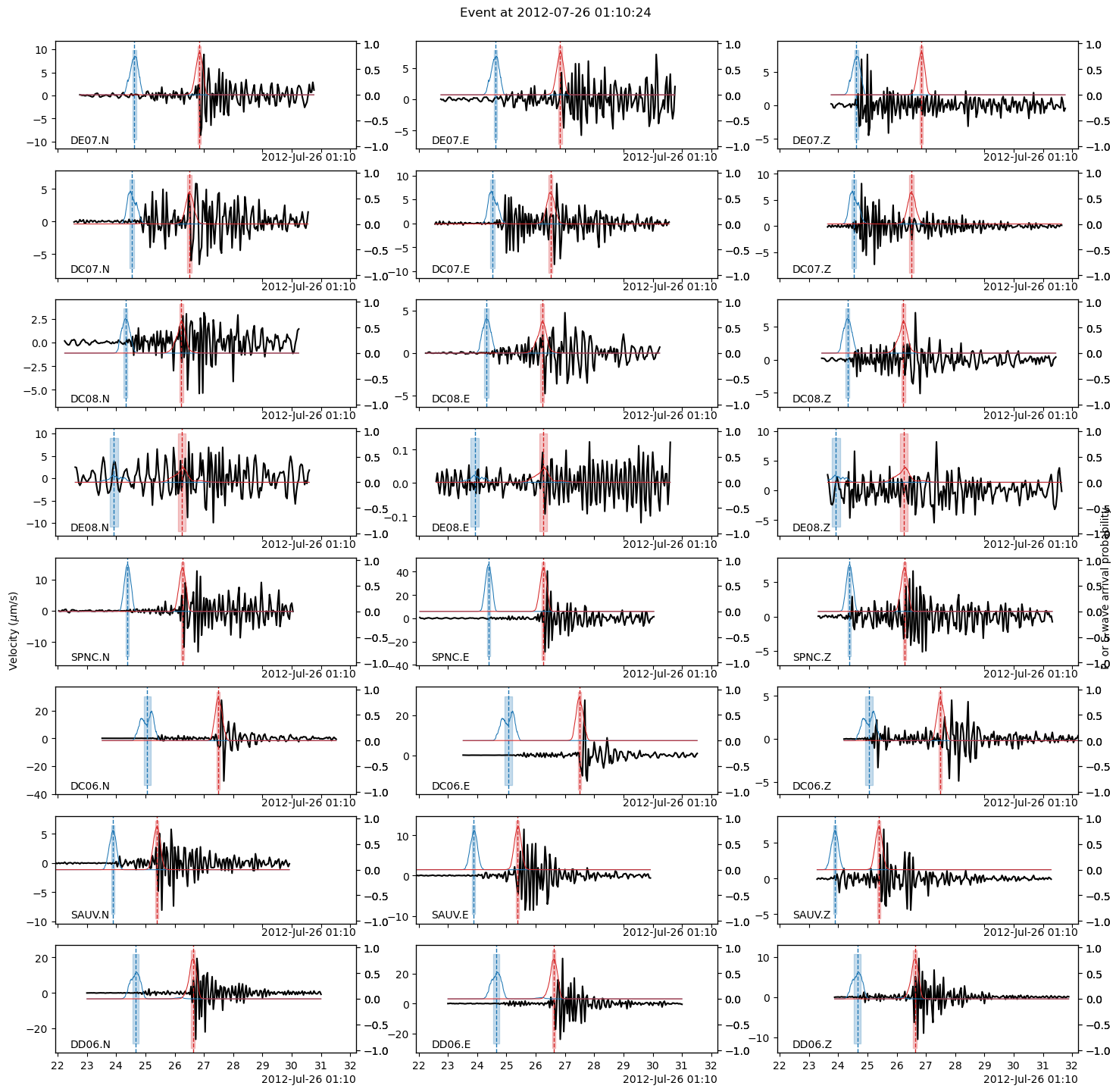

The following plot shows:

the P-/S-wave arrival continuous probabilities (blue=P-wave, red=S-wave) computed by PhaseNet,

the PhaseNet picks with dashed vertical lines (blue=P-wave, red=S-wave),

the arrival times predicted by the backprojection location with solid vertical lines (purple=P-wave, orange=S-wave).

See how, in this tutorial, the backprojection locations already do a good job at predicting the P- and S-wave times… given the limitations of the 1D velocity model.

Note: Try to run the phase picking again with USE_APRIORI_PICKS=False and see how the plot changes.

[47]:

# play with EVENT_IDX to plot different events

EVENT_IDX = 0

fig = backprojection_events[EVENT_IDX].plot(

figsize=(15, 15), plot_picks=True, plot_predicted_arrivals=False, plot_probabilities=True

)

We now are all set for using our favorite location software using PhaseNet’s picks. We can use NLLoc with one of BPMF.dataset.Event methods. See http://alomax.free.fr/nlloc/ for more info.

[48]:

# location parameters

LOCATION_ROUTINE = "NLLoc"

# NLLOC_METHOD: string that defines what loss function is used by NLLoc, see http://alomax.free.fr/nlloc/ for more info.

# Using some flavor of 'EDT' is important to obtain robust locations that are not sensitive to pick outliers.

NLLOC_METHOD = "EDT_OT_WT_ML"

# MINIMUM_NUM_STATIONS_W_PICKS: minimum number of stations with picks to even try relocation.

MINIMUM_NUM_STATIONS_W_PICKS = 3

[49]:

for i, ev in enumerate(backprojection_events):

# before relocating, let's store the old location for book-keeping

ev.set_aux_data(

{"backprojection_longitude": ev.longitude,

"backprojection_latitude": ev.latitude,

"backprojection_depth": ev.depth}

)

if len(ev.picks.dropna(how="any")) >= MINIMUM_NUM_STATIONS_W_PICKS:

print(f"Relocating event {i}")

# first relocation, insensitive to outliers

ev.relocate(

stations=net.stations, routine=LOCATION_ROUTINE, method=NLLOC_METHOD,

)

Relocating event 0

Relocating event 1

Relocating event 3

Relocating event 4

Relocating event 5

Relocating event 6

Relocating event 7

Relocating event 8

Relocating event 9

Relocating event 10

Relocating event 11

Relocating event 12

Relocating event 13

Relocating event 14

Relocating event 15

Relocating event 16

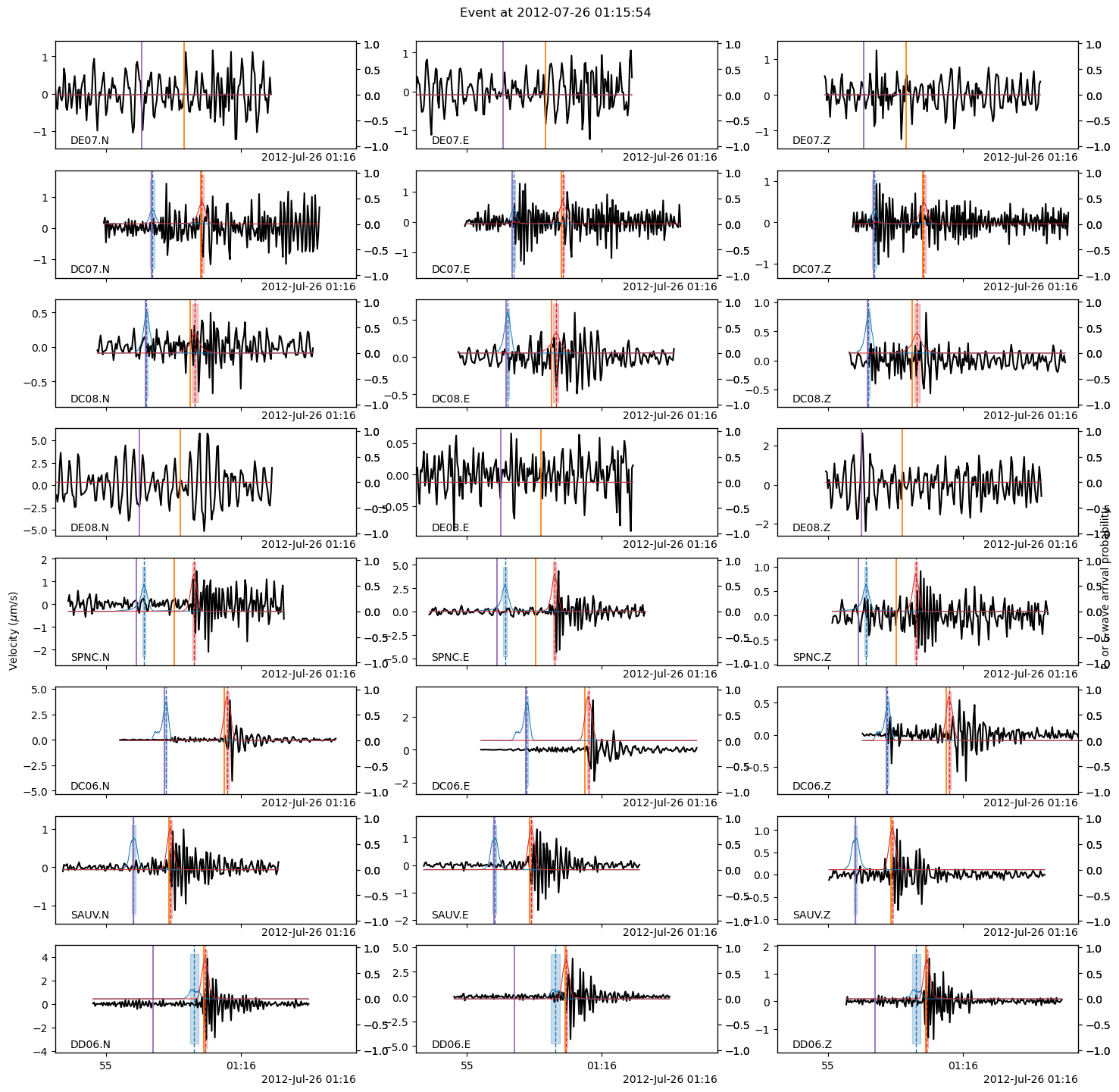

Let’s plot the arrival times predicted by NLLoc’s location! As you can see, a 1D velocity model cannot fit the picks very well. The stations are right in the heterogeneous fault zone.

[50]:

# play with EVENT_IDX to plot different events

EVENT_IDX = 1

fig = backprojection_events[EVENT_IDX].plot(

figsize=(15, 15), plot_picks=True, plot_probabilities=True, plot_predicted_arrivals=True

)

It doesn’t look like much has changed… But we now have the location uncertainties. The uncertainty information is encoded in the covariance matrix, which is stored at BPMF.dataset.Event.aux_data["cov_mat"].

[51]:

EVENT_IDX = 0

ev = backprojection_events[EVENT_IDX]

print(f"The maximum horizontal location uncertainty of event {EVENT_IDX} is {ev.hmax_unc:.2f}km.")

print(f"The azimuth of the maximum horizontal uncertainty is {ev.az_hmax_unc:.2f}degrees.")

print(f"The mainimumhorizontal location uncertainty of event {EVENT_IDX} is {ev.hmin_unc:.2f}km.")

print(f"The azimuth of the minimum horizontal uncertainty is {ev.az_hmin_unc:.2f}degrees.")

print(f"The maximum vertical location uncertainty is {ev.vmax_unc:.2f}km.")

The maximum horizontal location uncertainty of event 0 is 1.71km.

The azimuth of the maximum horizontal uncertainty is 150.39degrees.

The mainimumhorizontal location uncertainty of event 0 is 1.44km.

The azimuth of the minimum horizontal uncertainty is 60.39degrees.

The maximum vertical location uncertainty is 2.44km.

Before plotting the map with the new locations, let’s make sure our locations are not unnecessarily biased by aberrant pick outliers. Even though we used NonLinLoc’s EDT method, which is robust to outliers, we can easily identify outliers and remove them.

[52]:

# we set a maximum tolerable difference, in percentage, between the picked time and the predicted travel time

MAX_TIME_DIFFERENT_PICKS_PREDICTED_PERCENT = 25

for i, ev in enumerate(backprojection_events):

if "NLLoc_reloc" in ev.aux_data:

# this variable was inserted into ev.aux_data if NLLoc successfully located the event

# use predicted times to remove outlier picks

ev.remove_outlier_picks(max_diff_percent=MAX_TIME_DIFFERENT_PICKS_PREDICTED_PERCENT)

if len(ev.picks.dropna(how="any")) >= MINIMUM_NUM_STATIONS_W_PICKS:

print(f"Relocating event {i}")

# first relocation, insensitive to outliers

ev.relocate(

stations=net.stations, routine=LOCATION_ROUTINE, method=NLLOC_METHOD,

)

else:

# after removing the outlier picks, not enough picks were left

# revert to backprojection location

del ev.arrival_times

ev.longitude = ev.aux_data["backprojection_longitude"]

ev.latitude = ev.aux_data["backprojection_latitude"]

ev.depth = ev.aux_data["backprojection_depth"]

[53]:

# play with EVENT_IDX to plot different events

EVENT_IDX = 0

fig = backprojection_events[EVENT_IDX].plot(

figsize=(15, 15), plot_picks=True, plot_predicted_arrivals=True, plot_probabilities=False

)

Gather the new catalog, clean up possible multiples and plot the new locations

Just like before, we make a new instance of BPMF.dataset.Catalog to use the built-in plotting methods.

[54]:

# --- update event ids now that we have our final locations

for ev in backprojection_events:

ev.id = str(ev.origin_time)

# --- create a Catalog objet

nlloc_catalog = BPMF.dataset.Catalog.read_from_events(

backprojection_events,

extra_attributes=["hmax_unc", "hmin_unc", "az_hmax_unc", "vmax_unc"]

)

nlloc_catalog.catalog["az_hmax_unc"] = nlloc_catalog.catalog["az_hmax_unc"]%180.

nlloc_catalog.catalog

Class instance does not have a `cov_mat` attribute.

/home/ebeauce/software/Seismic_BPMF/BPMF/dataset.py:324: UserWarning: no explicit representation of timezones available for np.datetime64

np.asarray(origin_times, dtype="datetime64[ms]")

[54]:

| longitude | latitude | depth | origin_time | hmax_unc | hmin_unc | az_hmax_unc | vmax_unc | |

|---|---|---|---|---|---|---|---|---|

| event_id | ||||||||

| 2012-07-26T01:10:21.720000Z | 30.319141 | 40.728750 | 12.6000 | 2012-07-26 01:10:21.720 | 1.713889 | 1.438382 | 150.388474 | 2.443677 |

| 2012-07-26T01:15:54.200000Z | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:54.200 | 1.766185 | 0.957067 | 93.146858 | 2.177404 |

| 2012-07-26T01:57:57.600000Z | 30.210000 | 40.600000 | -1.5000 | 2012-07-26 01:57:57.600 | 15.000000 | 15.000000 | 0.000000 | 15.000000 |

| 2012-07-26T02:35:01.640000Z | 30.283008 | 40.669375 | 10.7000 | 2012-07-26 02:35:01.640 | 5.237969 | 2.169321 | 46.649498 | 3.042312 |

| 2012-07-26T03:00:38.760000Z | 30.314258 | 40.711875 | 11.9000 | 2012-07-26 03:00:38.760 | 1.722674 | 1.167362 | 88.518181 | 3.007942 |

| 2012-07-26T04:43:38.320000Z | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 04:43:38.320 | 5.539433 | 2.653419 | 131.163864 | 4.183529 |

| 2012-07-26T04:48:38.520000Z | 30.345996 | 40.720312 | 9.3500 | 2012-07-26 04:48:38.520 | 1.817742 | 0.925883 | 94.536827 | 2.592613 |

| 2012-07-26T08:08:25.760000Z | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 08:08:25.760 | 1.209022 | 0.942840 | 127.960640 | 2.648956 |

| 2012-07-26T10:07:23.680000Z | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 10:07:23.680 | 1.501076 | 0.950018 | 91.866226 | 2.387271 |

| 2012-07-26T11:55:36.000000Z | 30.287402 | 40.619687 | -0.7500 | 2012-07-26 11:55:36.000 | 4.696620 | 1.133235 | 144.824448 | 4.342819 |

| 2012-07-26T13:35:26.520000Z | 30.294238 | 40.794063 | -1.9500 | 2012-07-26 13:35:26.520 | 2.955352 | 0.966323 | 87.058800 | 3.966837 |

| 2012-07-26T13:48:32.400000Z | 30.312305 | 40.720625 | 12.3000 | 2012-07-26 13:48:32.400 | 1.546550 | 1.414732 | 21.012421 | 2.729270 |

| 2012-07-26T13:51:58.200000Z | 30.314258 | 40.711875 | 13.5000 | 2012-07-26 13:51:58.200 | 1.741413 | 1.491587 | 166.295975 | 3.198883 |

| 2012-07-26T13:53:31.360000Z | 30.312305 | 40.720625 | 12.1000 | 2012-07-26 13:53:31.360 | 1.578368 | 1.425255 | 31.847794 | 2.887139 |

| 2012-07-26T13:56:54.200000Z | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 13:56:54.200 | 2.007848 | 0.880852 | 86.483894 | 3.057234 |

| 2012-07-26T15:06:20.120000Z | 30.328052 | 40.600078 | -1.9875 | 2012-07-26 15:06:20.120 | 0.870097 | 0.518110 | 94.459953 | 0.358991 |

| 2012-07-26T17:28:21.640000Z | 30.310352 | 40.654375 | -0.5000 | 2012-07-26 17:28:21.640 | 4.602456 | 1.586548 | 63.806900 | 5.752606 |

Remove possible multiple detections:

The backprojection method does not have good inter-event time resolution because of the large uncertainties on the event origin times. There always exists a relatively large trade-off between the origin time and the location so it happens to detect the same event several times in a row if MINIMUM_INTEREVENT_TIME_SEC was not set large enough in 5_backprojection.ipynb. After relocation with NonLinLoc, it is easier to identify such multiple detections.

[55]:

# the criteria that determine whether two events are the same or not are of course somewhat arbitrary

# however, one can play with them and see what values effectively discard multiples

MINIMUM_INTEREVENT_TIME_SEC = 5.

MINIMUM_EPICENTRAL_DISTANCE_KM = 10.

[56]:

# ---------------------------------------------------------

# Remove multiple detections of same event

# ---------------------------------------------------------

# --- build a dictionary where each entry is an event, for explicit indexing

backprojection_events_dict = {ev.id : ev for ev in backprojection_events}

keep = np.ones(nlloc_catalog.n_events, dtype=bool)

for i in range(1, nlloc_catalog.n_events):

previous_ev = i - 1

while not keep[previous_ev]:

previous_ev -= 1

if previous_ev < 0:

break

if previous_ev < 0:

# there is not previous event to compare with

continue

# -----------------------------------------

# catalog row indexes and backprojection_events indexes do not always match

# because catalog is ordered chronologically and some events' chronology

# might have swapped during NLLoc's location process

# -----------------------------------------

event_id = nlloc_catalog.catalog.index[i]

det = backprojection_events_dict[event_id]

previous_event_id = nlloc_catalog.catalog.index[previous_ev]

previous_det = backprojection_events_dict[previous_event_id]

dt_sec = (

nlloc_catalog.catalog.origin_time[i]

- nlloc_catalog.catalog.origin_time[previous_ev]

).total_seconds()

if dt_sec < MINIMUM_INTEREVENT_TIME_SEC:

# test their epicentral distance

epi_distance = BPMF.utils.two_point_epicentral_distance(

nlloc_catalog.catalog.iloc[i]["longitude"],

nlloc_catalog.catalog.iloc[i]["latitude"],

nlloc_catalog.catalog.iloc[previous_ev]["longitude"],

nlloc_catalog.catalog.iloc[previous_ev]["latitude"],

)

if epi_distance > MINIMUM_EPICENTRAL_DISTANCE_KM:

# these two events are close in time but distant in space

continue

if (

"NLLoc_reloc" in det.aux_data

and "NLLoc_reloc" not in previous_det.aux_data

):

keep[previous_ev] = False

elif (

"NLLoc_reloc" not in det.aux_data

and "NLLoc_reloc" in previous_det.aux_data

):

keep[i] = False

elif det.hmax_unc < previous_det.hmax_unc:

keep[previous_ev] = False

elif det.hmax_unc > previous_det.hmax_unc:

keep[i] = False

else:

# if both det and previous_det were not relocated with NLLoc,

# then there origin times cannot be closer than MINIMUM_INTEREVENT_TIME_SEC

continue

nlloc_catalog.catalog = nlloc_catalog.catalog[keep]

nlloc_catalog.catalog["event_id"] = [f"bp_{i}" for i in range(len(nlloc_catalog.catalog))]

nlloc_catalog.catalog

/tmp/ipykernel_2935334/3001744156.py:30: FutureWarning: Series.__getitem__ treating keys as positions is deprecated. In a future version, integer keys will always be treated as labels (consistent with DataFrame behavior). To access a value by position, use `ser.iloc[pos]`

nlloc_catalog.catalog.origin_time[i]

/tmp/ipykernel_2935334/3001744156.py:31: FutureWarning: Series.__getitem__ treating keys as positions is deprecated. In a future version, integer keys will always be treated as labels (consistent with DataFrame behavior). To access a value by position, use `ser.iloc[pos]`

- nlloc_catalog.catalog.origin_time[previous_ev]

[56]:

| longitude | latitude | depth | origin_time | hmax_unc | hmin_unc | az_hmax_unc | vmax_unc | event_id | |

|---|---|---|---|---|---|---|---|---|---|

| event_id | |||||||||

| 2012-07-26T01:10:21.720000Z | 30.319141 | 40.728750 | 12.6000 | 2012-07-26 01:10:21.720 | 1.713889 | 1.438382 | 150.388474 | 2.443677 | bp_0 |

| 2012-07-26T01:15:54.200000Z | 30.340137 | 40.717813 | 9.1500 | 2012-07-26 01:15:54.200 | 1.766185 | 0.957067 | 93.146858 | 2.177404 | bp_1 |

| 2012-07-26T01:57:57.600000Z | 30.210000 | 40.600000 | -1.5000 | 2012-07-26 01:57:57.600 | 15.000000 | 15.000000 | 0.000000 | 15.000000 | bp_2 |

| 2012-07-26T02:35:01.640000Z | 30.283008 | 40.669375 | 10.7000 | 2012-07-26 02:35:01.640 | 5.237969 | 2.169321 | 46.649498 | 3.042312 | bp_3 |

| 2012-07-26T03:00:38.760000Z | 30.314258 | 40.711875 | 11.9000 | 2012-07-26 03:00:38.760 | 1.722674 | 1.167362 | 88.518181 | 3.007942 | bp_4 |

| 2012-07-26T04:43:38.320000Z | 30.323047 | 40.723750 | 10.2000 | 2012-07-26 04:43:38.320 | 5.539433 | 2.653419 | 131.163864 | 4.183529 | bp_5 |

| 2012-07-26T04:48:38.520000Z | 30.345996 | 40.720312 | 9.3500 | 2012-07-26 04:48:38.520 | 1.817742 | 0.925883 | 94.536827 | 2.592613 | bp_6 |

| 2012-07-26T08:08:25.760000Z | 30.322559 | 40.720937 | 8.8500 | 2012-07-26 08:08:25.760 | 1.209022 | 0.942840 | 127.960640 | 2.648956 | bp_7 |

| 2012-07-26T10:07:23.680000Z | 30.340137 | 40.717188 | 8.9500 | 2012-07-26 10:07:23.680 | 1.501076 | 0.950018 | 91.866226 | 2.387271 | bp_8 |

| 2012-07-26T11:55:36.000000Z | 30.287402 | 40.619687 | -0.7500 | 2012-07-26 11:55:36.000 | 4.696620 | 1.133235 | 144.824448 | 4.342819 | bp_9 |

| 2012-07-26T13:35:26.520000Z | 30.294238 | 40.794063 | -1.9500 | 2012-07-26 13:35:26.520 | 2.955352 | 0.966323 | 87.058800 | 3.966837 | bp_10 |

| 2012-07-26T13:48:32.400000Z | 30.312305 | 40.720625 | 12.3000 | 2012-07-26 13:48:32.400 | 1.546550 | 1.414732 | 21.012421 | 2.729270 | bp_11 |

| 2012-07-26T13:51:58.200000Z | 30.314258 | 40.711875 | 13.5000 | 2012-07-26 13:51:58.200 | 1.741413 | 1.491587 | 166.295975 | 3.198883 | bp_12 |

| 2012-07-26T13:53:31.360000Z | 30.312305 | 40.720625 | 12.1000 | 2012-07-26 13:53:31.360 | 1.578368 | 1.425255 | 31.847794 | 2.887139 | bp_13 |

| 2012-07-26T13:56:54.200000Z | 30.345996 | 40.717188 | 9.1500 | 2012-07-26 13:56:54.200 | 2.007848 | 0.880852 | 86.483894 | 3.057234 | bp_14 |

| 2012-07-26T15:06:20.120000Z | 30.328052 | 40.600078 | -1.9875 | 2012-07-26 15:06:20.120 | 0.870097 | 0.518110 | 94.459953 | 0.358991 | bp_15 |

| 2012-07-26T17:28:21.640000Z | 30.310352 | 40.654375 | -0.5000 | 2012-07-26 17:28:21.640 | 4.602456 | 1.586548 | 63.806900 | 5.752606 | bp_16 |

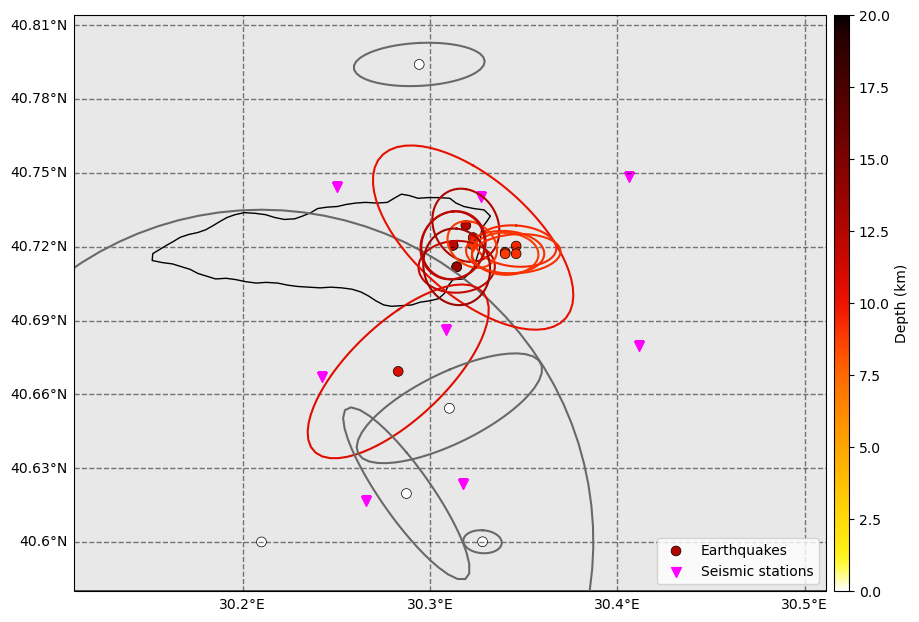

The NLLoc locations are able to resolve the location differences between events much better than the backprojection locations. However, limits remain due to:

Errors in picks.

Approximations in velocity model (1D model whereas the area is hghly heterogeneous!).

Earthquakes are located outside the network, so large uncertainties cannot be avoided.

[57]:

fig = nlloc_catalog.plot_map(

figsize=(10, 10), network=net, s=50, markersize_station=50, lat_margin=0.02, plot_uncertainties=True

)

ax = fig.get_axes()[0]

ax.set_facecolor("dimgrey")

ax.patch.set_alpha(0.15)

Save the relocated events

Save all the events that were not identified as multiples to a hdf5 database.

[58]:

nlloc_catalog.catalog.to_csv(

os.path.join(BPMF.cfg.OUTPUT_PATH, OUTPUT_CSV_FILENAME)

)

[59]:

for i in range(len(nlloc_catalog.catalog)):

evid = nlloc_catalog.catalog.index[i]

backprojection_events_dict[evid].write(

OUTPUT_DB_FILENAME,

db_path=BPMF.cfg.OUTPUT_PATH,

gid=backprojection_events_dict[evid].id

)

Relocation 2 (Bonus): Locating and estimating uncertainties with backprojection on a fine grid

The goal of this section is to explore an alternative to the PhaseNet + NonLinLoc workflow solely based on the use backprojection. If a “sparse” grid was used for the purpose of earthquake detection, then a fine grid can be used here.

In the following, we run a “vanilla” backprojection that backprojects the waveforms’ envelopes (as defined by the Hilbert transform).

[60]:

# PHASES: list of seismic phases to use for backprojection

PHASES = ["P", "S"]

# TT_FILENAME: name of the file with the travel times

TT_FILENAME = "tts.h5"

# N_MAX_STATIONS: the maximum number of stations used for stacking (e.g. the closest to a given grid point)

# note: this parameter is irrelevant in this example with only 8 stations, but it is important when

# using large seismic networks

N_MAX_STATIONS = 10

For backprojection, we need to load the travel time grid and set up the BPMF.template_search.Beamformer instance.

[61]:

tts = BPMF.template_search.TravelTimes(

TT_FILENAME,

tt_folder_path=BPMF.cfg.MOVEOUTS_PATH

)

tts.read(

PHASES, source_indexes=None, read_coords=True, stations=net.stations

)

tts.convert_to_samples(BPMF.cfg.SAMPLING_RATE_HZ, remove_tt_seconds=True)

[62]:

EVENT_IDX = 0

# initialize a new beamformer because, in general, you might want to use a different

# travel-time table for this beamformer (e.g. denser)

bf_loc = BPMF.template_search.Beamformer(

phases=PHASES,

moveouts_relative_to_first=True,

)

# here, we need to "trick" the Beamformer instance and give it something similar to the Data instance in 5_backprojection

bf_loc.set_data(backprojection_events[EVENT_IDX])

# attach the Network instance

bf_loc.set_network(net)

# attach the TravelTimes instance

bf_loc.set_travel_times(tts)

# attach weights (the waveform features are the waveforms' envelopes)

# weights_phases = np.ones((net.n_stations, net.n_components, len(PHASES)), dtype=np.float32)

# weights_phases[:, :2, 0] = 0. # P-wave weight = 0 for horizontal components

# weights_phases[:, 2, 1] = 0. # S-wave weight = 0 for vertical components

# uncomment this section to perform the backprojection-based relocation

# with ML waveform features (PhaseNet)

weights_phases = np.ones((net.n_stations, 2, 2), dtype=np.float32)

weights_phases[:, 0, 1] = 0.0 # S-wave weights to zero for channel 0

weights_phases[:, 1, 0] = 0.0 # P-wave weights to zero for channel 1

bf_loc.set_weights_sources(N_MAX_STATIONS)

bf_loc.set_weights(weights_phases=weights_phases)

[63]:

# relocation parameters

LOCATION_ROUTINE = "beam"

RESTRICTED_DOMAIN_FOR_UNC_KM = 100.

DATA_FOLDER = "preprocessed_2_12"

[64]:

# uncomment this section to perform the backprojection-based relocation

# with ML waveform features (PhaseNet)

import torch

import seisbench.models as sbm

beam_relocated_event = copy.deepcopy(backprojection_events[EVENT_IDX])

ml_detector = sbm.PhaseNet.from_pretrained("original")

ml_detector.eval()

beam_relocated_event.read_waveforms(

120., offset_ot=60., time_shifted=False,

data_folder="preprocessed_2_12", data_reader=data_reader_mseed

)

data_arr = beam_relocated_event.get_np_array(net.stations)

data_arr_n = BPMF.utils.normalize_batch(data_arr)

closest_pow2 = int(np.log2(data_arr_n.shape[-1])) + 1

diff = 2**closest_pow2 - data_arr_n.shape[-1]

left = diff//2

right = diff//2 + diff%2

data_arr_n = np.pad(

data_arr_n,

((0, 0), (0, 0), (left, right)),

mode="reflect"

)

with torch.no_grad():

PN_probas = ml_detector(

torch.from_numpy(data_arr_n).float()

)

# remove channel 0 that is probability of noise

waveform_features = PN_probas[:, 1:, :].detach().numpy()

[65]:

beam_relocated_event = copy.deepcopy(backprojection_events[EVENT_IDX])

# uncomment this section if you are using envelopes (modulus of analytical signal) as waveform features

# beam_relocated_event.relocate(

# routine=LOCATION_ROUTINE,

# duration=120.,

# offset_ot=40.,

# beamformer=bf_loc,

# data_folder=DATA_FOLDER,

# restricted_domain_side_km=RESTRICTED_DOMAIN_FOR_UNC_KM,

# data_reader=data_reader_mseed,

# )

# uncomment this section if you are using ML waveform features

beam_relocated_event.relocate(

routine=LOCATION_ROUTINE,

beamformer=bf_loc,

data_folder=DATA_FOLDER,

restricted_domain_side_km=RESTRICTED_DOMAIN_FOR_UNC_KM,

data_reader=data_reader_mseed,

waveform_features=waveform_features

)

[66]:

dummy_catalog = BPMF.dataset.Catalog.read_from_events(

[backprojection_events[EVENT_IDX]],

extra_attributes=["hmax_unc", "hmin_unc", "az_hmax_unc", "vmax_unc"]

)

dummy_catalog.catalog["az_hmax_unc"] = dummy_catalog.catalog["az_hmax_unc"]%180.

dummy_catalog.catalog

/home/ebeauce/software/Seismic_BPMF/BPMF/dataset.py:324: UserWarning: no explicit representation of timezones available for np.datetime64

np.asarray(origin_times, dtype="datetime64[ms]")

[66]:

| longitude | latitude | depth | origin_time | hmax_unc | hmin_unc | az_hmax_unc | vmax_unc | |

|---|---|---|---|---|---|---|---|---|

| event_id | ||||||||

| 2012-07-26T01:10:21.720000Z | 30.319141 | 40.72875 | 12.6 | 2012-07-26 01:10:21.720 | 1.713889 | 1.438382 | 150.388474 | 2.443677 |

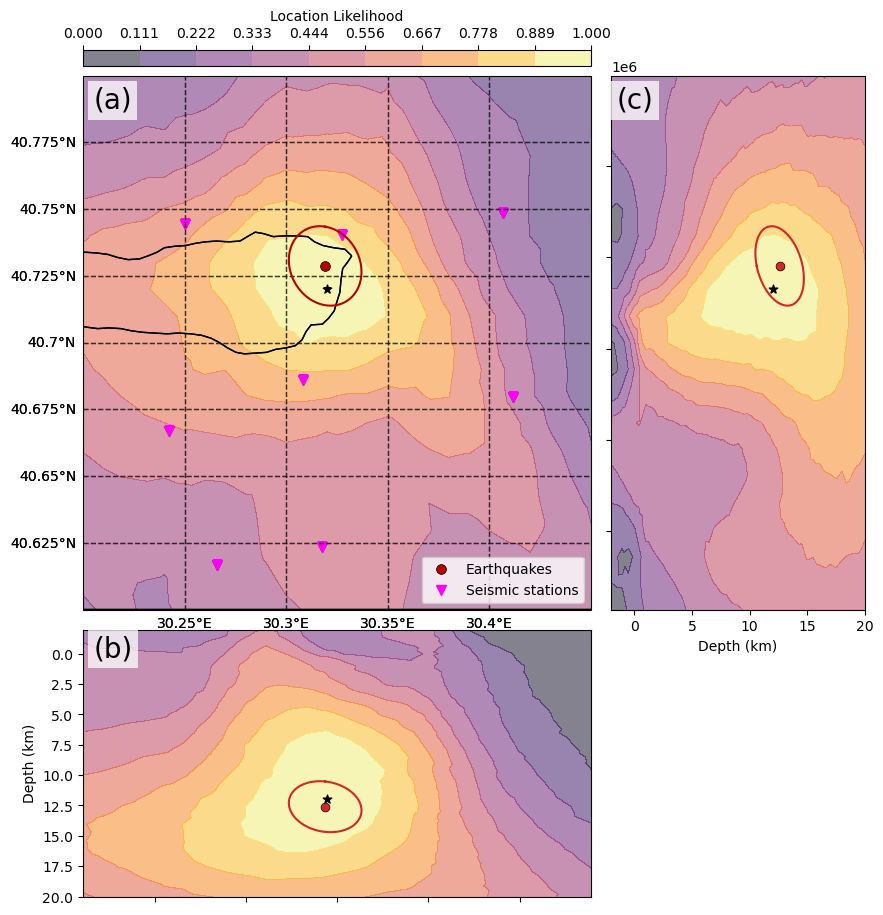

The difference between this backprojection and the “detection backprojection” of 5_backprojection is that, here, we stored the value of each beam of the 4D (x, y, z, time) grid! Below, we plot 3 slices of the (x, y, z, time=time of max beam) volume.

[67]:

from cartopy.crs import PlateCarree

scatter_kwargs = {}

scatter_kwargs["edgecolor"] = "k"

scatter_kwargs["linewidths"] = 0.5

scatter_kwargs["s"] = 40

scatter_kwargs["zorder"] = 3

scatter_kwargs["color"] = "C3"

# when using the beam-based relocation routine, the entire grid of beams is stored in memory

fig_beam_reloc = bf_loc.plot_likelihood(figsize=(15, 11), likelihood=bf_loc.likelihood)

axes = fig_beam_reloc.get_axes()

fig_beam_reloc = dummy_catalog.plot_map(

ax=axes[0], network=net, s=50, markersize_station=50, lat_margin=0.02, plot_uncertainties=True, depth_colorbar=False

)

# the following is a bit cumbersome and is just to plot the uncertainty ellipses on the depth cross-sections

ax = axes[0]

data_coords = PlateCarree()

projected_coords_nlloc = ax.projection.transform_points(

data_coords,

np.array([backprojection_events[EVENT_IDX].longitude]),

np.array([backprojection_events[EVENT_IDX].latitude]),

)

projected_coords_backproj = ax.projection.transform_points(

data_coords,

np.array([beam_relocated_event.longitude]),

np.array([beam_relocated_event.latitude]),

)

ax.scatter(

beam_relocated_event.longitude,

beam_relocated_event.latitude,

marker="*",

s=40,

color="k",

label="Initial backprojection location",

transform=data_coords

)

axes[1].scatter(

projected_coords_nlloc[0, 0], backprojection_events[EVENT_IDX].depth, **scatter_kwargs

)

axes[1].scatter(

projected_coords_backproj[0, 0],

initial_catalog.catalog.loc[EVENT_IDX, "depth"],

marker="*",

s=40,

color="k",

)

ew_ellipse_lon, ew_ellipse_lat, ew_ellipse_dep = BPMF.plotting_utils.vertical_uncertainty_ellipse(

backprojection_events[EVENT_IDX].aux_data["cov_mat"],

backprojection_events[EVENT_IDX].longitude,

backprojection_events[EVENT_IDX].latitude,

backprojection_events[EVENT_IDX].depth,

horizontal_direction="longitude"

)

projected_ellipse_coords = ax.projection.transform_points(

data_coords,

ew_ellipse_lon,

ew_ellipse_lat,

)

axes[1].plot(

projected_ellipse_coords[:, 0], ew_ellipse_dep, color=scatter_kwargs["color"]

)

axes[2].scatter(

backprojection_events[EVENT_IDX].depth, projected_coords_nlloc[0, 1], **scatter_kwargs

)

axes[2].scatter(

initial_catalog.catalog.loc[EVENT_IDX, "depth"],

projected_coords_backproj[0, 1],

marker="*",

s=40,

color="k",

)

ns_ellipse_lon, ns_ellipse_lat, ns_ellipse_dep = BPMF.plotting_utils.vertical_uncertainty_ellipse(

backprojection_events[EVENT_IDX].aux_data["cov_mat"],

backprojection_events[EVENT_IDX].longitude,

backprojection_events[EVENT_IDX].latitude,

backprojection_events[EVENT_IDX].depth,

horizontal_direction="latitude"

)

projected_ellipse_coords = ax.projection.transform_points(

data_coords,

ns_ellipse_lon,

ns_ellipse_lat,

)

axes[2].plot(

ns_ellipse_dep, projected_ellipse_coords[:, 1], color=scatter_kwargs["color"]

)

axes[1].set_ylim(20., axes[1].get_ylim()[1])

axes[2].set_xlim(axes[2].get_xlim()[0], 20.)

for i, ax in enumerate(axes[:-1]):

ax.text(0.02, 0.98, f'({string.ascii_lowercase[i]})', va='top',

fontsize=20, ha='left', bbox={"facecolor": "white", "edgecolor": "none", "alpha": 0.75},

transform=ax.transAxes)

The current way of estimating location uncertainties with the backprojection method is to compute a weighted average distance between the maximum beam grid point (indexed by \(k^*\)) and all other grid points. To avoid the result to be grid-size dependent, we limit the weighted average to a restricted rectangular domain taken around the maximum beam grid point.

where \(e_{i,k^*}\) is the epicentral distance between grid points \(i\) and \(k^*\) and \(\Delta d_{i,k^*}\) is the depth difference between grid points \(i\) and \(k^*\).

In this case, we assume the uncertainty ellipse to be isotropic, that is, \(h_{max,unc} = h_{min,unc}\).

[68]:

EVENT_IDX = 0

ev = backprojection_events[EVENT_IDX]

print(f"The maximum horizontal location uncertainty of event {EVENT_IDX} is {ev.hmax_unc:.2f}km.")

print(f"The azimuth of the maximum horizontal uncertainty is {ev.az_hmax_unc:.2f}degrees.")

print(f"The mainimum horizontal location uncertainty of event {EVENT_IDX} is {ev.hmin_unc:.2f}km.")

print(f"The azimuth of the minimum horizontal uncertainty is {ev.az_hmin_unc:.2f}degrees.")

print(f"The maximum vertical location uncertainty is {ev.vmax_unc:.2f}km with {ev._pl_vmax_unc%90:.2f}degrees plunge.")

The maximum horizontal location uncertainty of event 0 is 1.71km.

The azimuth of the maximum horizontal uncertainty is 150.39degrees.

The mainimum horizontal location uncertainty of event 0 is 1.44km.

The azimuth of the minimum horizontal uncertainty is 60.39degrees.

The maximum vertical location uncertainty is 2.44km with 29.33degrees plunge.