Reminders on Earthquake Magnitudes:Seismic Moment, Moment Magnitude and Local Magnitude

I. Introduction

There exist several magnitude scales that measure the size of an earthquake. The local magnitude \(M_\ell\), or Richter’s magnitude, is often used for small events because it is easy to calculate (however, uncertainties are often large!). The moment magnitude \(M_w\) is the only magnitude that relates unambiguously to some physical earthquake parameter, the seismic moment \(M_0\). Estimating the moment magnitude \(M_w\) requires to observe the low frequencies of the radiated

waves, which is often challenging for small events for which low frequencies are under the noise level. In this notebook, we will see how to use tools from the BPMF.spectrum module to estimate moment and local magnitudes.

II.Moment Magnitude \(M_w\)

For events that were accurately relocated (\(h_{max,unc} < 5\) km) with NonLinLoc, we can try to estimate their seismic moment \(M_0\) and moment magnitude \(M_w\) by fitting their network-averaged, path-corrected displacement spectrum with the omega-square model (Brune or Boatwright model).

We recall that (note that \(\log\) is the base 10 logarithm):

with \(M_0\) in N.m.

Models of displacement spectra in the far-field predict the shape:

Equation (2) is a general expression where \(f_c\) is the corner frequency and \(n\) controls the high-frequency fall-off rate of the spectrum (typically \(n \approx 2\)) and \(\gamma\) controls the sharpness of the spectrum’s corner. The pre-exponential term is the source term and the exponential term is attenuation. \(\tau^{P/S}(x, \xi)\) is the P/S travel time from source location \(\xi\) to receiver location \(x\), and \(Q^{P/S}\) is the P/S attenuation factor. Two special cases of (2) are:

The Brune spectrum (\(n=2\), \(\gamma = 1\)):

\[S_{\mathrm{Brune}}(f) = \dfrac{\Omega_0}{1 + (f/f_c)^{2}} \exp \left( - \dfrac{\pi \tau^{P/S}(x, \xi) f}{Q^{P/S}} \right)\quad (3)\]Boatwright’s spectrum (\(n=2\), \(\gamma=2\)):

\[S_{\mathrm{Boatwright}}(f) = \dfrac{\Omega_0}{\left( 1 + (f/f_c)^{2 n} \right)^{1/2}} \exp \left( - \dfrac{\pi \tau^{P/S}(x, \xi) f}{Q^{P/S}} \right)\quad (4)\]

The low-frequency plateau \(\Omega_0\) is proportional to the seismic moment \(M_0\).

In Equation (5), the P/S superscript is for P and S wave, \(x\) is the receiver location and \(\xi\) is the source location. \(R^{P/S}\) is the average radiation pattern (\(R^P = \sqrt{4/15}\) and \(R^S = \sqrt{2/5}\), see Aki and Richards, 2002), \(\rho\) and \(v_{P/S}\) are the medium density and P/S-wave velocity at the source, respectively, \(\mathcal{R}_{P/S}(x, \xi)\) is the P/S ray length (which can be approximated by \(r\), the source-receiver distance). More details in Aki and Richards, 2002 and Boatwright, 1978.

Given \(u(f)\) is the observed displacement spectrum, the propagation-corrected spectrum, \(\tilde{u}(f)\), is thus:

and the low-frequency values of \(\tilde{u}(f)\) directly reads as the seismic moment, \(M_0\).

III. Local Magnitude \(M_L\)

The local magnitude was initially introduced by Richter (1935) as the log ratio of peak displacement amplitudes between two earthquakes:

which results in an empirical formula correcting for the source-receiver epicentral distance \(X\):

where \(\alpha\) and \(\beta\) and region-specific parameters (see Peter Shearer’s Introduction to Seismology book, Equations 9.68 and 9.69). \(\alpha \log X\) is an empirical correction for attenuation and geometrical spreading. The issue with the traditional definition of local magnitude is that it applies to a fixed frequency band whereas 1) attenuation is frequency-dependent and 2) as detectable magnitude thresholds keep decreasing, one must choose the frequency band where signal-to-noise ratio (SNR) is above 1, otherwise the magnitude estimate is meaningless. Richter himself was concerned about choosing a consistent seismic phase with consistent dominant frequency across earthquakes. It must also be said that Richter’s pioneering work was done in the Western US with sparse seismic networks. Therefore, epicentral distances were usually much greater than depths and it made sense to consider epicentral rather than hypocentral distances, especially given the large uncertainties in depth estimates.

These issues make it difficult to relate local magnitudes to seismic moments, which is crucial if one wants to interpret the exponential distribution of magnitudes (Gutenberg-Richter law) in terms of a power-law distribution of seismic moments. It appears that the local magnitude scale may not be the most appropriate scale for small earthquakes.

IV. Approximate Moment Magnitude \(M_{w^*}\)

To address the above‐mentioned issues, we need to know at which frequency peak displacement is measured and apply the appropriate attenuation correction for this frequency. In fact, the displacement spectrum \(u(f)\) is nothing but the value of peak displacement per unit bandwidth at a given frequency \(f\). We can therefore use the propagation-corrected spectrum \(\tilde{u}(f)\) as a measure of peak displacement corrected for geometrical spreading and attenuation. Any value taken below the corner frequency (\(f < f_c\)) estimates the seismic moment \(M_0\). With small magnitude earthquakes, the signal-to-noise ratio of \(u(f)\) is typically too low to observe the displacement spectrum over a wide enough bandwidth to be able to fit the Brune or Boatwright model. However, a few frequency bins may be above the noise level, and we propose to focus on those to estimate the seismic moment. We therefore introduce an approximate moment magnitude \(M_{w^*}\).

Among these above-noise frequency bins, \(f_{\mathrm{valid}}\), we use the logarithm of the lowest frequency bin, \(f_{\mathrm{valid}}^{-}\), of the propagation-corrected displacement spectrum (Eq. (6)), \(\log \tilde{u}(f_{\mathrm{valid}}^{-})\), as the basis for our local magnitude \(M_{w^*}\):

Why the lowest frequency bin? Because it has more chances to verify \(f_{\mathrm{valid}}^{-} < f_c\) and, thus, to yield a correct estimate of the seismic moment.

\(f_{\mathrm{valid}}^{-}\) may still be above \(f_c\), leading to moment saturation. However, SNR issues are likely with small events, which are those events that have a large corner frequency \(f_c\).

The value of \(A\) determines the magnitude-moment scaling.

To match the moment magnitude-seismic moment scaling (see Eq. (1)), we choose \(A = 2/3\) and \(B = -\frac{2}{3} \times 9.1 = -6.0666\).

References

Aki, K., & Richards, P. G. (2002). Quantitative seismology.

Boatwright, J. (1978). Detailed spectral analysis of two small New York State earthquakes. Bulletin of the Seismological Society of America, 68(4), 1117-1131.

Richter, Charles F (1935). An instrumental earthquake magnitude scale. Bulletin of the seismological society of America.

Shearer, Peter M. Introduction to seismology. Cambridge university press, 2019.

Magnitude Computation

[47]:

%reload_ext autoreload

%autoreload 2

import os

# choose the number of threads you want to limit the computation to

n_CPUs = 24

os.environ["OMP_NUM_THREADS"] = str(n_CPUs)

import BPMF

import h5py as h5

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from BPMF.data_reader_examples import data_reader_mseed

from time import time as give_time

[48]:

INPUT_DB_FILENAME = "final_catalog.h5"

NETWORK_FILENAME = "network.csv"

[49]:

net = BPMF.dataset.Network(NETWORK_FILENAME)

net.read()

Load the metadata of the previously detected events

This is similar to what we did in 6_relocate.

[50]:

events = []

with h5.File(os.path.join(BPMF.cfg.OUTPUT_PATH, INPUT_DB_FILENAME), mode="r") as fin:

for group_id in fin.keys():

events.append(

BPMF.dataset.Event.read_from_file(

INPUT_DB_FILENAME,

db_path=BPMF.cfg.OUTPUT_PATH,

gid=group_id,

data_reader=data_reader_mseed

)

)

# set the source-receiver distance as this is an important parameter

# to know to correct for geometrical spreading

events[-1].set_source_receiver_dist(net)

if not hasattr(events[-1], "arrival_times"):

events[-1].set_arrival_times_from_moveouts(verbose=0)

# set the default moveouts to the theoretical times, that is, those computed in the velocity models

events[-1].set_moveouts_to_theoretical_times()

# when available, set the moveouts to the values defined by the empirical (PhaseNet) picks

events[-1].set_moveouts_to_empirical_times()

print(f"Loaded {len(events)} events.")

Loaded 53 events.

[51]:

for i, ev in enumerate(events):

print(i, ev.origin_time)

0 2012-07-26T01:15:54.200000Z

1 2012-07-26T01:16:30.040000Z

2 2012-07-26T01:18:32.920000Z

3 2012-07-26T01:39:55.520000Z

4 2012-07-26T01:52:36.480000Z

5 2012-07-26T02:24:36.560000Z

6 2012-07-26T03:00:38.720000Z

7 2012-07-26T01:02:53.320000Z

8 2012-07-26T13:48:32.440000Z

9 2012-07-26T13:50:18.520000Z

10 2012-07-26T13:53:31.320000Z

11 2012-07-26T01:03:46.960000Z

12 2012-07-26T01:10:21.760000Z

13 2012-07-26T01:12:32.920000Z

14 2012-07-26T01:15:14.040000Z

15 2012-07-26T02:35:01.200000Z

16 2012-07-26T01:35:15.200000Z

17 2012-07-26T17:28:20.200000Z

18 2012-07-26T14:38:50.520000Z

19 2012-07-26T04:43:38.320000Z

20 2012-07-26T04:46:49.240000Z

21 2012-07-26T04:51:06.560000Z

22 2012-07-26T14:49:28.440000Z

23 2012-07-26T04:48:38.480000Z

24 2012-07-26T01:52:28.040000Z

25 2012-07-26T03:08:33.640000Z

26 2012-07-26T03:10:55.160000Z

27 2012-07-26T04:30:33.640000Z

28 2012-07-26T05:22:04.560000Z

29 2012-07-26T05:45:46.080000Z

30 2012-07-26T05:46:59.320000Z

31 2012-07-26T05:57:16.560000Z

32 2012-07-26T08:08:25.560000Z

33 2012-07-26T10:16:45.880000Z

34 2012-07-26T10:53:46.680000Z

35 2012-07-26T11:02:23.680000Z

36 2012-07-26T09:21:39.320000Z

37 2012-07-26T09:28:48.320000Z

38 2012-07-26T10:07:23.680000Z

39 2012-07-26T16:26:52.520000Z

40 2012-07-26T16:33:57.640000Z

41 2012-07-26T11:55:35.560000Z

42 2012-07-26T13:35:24.400000Z

43 2012-07-26T00:58:11.000000Z

44 2012-07-26T00:58:16.560000Z

45 2012-07-26T02:22:49.800000Z

46 2012-07-26T04:45:03.880000Z

47 2012-07-26T13:51:58.200000Z

48 2012-07-26T13:54:54.160000Z

49 2012-07-26T13:56:54.280000Z

50 2012-07-26T01:09:25.880000Z

51 2012-07-26T01:10:43.920000Z

52 2012-07-26T15:06:20.080000Z

Moment magnitude estimation

In this section, we will demonstrate the workflow we adopt to compute the moment magnitude of each event. It consists in:

Extracting short windows around the P and S waves, as well as a noise window taken before the P wave.

Correcting for instrument response and integrating velocity to obtain the displacement seismograms.

Computing the displacement noise/P/S spectra on each channel using the traditional vs advanced technique.

Correcting for the path effects (geometrical spreading and attenuation).

Averaging the displacement spectrum over the network.

Fitting the displacement spectrum with a model of our choice (here, the Boatwright model).

Conventional method: Example with a single event

[52]:

# waveform extraction parameters

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP_S = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "S"}

PHASE_ON_COMP_P = {"N": "P", "1": "P", "E": "P", "2": "P", "Z": "P"}

# BUFFER_SEC: duration, in sec, of time window taken before and after the window of interest

# which we need to avoid propagating the pre-filtering taper operation into our

# amplitude readings

BUFFER_SEC = 3.0

# OFFSET_PHASE: dictionary defining the time offset taken before a given phase

# for example OFFSET_PHASE["P"] = 1.0 means that we extract the window

# 1 second before the predicted P arrival time

OFFSET_PHASE = {"P": 0.5 + BUFFER_SEC, "S": 0.5 + BUFFER_SEC}

DURATION_SEC = 3.0 + 2.0 * BUFFER_SEC

OFFSET_OT_SEC_NOISE = DURATION_SEC

TIME_SHIFTED = True

DATA_FOLDER = "raw"

DATA_READER = data_reader_mseed

ATTACH_RESPONSE = True

# spectral inversion parameters

SPECTRAL_MODEL = "boatwright"

RHO_SOURCE_KGM3 = 2700.0

VS_SOURCE_MS = 3500.0

VP_SOURCE_MS = VS_SOURCE_MS * 1.72

RHO_RECEIVER_KGM3 = 2600.0

VS_RECEIVER_MS = 2800.0

VP_RECEIVER_MS = VS_RECEIVER_MS * 1.72

FREQ_MIN_HZ = 0.5

FREQ_MAX_HZ = 20.

NUM_FREQS = 100

SNR_THRESHOLD = 10.

MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN = 5

MIN_FRACTION_VALID_POINTS_BELOW_FC = 0.20

MAX_RELATIVE_DISTANCE_ERR_PCT = 33.

NUM_CHANNEL_WEIGHTED_FIT = True

[53]:

EVENT_IDX = 12

event = events[EVENT_IDX]

print(f"The maximum horizontal location uncertainty of event {EVENT_IDX} is {event.hmax_unc:.2f}km.")

print(f"The minimum horizontal location uncertainty of event {EVENT_IDX} is {event.hmin_unc:.2f}km.")

print(f"The maximum vertical location uncertainty is {event.vmax_unc:.2f}km.")

The maximum horizontal location uncertainty of event 12 is 1.72km.

The minimum horizontal location uncertainty of event 12 is 1.49km.

The maximum vertical location uncertainty is 2.48km.

In the following, we define 3 obspy.Stream instances with the noise, P-wave and S-wave traces. Here, it is important to identify the clipped waveforms and set them to zero so that we don’t use them in the future. The identification of clipped waveforms is based on a simple kurtosis test (kurtosis is low for clipped waveforms because the extremum values are often reached).

[54]:

# extract waveforms

# first, read short extract before signal as an estimate of noise

event.read_waveforms(

DURATION_SEC,

time_shifted=False,

data_folder=DATA_FOLDER,

offset_ot=OFFSET_OT_SEC_NOISE,

attach_response=ATTACH_RESPONSE,

)

noise = event.traces.copy()

# then, read signal

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_P,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

p_wave = event.traces.copy()

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_S,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

s_wave = event.traces.copy()

# correct for instrument response and integrate to get displacement seismograms

for st in [noise, p_wave, s_wave]:

for tr in st:

fnyq = tr.stats.sampling_rate / 2.

pre_filt = [

1. / DURATION_SEC,

1.05 / DURATION_SEC,

0.95 * fnyq,

0.98 * fnyq

]

tr.detrend("constant")

tr.detrend("linear")

tr.taper(0.25, type="cosine")

tr.remove_response(

pre_filt=pre_filt,

zero_mean=False,

taper=False,

#taper_fraction=0.25,

output="DISP",

plot=False,

)

tr.trim(

starttime=tr.stats.starttime + BUFFER_SEC,

endtime=tr.stats.endtime - BUFFER_SEC

)

We can now compute the velocity spectra on each channel.

[55]:

spectrum = BPMF.spectrum.Spectrum(event=event)

spectrum.compute_spectrum(noise, "noise", alpha=0.15)

spectrum.compute_spectrum(p_wave, "p", alpha=0.15)

spectrum.compute_spectrum(s_wave, "s", alpha=0.15)

spectrum.set_target_frequencies(FREQ_MIN_HZ, FREQ_MAX_HZ, NUM_FREQS)

spectrum.resample(spectrum.frequencies, spectrum.phases)

spectrum.compute_signal_to_noise_ratio("p")

spectrum.compute_signal_to_noise_ratio("s")

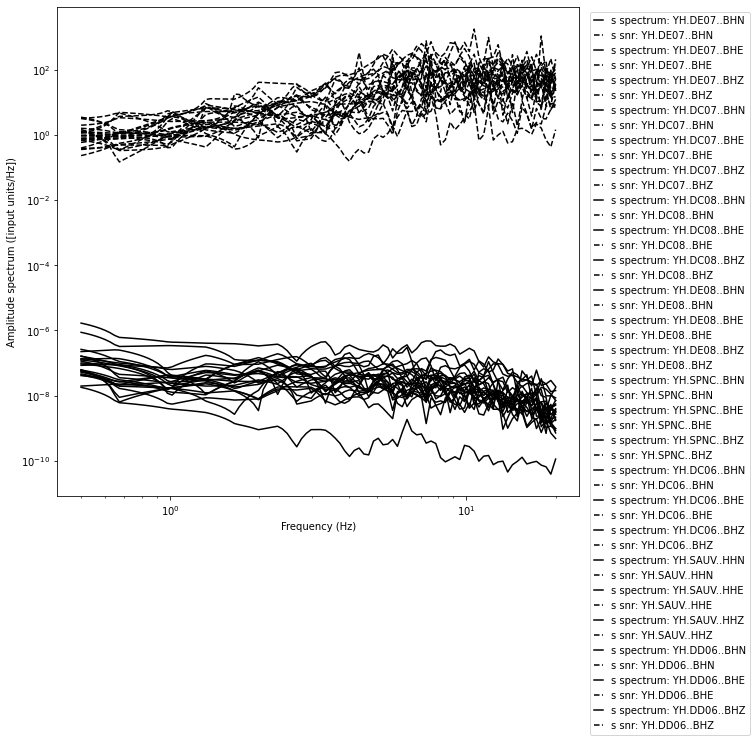

Let’s plot the velocity spectra and the signal-to-noise ratio (SNR) spectra.

[56]:

phase_to_plot = "s"

fig = spectrum.plot_spectrum(phase_to_plot, plot_snr=True)

Before correcting for geometrical spreading, we need to build an attenuation model. In general, body-wave attenuation in the lithosphere is described by he quality factor \(Q\), which is related to frequency by (Aki, 1980):

To build a simple model of attenuation, we assume that \(Q^P = Q^S = Q_0 f^n\) (this is a very crude approximation!!). We search for an adequate value of \(Q_0\) and \(n\) in the literature. \(Q_0 = 33\) and \(n = 0.75\) reproduce reasonably well the curves shown in Izgi et al., 2020.

References:

Aki, K. (1980). Attenuation of shear-waves in the lithosphere for frequencies from 0.05 to 25 Hz. Physics of the Earth and Planetary Interiors, 21(1), 50-60.

Izgi, G., Eken, T., Gaebler, P., Eulenfeld, T., & Taymaz, T. (2020). Crustal seismic attenuation parameters in the western region of the North Anatolian Fault Zone. Journal of Geodynamics, 134, 101694.

[57]:

Q_1Hz = 33.

n = 0.75

Q = Q_1Hz * np.power(spectrum.frequencies, n)

[58]:

spectrum.set_Q_model(Q, spectrum.frequencies)

spectrum.compute_correction_factor(

RHO_SOURCE_KGM3, RHO_RECEIVER_KGM3,

VP_SOURCE_MS, VP_RECEIVER_MS,

VS_SOURCE_MS, VS_RECEIVER_MS

)

[59]:

source_parameters = {}

for phase_for_mag in ["p", "s"]:

spectrum.compute_network_average_spectrum(

phase_for_mag,

SNR_THRESHOLD,

min_num_valid_channels_per_freq_bin=MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN,

max_relative_distance_err_pct=MAX_RELATIVE_DISTANCE_ERR_PCT,

verbose=1

)

# spectrum.integrate(phase_for_mag, average=True)

spectrum.fit_average_spectrum(

phase_for_mag,

model=SPECTRAL_MODEL,

# min_fraction_valid_points_below_fc=MIN_FRACTION_VALID_POINTS_BELOW_FC,

min_fraction_valid_points_below_fc=0.,

weighted=NUM_CHANNEL_WEIGHTED_FIT,

)

if spectrum.inversion_success:

rel_M0_err = 100.*spectrum.M0_err/spectrum.M0

rel_fc_err = 100.*spectrum.fc_err/spectrum.fc

if rel_M0_err > 10. or spectrum.fc < 0. or spectrum.fc > 25.:

continue

print(f"Relative error on M0: {rel_M0_err:.2f}%")

print(f"Relative error on fc: {rel_fc_err:.2f}%")

# event.set_aux_data({f"Mw_{phase_for_mag}": spectrum.Mw})

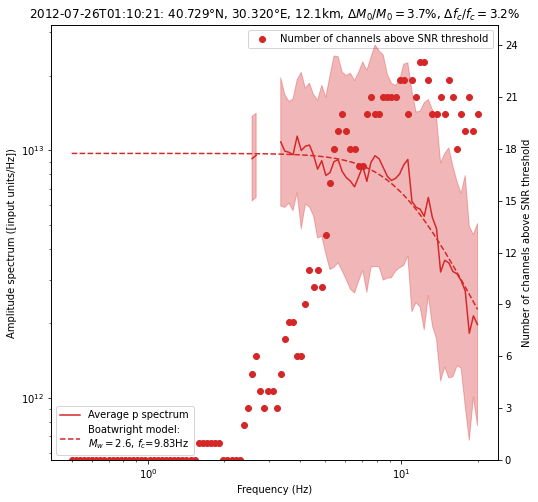

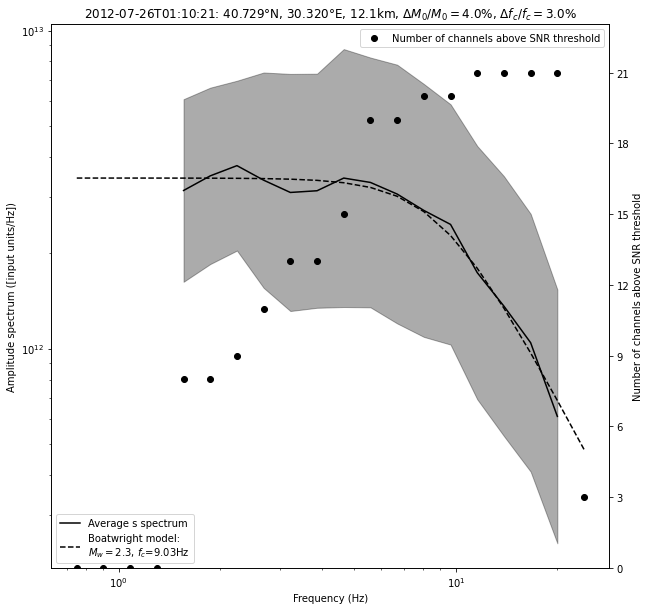

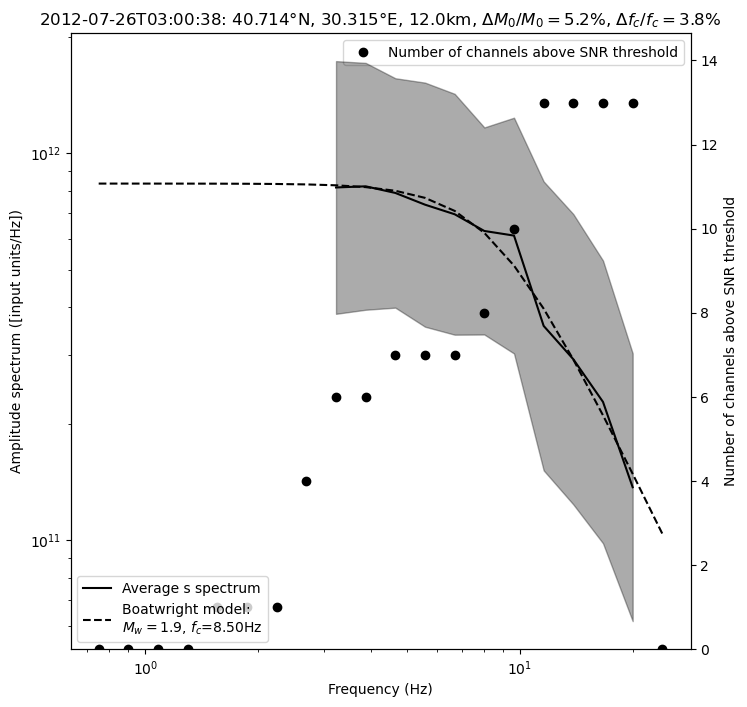

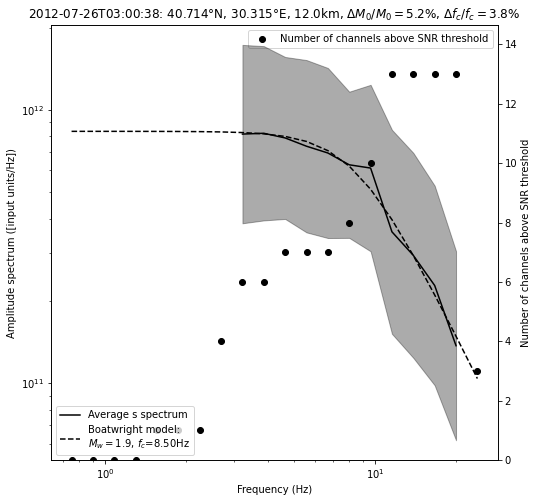

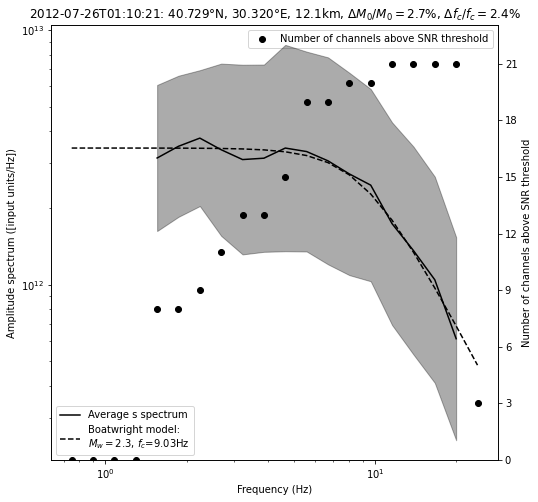

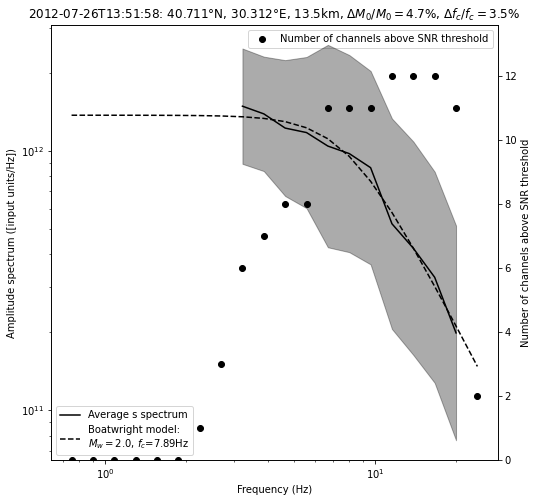

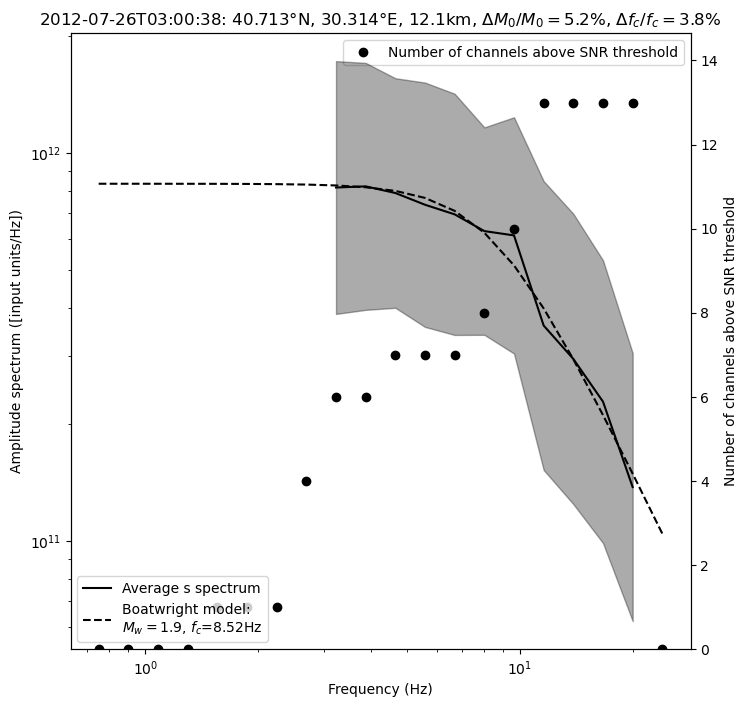

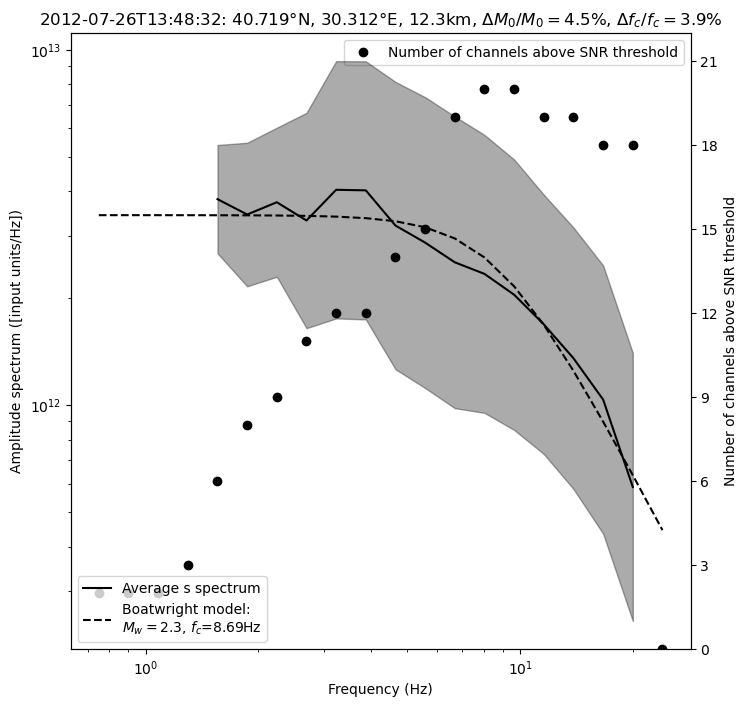

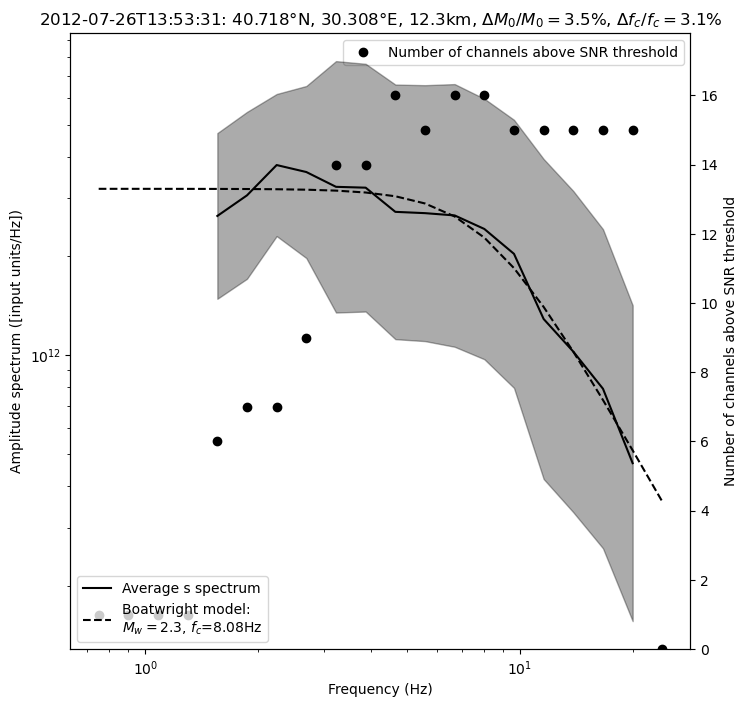

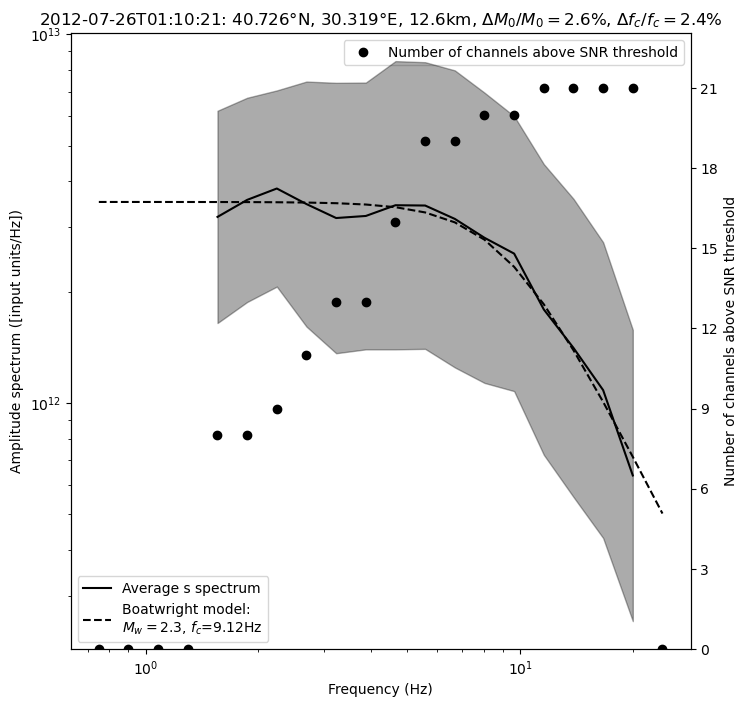

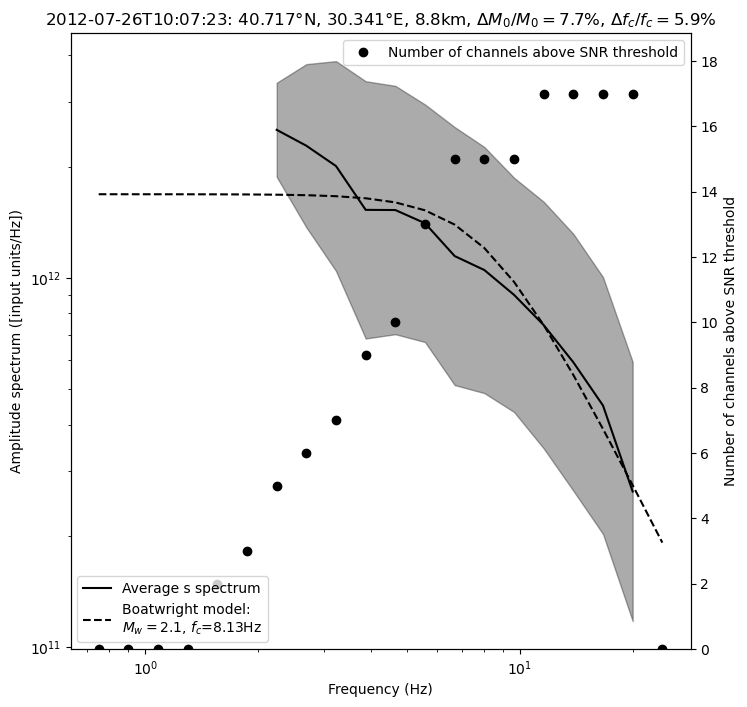

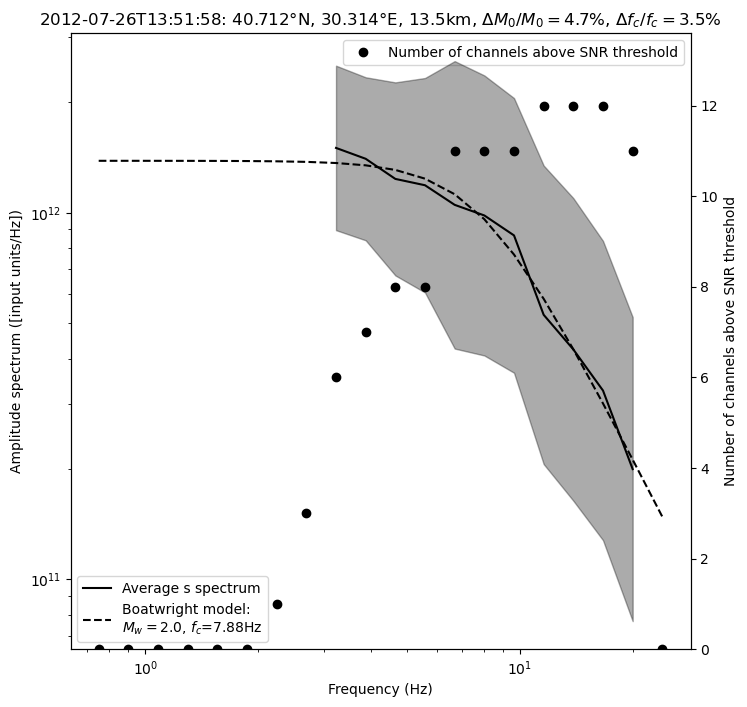

figtitle = (f"{event.origin_time.strftime('%Y-%m-%dT%H:%M:%S')}: "

f"{event.latitude:.3f}""\u00b0"

f"N, {event.longitude:.3f}""\u00b0"

f"E, {event.depth:.1f}km, "

r"$\Delta M_0 / M_0=$"f"{rel_M0_err:.1f}%, "

r"$\Delta f_c / f_c=$"f"{rel_fc_err:.1f}%")

source_parameters[f"M0_{phase_for_mag}"] = spectrum.M0

source_parameters[f"Mw_{phase_for_mag}"] = spectrum.Mw

source_parameters[f"fc_{phase_for_mag}"] = spectrum.fc

source_parameters[f"M0_err_{phase_for_mag}"] = spectrum.M0_err

source_parameters[f"fc_err_{phase_for_mag}"] = spectrum.fc_err

fig = spectrum.plot_average_spectrum(

phase_for_mag,

plot_fit=True,

figname=f"{phase_for_mag}_spectrum_{EVENT_IDX}",

figtitle=figtitle,

figsize=(8, 8),

plot_std=True,

plot_num_valid_channels=True,

)

Not enough valid points! (Only 49.00%)

Relative error on M0: 3.95%

Relative error on fc: 2.99%

The significant discrepancy between the P- and S-wave estimates probably comes from the difficulty of separating P and S waves at short distances. Thus, it’s better to simply use the S-wave estimate.

In general, if one had good S-wave and P-wave spectra, one could produce a single final estimate of the moment magnitude \(M_w\) by averaging the P- and S-wave estimates:

Using the definition of the moment magnitude, we can derive the following formula for the magnitude error:

Method from Al-Ismal et al., 2022: Example with a single event

The Al-Ismail et al., 2022, study shows that a higher SNR displacement spectrum can estimated from the peak amplitude of the displacement waveform filtered in multiple frequency bands. This technique is especially interesting for the small magnitude earthquakes we are dealing with.

Reference:

Al‐Ismail, Fatimah, William L. Ellsworth, and Gregory C. Beroza. (2022) “A Time‐Domain Approach for Accurate Spectral Source Estimation with Application to Ridgecrest, California, Earthquakes.” Bulletin of the Seismological Society of America.

[65]:

# waveform extraction parameters

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP_S = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "S"}

PHASE_ON_COMP_P = {"N": "P", "1": "P", "E": "P", "2": "P", "Z": "P"}

# BUFFER_SEC: duration, in sec, of time window taken before and after the window of interest

# which we need to avoid propagating the pre-filtering taper operation into our

# amplitude readings

BUFFER_SEC = 6.0

# OFFSET_PHASE: dictionary defining the time offset taken before a given phase

# for example OFFSET_PHASE["P"] = 1.0 means that we extract the window

# 1 second before the predicted P arrival time

OFFSET_PHASE = {"P": 0.5 + BUFFER_SEC, "S": 0.5 + BUFFER_SEC}

DURATION_SEC = 3.0 + 2.0 * BUFFER_SEC

OFFSET_OT_SEC_NOISE = DURATION_SEC

TIME_SHIFTED = True

DATA_FOLDER = "raw"

DATA_READER = data_reader_mseed

ATTACH_RESPONSE = True

# multi-band-filtering parameters

FREQUENCY_BANDS = {

"0.5Hz-1.0Hz": [0.5, 1.0],

"1.0Hz-2.0Hz": [1.0, 2.0],

"2.0Hz-4.0Hz": [2.0, 4.0],

"4.0Hz-8.0Hz": [4.0, 8.0],

"8.0Hz-16.0Hz": [8.0, 16.0],

"16.0Hz-32.0Hz": [16.0, 32.0],

}

FILTER_ORDER = 4

USED_COMPONENTS = "[N,E,1,2,Z]"

CENTER_FREQUENCIES = [

0.5

* (

FREQUENCY_BANDS[band][0] + FREQUENCY_BANDS[band][1]

)

for band in FREQUENCY_BANDS

]

# spectral inversion parameters

SPECTRAL_MODEL = "boatwright"

RHO_SOURCE_KGM3 = 2700.0

VS_SOURCE_MS = 3500.0

VP_SOURCE_MS = VS_SOURCE_MS * 1.72

RHO_RECEIVER_KGM3 = 2600.0

VS_RECEIVER_MS = 2800.0

VP_RECEIVER_MS = VS_RECEIVER_MS * 1.72

NUM_FREQS = 20

SNR_THRESHOLD = 10.

MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN = 5

MIN_FRACTION_VALID_POINTS_BELOW_FC = 0.20

MAX_RELATIVE_DISTANCE_ERR_PCT = 33.

NUM_CHANNEL_WEIGHTED_FIT = True

# attenuation model

Q_1HZ = 33.

N = 0.75

[66]:

def plot_filtered_traces(spectrum, station_name, noise_spectrum=None):

import fnmatch

tr_ids = fnmatch.filter(

list(spectrum.keys()), f"*{station_name}*"

)

num_channels = len(tr_ids)

if num_channels == 0:

print(f"Could not find {station_name}")

return

num_bands = len(spectrum[tr_ids[0]]["filtered_traces"])

fig, axes = plt.subplots(

num=f"filtered_traces_{station_name}",

ncols=num_channels,

nrows=num_bands,

figsize=(16, 3*num_bands)

)

for c, trid in enumerate(tr_ids):

axes[0, c].set_title(trid)

for i, band in enumerate(spectrum[tr_ids[0]]["filtered_traces"].keys()):

tr = spectrum[trid]["filtered_traces"][band]

axes[i, c].plot(

tr.times(),

tr.data,

)

axes[i, c].text(

0.02, 0.05, band, transform=axes[i, c].transAxes

)

if noise_spectrum is not None:

tr_n = noise_spectrum[trid]["filtered_traces"][band]

axes[i, c].plot(

tr_n.times(),

tr_n.data,

color="grey",

ls="--",

zorder=0.1,

)

axes[i, c].set_xlabel("Time (s)")

return fig

[67]:

EVENT_IDX = 12

event = events[EVENT_IDX]

print(f"The maximum horizontal location uncertainty of event {EVENT_IDX} is {event.hmax_unc:.2f}km.")

print(f"The minimum horizontal location uncertainty of event {EVENT_IDX} is {event.hmin_unc:.2f}km.")

print(f"The maximum vertical location uncertainty is {event.vmax_unc:.2f}km.")

The maximum horizontal location uncertainty of event 12 is 1.72km.

The minimum horizontal location uncertainty of event 12 is 1.49km.

The maximum vertical location uncertainty is 2.48km.

[68]:

# extract waveforms

# first, read short extract before signal as an estimate of noise

event.read_waveforms(

DURATION_SEC,

time_shifted=False,

data_folder=DATA_FOLDER,

offset_ot=OFFSET_OT_SEC_NOISE,

attach_response=ATTACH_RESPONSE,

)

noise = event.traces.copy()

# then, read signal

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_P,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

p_wave = event.traces.copy()

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_S,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

s_wave = event.traces.copy()

# correct for instrument response and integrate to get displacement seismograms

for st in [noise, p_wave, s_wave]:

for tr in st:

fnyq = tr.stats.sampling_rate / 2.

pre_filt = [

1. / DURATION_SEC,

1.05 / DURATION_SEC,

0.95 * fnyq,

0.98 * fnyq

]

tr.detrend("constant")

tr.detrend("linear")

tr.taper(0.25, type="cosine")

tr.remove_response(

pre_filt=pre_filt,

zero_mean=False,

taper=False,

#taper_fraction=0.25,

output="DISP",

plot=False,

)

[69]:

# -----------------------------------------

# now, compute multi-band displacement spectra

# -----------------------------------------

spectrum = BPMF.spectrum.Spectrum(event=event)

spectrum.set_frequency_bands(FREQUENCY_BANDS)

spectrum.compute_multi_band_spectrum(

noise.select(component=USED_COMPONENTS), "noise", BUFFER_SEC,

dev_mode=True

)

spectrum.compute_multi_band_spectrum(

s_wave.select(component=USED_COMPONENTS), "s", BUFFER_SEC,

dev_mode=True

)

spectrum.compute_multi_band_spectrum(

p_wave.select(component=USED_COMPONENTS), "p", BUFFER_SEC,

dev_mode=True

)

# attenuation model

Q = Q_1HZ * np.power(spectrum.frequencies, N)

spectrum.set_Q_model(Q, spectrum.frequencies)

spectrum.compute_correction_factor(

RHO_SOURCE_KGM3,

RHO_RECEIVER_KGM3,

VP_SOURCE_MS,

VP_RECEIVER_MS,

VS_SOURCE_MS,

VS_RECEIVER_MS,

)

spectrum.set_target_frequencies(

CENTER_FREQUENCIES[0],

CENTER_FREQUENCIES[-1],

NUM_FREQS

)

spectrum.resample(spectrum.frequencies, spectrum.phases)

for phase_for_mag in ["p", "s"]:

spectrum.compute_signal_to_noise_ratio(phase_for_mag)

spectrum.compute_network_average_spectrum(

phase_for_mag,

SNR_THRESHOLD,

min_num_valid_channels_per_freq_bin=MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN,

max_relative_distance_err_pct=MAX_RELATIVE_DISTANCE_ERR_PCT,

)

spectrum.fit_average_spectrum(

phase_for_mag,

model=SPECTRAL_MODEL,

min_fraction_valid_points_below_fc=MIN_FRACTION_VALID_POINTS_BELOW_FC,

weighted=NUM_CHANNEL_WEIGHTED_FIT,

)

fig = spectrum.plot_average_spectrum(

phase_for_mag,

plot_fit=True,

figname=f"{phase_for_mag}_spectrum_{event.id}",

figsize=(10, 10),

figtitle=figtitle,

plot_std=True,

plot_num_valid_channels=True,

)

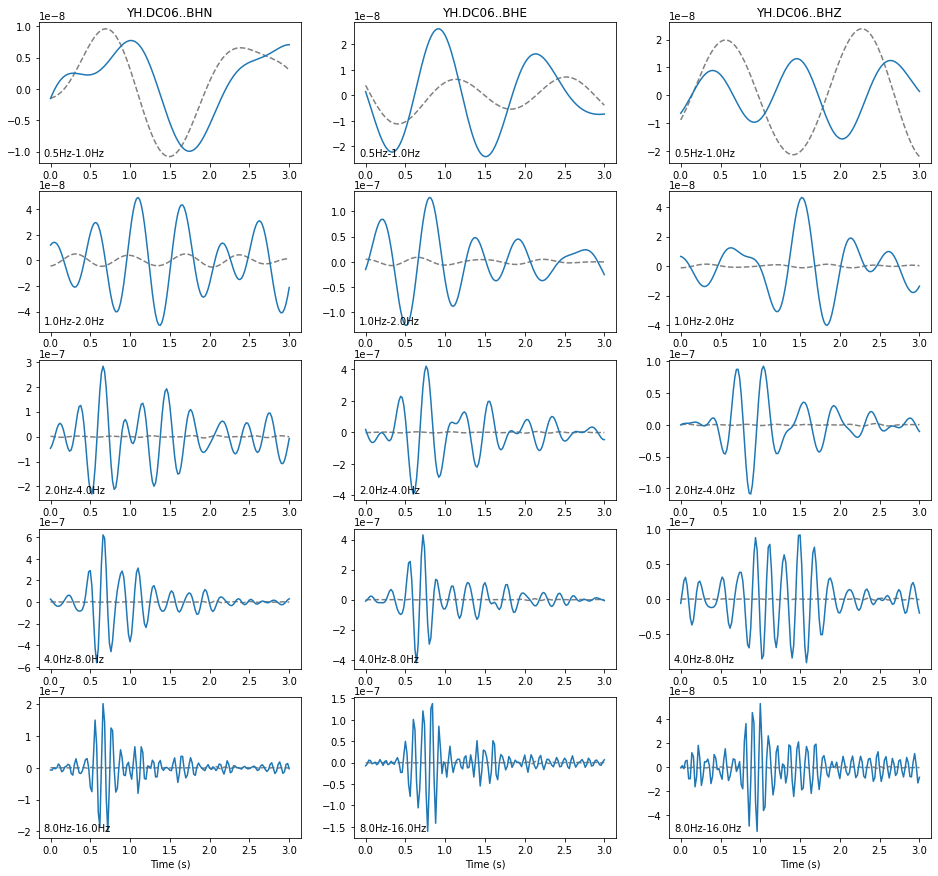

Have a look at the bandpass filtered displacement seismograms from which the spectrum was built:

[70]:

fig = plot_filtered_traces(

spectrum.s_spectrum, "DC06", noise_spectrum=spectrum.noise_spectrum

)

Using the Al-Ismail et al., 2022, technique procudes network-averaged displacement spectra that follows the Boatwright model very closely, therefore we prefer it, in general. However, note that the modeled S-wave spectra are very similar with both techniques.

Estimate a moment magnitude for every event: Al-Ismail method (preferred)

[71]:

# waveform extraction parameters

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP_S = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "S"}

PHASE_ON_COMP_P = {"N": "P", "1": "P", "E": "P", "2": "P", "Z": "P"}

# BUFFER_SEC: duration, in sec, of time window taken before and after the window of interest

# which we need to avoid propagating the pre-filtering taper operation into our

# amplitude readings

BUFFER_SEC = 6.0

# OFFSET_PHASE: dictionary defining the time offset taken before a given phase

# for example OFFSET_PHASE["P"] = 1.0 means that we extract the window

# 1 second before the predicted P arrival time

OFFSET_PHASE = {"P": 0.5 + BUFFER_SEC, "S": 0.5 + BUFFER_SEC}

DURATION_SEC = 3.0 + 2.0 * BUFFER_SEC

OFFSET_OT_SEC_NOISE = DURATION_SEC

TIME_SHIFTED = True

DATA_FOLDER = "raw"

DATA_READER = data_reader_mseed

ATTACH_RESPONSE = True

# multi-band-filtering parameters

FREQUENCY_BANDS = {

"0.5Hz-1.0Hz": [0.5, 1.0],

"1.0Hz-2.0Hz": [1.0, 2.0],

"2.0Hz-4.0Hz": [2.0, 4.0],

"4.0Hz-8.0Hz": [4.0, 8.0],

"8.0Hz-16.0Hz": [8.0, 16.0],

"16.0Hz-32.0Hz": [16.0, 32.0],

}

FILTER_ORDER = 4

USED_COMPONENTS = "[N,E,1,2,Z]"

CENTER_FREQUENCIES = [

0.5

* (

FREQUENCY_BANDS[band][0] + FREQUENCY_BANDS[band][1]

)

for band in FREQUENCY_BANDS

]

# spectral inversion parameters

SPECTRAL_MODEL = "boatwright"

RHO_SOURCE_KGM3 = 2700.0

VS_SOURCE_MS = 3500.0

VP_SOURCE_MS = VS_SOURCE_MS * 1.72

RHO_RECEIVER_KGM3 = 2600.0

VS_RECEIVER_MS = 2800.0

VP_RECEIVER_MS = VS_RECEIVER_MS * 1.72

NUM_FREQS = 20

SNR_THRESHOLD = 10.

MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN = 5

MIN_FRACTION_VALID_POINTS_BELOW_FC = 0.20

MAX_RELATIVE_DISTANCE_ERR_PCT = 33.

NUM_CHANNEL_WEIGHTED_FIT = True

# attenuation model

Q_1HZ = 33.

N = 0.75

[72]:

for i, event in enumerate(events):

print("========================")

print(f"Processing event {i}")

# extract waveforms

# first, read short extract before signal as an estimate of noise

event.read_waveforms(

DURATION_SEC,

time_shifted=False,

data_folder=DATA_FOLDER,

offset_ot=OFFSET_OT_SEC_NOISE,

attach_response=ATTACH_RESPONSE,

)

noise = event.traces.copy()

# then, read signal

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_P,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

p_wave = event.traces.copy()

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_S,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

s_wave = event.traces.copy()

# correct for instrument response and integrate to get displacement seismograms

for st in [noise, p_wave, s_wave]:

for tr in st:

fnyq = tr.stats.sampling_rate / 2.

pre_filt = [

1. / DURATION_SEC,

1.05 / DURATION_SEC,

0.95 * fnyq,

0.98 * fnyq

]

tr.detrend("constant")

tr.detrend("linear")

tr.taper(0.25, type="cosine")

tr.remove_response(

pre_filt=pre_filt,

zero_mean=False,

taper=False,

#taper_fraction=0.25,

output="DISP",

plot=False,

)

# -----------------------------------------

# now, compute multi-band displacement spectra

# -----------------------------------------

spectrum = BPMF.spectrum.Spectrum(event=event)

spectrum.set_frequency_bands(FREQUENCY_BANDS)

spectrum.compute_multi_band_spectrum(

noise.select(component=USED_COMPONENTS), "noise", BUFFER_SEC,

dev_mode=False

)

spectrum.compute_multi_band_spectrum(

s_wave.select(component=USED_COMPONENTS), "s", BUFFER_SEC,

dev_mode=False

)

spectrum.compute_multi_band_spectrum(

p_wave.select(component=USED_COMPONENTS), "p", BUFFER_SEC,

dev_mode=False

)

Q = Q_1HZ * np.power(spectrum.frequencies, N)

spectrum.set_Q_model(Q, spectrum.frequencies)

spectrum.compute_correction_factor(

RHO_SOURCE_KGM3,

RHO_RECEIVER_KGM3,

VP_SOURCE_MS,

VP_RECEIVER_MS,

VS_SOURCE_MS,

VS_RECEIVER_MS,

)

spectrum.set_target_frequencies(

CENTER_FREQUENCIES[0],

CENTER_FREQUENCIES[-1],

NUM_FREQS

)

spectrum.resample(spectrum.frequencies, spectrum.phases)

source_parameters = {}

for phase_for_mag in ["s"]:

spectrum.compute_signal_to_noise_ratio(phase_for_mag)

spectrum.compute_network_average_spectrum(

phase_for_mag,

SNR_THRESHOLD,

min_num_valid_channels_per_freq_bin=MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN,

max_relative_distance_err_pct=MAX_RELATIVE_DISTANCE_ERR_PCT,

)

if not phase_for_mag in spectrum.average_spectra:

continue

spectrum.fit_average_spectrum(

phase_for_mag,

model=SPECTRAL_MODEL,

min_fraction_valid_points_below_fc=MIN_FRACTION_VALID_POINTS_BELOW_FC,

weighted=NUM_CHANNEL_WEIGHTED_FIT,

)

if spectrum.inversion_success:

rel_M0_err = 100.*spectrum.M0_err/spectrum.M0

rel_fc_err = 100.*spectrum.fc_err/spectrum.fc

if rel_M0_err > 10. or spectrum.fc < 0. or spectrum.fc > 25.:

continue

# event.set_aux_data({f"Mw_{phase_for_mag}": spectrum.Mw})

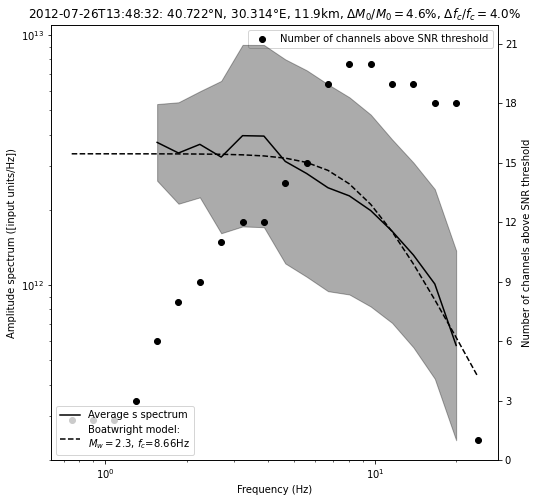

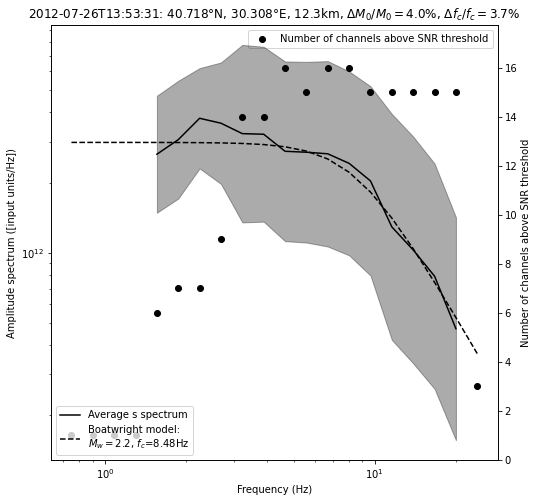

figtitle = (f"{event.origin_time.strftime('%Y-%m-%dT%H:%M:%S')}: "

f"{event.latitude:.3f}""\u00b0"

f"N, {event.longitude:.3f}""\u00b0"

f"E, {event.depth:.1f}km, "

r"$\Delta M_0 / M_0=$"f"{rel_M0_err:.1f}%, "

r"$\Delta f_c / f_c=$"f"{rel_fc_err:.1f}%")

source_parameters[f"M0_{phase_for_mag}"] = spectrum.M0

source_parameters[f"Mw_{phase_for_mag}"] = spectrum.Mw

source_parameters[f"fc_{phase_for_mag}"] = spectrum.fc

source_parameters[f"M0_err_{phase_for_mag}"] = spectrum.M0_err

source_parameters[f"fc_err_{phase_for_mag}"] = spectrum.fc_err

fig = spectrum.plot_average_spectrum(

phase_for_mag,

plot_fit=True,

figname=f"{phase_for_mag}_spectrum_{event.id}",

figtitle=figtitle,

figsize=(8, 8),

plot_std=True,

plot_num_valid_channels=True,

)

Mw_exists = False

norm = 0.

Mw = 0.

Mw_err = 0.

for ph in ["p", "s"]:

if f"Mw_{ph}" in source_parameters:

Mw += source_parameters[f"Mw_{ph}"]

Mw_err += 2./3. * source_parameters[f"M0_err_{ph}"]/source_parameters[f"M0_{ph}"]

norm += 1

Mw_exists = True

if Mw_exists:

Mw /= norm

Mw_err /= norm

source_parameters["Mw"] = Mw

source_parameters["Mw_err"] = Mw_err

else:

Mw = np.nan

Mw_err = np.nan

print(f"The P-S averaged moment magnitude is {Mw:.2f} +/- {Mw_err:.2f}")

# save all this new information in BPMF.dataset.Event.aux_data

event.set_aux_data(source_parameters)

========================

Processing event 0

Not enough valid points! (Only 35.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 1

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 2

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 3

Not enough valid points! (Only 30.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 4

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 5

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 6

The P-S averaged moment magnitude is 1.88 +/- 0.03

========================

Processing event 7

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 8

The P-S averaged moment magnitude is 2.29 +/- 0.03

========================

Processing event 9

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 10

The P-S averaged moment magnitude is 2.27 +/- 0.02

========================

Processing event 11

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 12

The P-S averaged moment magnitude is 2.30 +/- 0.02

========================

Processing event 13

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 14

Not enough valid points! (Only 5.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 15

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 16

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 17

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 18

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 19

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 20

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 21

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 22

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 23

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 24

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 25

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 26

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 27

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 28

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 29

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 30

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 31

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 32

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 33

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 34

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 35

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 36

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 37

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 38

The P-S averaged moment magnitude is 2.08 +/- 0.05

========================

Processing event 39

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 40

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 41

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 42

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 43

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 44

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 45

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 46

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 47

The P-S averaged moment magnitude is 2.03 +/- 0.03

========================

Processing event 48

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 49

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 50

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 51

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 52

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

Estimate a moment magnitude for every event: Traditional method (un-comment if you want to run it)

[73]:

# waveform extraction parameters

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP_S = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "S"}

PHASE_ON_COMP_P = {"N": "P", "1": "P", "E": "P", "2": "P", "Z": "P"}

# BUFFER_SEC: duration, in sec, of time window taken before and after the window of interest

# which we need to avoid propagating the pre-filtering taper operation into our

# amplitude readings

BUFFER_SEC = 3.0

# OFFSET_PHASE: dictionary defining the time offset taken before a given phase

# for example OFFSET_PHASE["P"] = 1.0 means that we extract the window

# 1 second before the predicted P arrival time

OFFSET_PHASE = {"P": 0.5 + BUFFER_SEC, "S": 0.5 + BUFFER_SEC}

DURATION_SEC = 3.0 + 2.0 * BUFFER_SEC

OFFSET_OT_SEC_NOISE = DURATION_SEC

TIME_SHIFTED = True

DATA_FOLDER = "raw"

DATA_READER = data_reader_mseed

ATTACH_RESPONSE = True

# spectral inversion parameters

SPECTRAL_MODEL = "boatwright"

RHO_SOURCE_KGM3 = 2700.0

VS_SOURCE_MS = 3500.0

VP_SOURCE_MS = VS_SOURCE_MS * 1.72

RHO_RECEIVER_KGM3 = 2600.0

VS_RECEIVER_MS = 2800.0

VP_RECEIVER_MS = VS_RECEIVER_MS * 1.72

FREQ_MIN_HZ = 0.5

FREQ_MAX_HZ = 20.

NUM_FREQS = 100

SNR_THRESHOLD = 10.

MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN = 5

MIN_FRACTION_VALID_POINTS_BELOW_FC = 0.20

MAX_RELATIVE_DISTANCE_ERR_PCT = 33.

NUM_CHANNEL_WEIGHTED_FIT = True

# attenuation model

Q_1HZ = 33.

N = 0.75

Let’s run the magnitude computation code for every event. If fitting the network-averaged displacement spectrum is not possible because of poor SNR or location, or if the inverted parameters’ errors are too large then the moment magnitude cannot be estimated and the returned value is nan.

[74]:

for i, event in enumerate(events):

print("========================")

print(f"Processing event {i}")

# extract waveforms

# first, read short extract before signal as an estimate of noise

event.read_waveforms(

DURATION_SEC,

time_shifted=False,

data_folder=DATA_FOLDER,

offset_ot=OFFSET_OT_SEC_NOISE,

attach_response=ATTACH_RESPONSE,

)

noise = event.traces.copy()

# then, read signal

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_P,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

p_wave = event.traces.copy()

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_S,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

s_wave = event.traces.copy()

# correct for instrument response and integrate to get displacement seismograms

for st in [noise, p_wave, s_wave]:

for tr in st:

fnyq = tr.stats.sampling_rate / 2.

pre_filt = [

1. / DURATION_SEC,

1.05 / DURATION_SEC,

0.95 * fnyq,

0.98 * fnyq

]

tr.detrend("constant")

tr.detrend("linear")

tr.taper(0.25, type="cosine")

tr.remove_response(

pre_filt=pre_filt,

zero_mean=False,

taper=False,

#taper_fraction=0.25,

output="DISP",

plot=False,

)

tr.trim(

starttime=tr.stats.starttime + BUFFER_SEC,

endtime=tr.stats.endtime - BUFFER_SEC

)

spectrum = BPMF.spectrum.Spectrum(event=event)

spectrum.compute_spectrum(noise, "noise")

spectrum.compute_spectrum(p_wave, "p")

spectrum.compute_spectrum(s_wave, "s")

spectrum.set_target_frequencies(FREQ_MIN_HZ, FREQ_MAX_HZ, NUM_FREQS)

spectrum.resample(spectrum.frequencies, spectrum.phases)

spectrum.compute_signal_to_noise_ratio("p")

spectrum.compute_signal_to_noise_ratio("s")

Q = Q_1HZ * np.power(spectrum.frequencies, N)

spectrum.set_Q_model(Q, spectrum.frequencies)

spectrum.compute_correction_factor(

RHO_SOURCE_KGM3, RHO_RECEIVER_KGM3,

VP_SOURCE_MS, VP_RECEIVER_MS,

VS_SOURCE_MS, VS_RECEIVER_MS

)

source_parameters = {}

for phase_for_mag in ["s"]:

spectrum.compute_network_average_spectrum(

phase_for_mag,

SNR_THRESHOLD,

min_num_valid_channels_per_freq_bin=MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN,

max_relative_distance_err_pct=MAX_RELATIVE_DISTANCE_ERR_PCT

)

if not phase_for_mag in spectrum.average_spectra:

continue

spectrum.integrate(phase_for_mag, average=True)

spectrum.fit_average_spectrum(

phase_for_mag,

model=SPECTRAL_MODEL,

min_fraction_valid_points_below_fc=MIN_FRACTION_VALID_POINTS_BELOW_FC,

weighted=NUM_CHANNEL_WEIGHTED_FIT

)

if spectrum.inversion_success:

rel_M0_err = 100.*spectrum.M0_err/spectrum.M0

rel_fc_err = 100.*spectrum.fc_err/spectrum.fc

if rel_M0_err > 10. or spectrum.fc < 0. or spectrum.fc > 25.:

continue

# event.set_aux_data({f"Mw_{phase_for_mag}": spectrum.Mw})

figtitle = (f"{event.origin_time.strftime('%Y-%m-%dT%H:%M:%S')}: "

f"{event.latitude:.3f}""\u00b0"

f"N, {event.longitude:.3f}""\u00b0"

f"E, {event.depth:.1f}km, "

r"$\Delta M_0 / M_0=$"f"{rel_M0_err:.1f}%, "

r"$\Delta f_c / f_c=$"f"{rel_fc_err:.1f}%")

source_parameters[f"M0_{phase_for_mag}"] = spectrum.M0

source_parameters[f"Mw_{phase_for_mag}"] = spectrum.Mw

source_parameters[f"fc_{phase_for_mag}"] = spectrum.fc

source_parameters[f"M0_err_{phase_for_mag}"] = spectrum.M0_err

source_parameters[f"fc_err_{phase_for_mag}"] = spectrum.fc_err

fig = spectrum.plot_average_spectrum(

phase_for_mag,

plot_fit=True,

figname=f"{phase_for_mag}_spectrum_{i}",

figtitle=figtitle,

figsize=(8, 8),

plot_std=True,

plot_num_valid_channels=True,

)

Mw_exists = False

norm = 0.

Mw = 0.

Mw_err = 0.

for ph in ["p", "s"]:

if f"Mw_{ph}" in source_parameters:

Mw += source_parameters[f"Mw_{ph}"]

Mw_err += 2./3. * source_parameters[f"M0_err_{ph}"]/source_parameters[f"M0_{ph}"]

norm += 1

Mw_exists = True

if Mw_exists:

Mw /= norm

Mw_err /= norm

source_parameters["Mw"] = Mw

source_parameters["Mw_err"] = Mw_err

else:

Mw = np.nan

Mw_err = np.nan

print(f"The P-S averaged moment magnitude is {Mw:.2f} +/- {Mw_err:.2f}")

source_parameters["Mw"] = Mw

source_parameters["Mw_err"] = Mw_err

# save all this new information in BPMF.dataset.Event.aux_data

event.set_aux_data(source_parameters)

========================

Processing event 0

Not enough valid points! (Only 3.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 1

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 2

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 3

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 4

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 5

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 6

Not enough valid points! (Only 9.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 7

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 8

Not enough valid points below corner frequency (only 6.0%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 9

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 10

Not enough valid points below corner frequency (only 0.0%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 11

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 12

Not enough valid points below corner frequency (only 7.0%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 13

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 14

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 15

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 16

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 17

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 18

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 19

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 20

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 21

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 22

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 23

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 24

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 25

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 26

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 27

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 28

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 29

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 30

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 31

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 32

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 33

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 34

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 35

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 36

Not enough valid points! (Only 1.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 37

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 38

Not enough valid points! (Only 39.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 39

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 40

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 41

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 42

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 43

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 44

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 45

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 46

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 47

Not enough valid points! (Only 15.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 48

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 49

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 50

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 51

Not enough valid points! (Only 2.00%)

The P-S averaged moment magnitude is nan +/- nan

========================

Processing event 52

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan

Approximate moment magnitude estimation

To compute the approximate moment magnitude \(M_{w^*}\) of an event, as we defined at the beginning of this notebook, we actually just need to add a block of code in the same processing that we used to estimate moment magnitudes. Thus, it usually makes sense to compute both at the same time. While most events do not produce a valid moment magnitude estimate, we get an approximate moment magnitude for each event.

[75]:

# waveform extraction parameters

# PHASE_ON_COMP: dictionary defining which moveout we use to extract the waveform

PHASE_ON_COMP_S = {"N": "S", "1": "S", "E": "S", "2": "S", "Z": "S"}

PHASE_ON_COMP_P = {"N": "P", "1": "P", "E": "P", "2": "P", "Z": "P"}

# BUFFER_SEC: duration, in sec, of time window taken before and after the window of interest

# which we need to avoid propagating the pre-filtering taper operation into our

# amplitude readings

BUFFER_SEC = 6.0

# OFFSET_PHASE: dictionary defining the time offset taken before a given phase

# for example OFFSET_PHASE["P"] = 1.0 means that we extract the window

# 1 second before the predicted P arrival time

OFFSET_PHASE = {"P": 0.5 + BUFFER_SEC, "S": 0.5 + BUFFER_SEC}

DURATION_SEC = 3.0 + 2.0 * BUFFER_SEC

OFFSET_OT_SEC_NOISE = DURATION_SEC

TIME_SHIFTED = True

DATA_FOLDER = "raw"

DATA_READER = data_reader_mseed

ATTACH_RESPONSE = True

# multi-band-filtering parameters

FREQUENCY_BANDS = {

"0.5Hz-1.0Hz": [0.5, 1.0],

"1.0Hz-2.0Hz": [1.0, 2.0],

"2.0Hz-4.0Hz": [2.0, 4.0],

"4.0Hz-8.0Hz": [4.0, 8.0],

"8.0Hz-16.0Hz": [8.0, 16.0],

"16.0Hz-32.0Hz": [16.0, 32.0],

}

FILTER_ORDER = 4

USED_COMPONENTS = "[N,E,1,2,Z]"

CENTER_FREQUENCIES = [

0.5

* (

FREQUENCY_BANDS[band][0] + FREQUENCY_BANDS[band][1]

)

for band in FREQUENCY_BANDS

]

# spectral inversion parameters

SPECTRAL_MODEL = "boatwright"

RHO_SOURCE_KGM3 = 2700.0

VS_SOURCE_MS = 3500.0

VP_SOURCE_MS = VS_SOURCE_MS * 1.72

RHO_RECEIVER_KGM3 = 2600.0

VS_RECEIVER_MS = 2800.0

VP_RECEIVER_MS = VS_RECEIVER_MS * 1.72

NUM_FREQS = 20

SNR_THRESHOLD = 10.

MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN = 5

MIN_FRACTION_VALID_POINTS_BELOW_FC = 0.20

MAX_RELATIVE_DISTANCE_ERR_PCT = 33.

NUM_CHANNEL_WEIGHTED_FIT = True

PHASE_FOR_MAG = "s"

NUM_AVERAGING_BANDS = 3

LOW_SNR_FREQ_MIN_HZ = 2.0

A_MOMENT_MAGNITUDE_SCALING = 2. /3.

B_MOMENT_MAGNITUDE_SCALING = -9.1 * A_MOMENT_MAGNITUDE_SCALING

# attenuation model

Q_1HZ = 33.

N = 0.75

[76]:

def snr_based_weights(snr, snr_threshold, weight_max=3.0, max_num_bad_measurements=9):

# allow some numerical noise (?)

snr_clipped = np.minimum(snr, 1.001 * snr_threshold)

# linear function of snr

weights = snr_clipped

# clip weights

weights = np.minimum(weights, weight_max)

if np.sum(snr >= snr_threshold) >= max_num_bad_measurements:

# set weights of bad measurements to 0

weights[snr < snr_threshold] = 0.

else:

ordered_indexes = np.argsort(snr)

# set to 0 all but the `max_num_bad_measurements` least bad meas.

weights[ordered_indexes[:-max_num_bad_measurements]] = 0.

return weights

[78]:

for i, event in enumerate(events):

print("========================")

print(f"Processing event {i}")

source_parameters = {}

# extract waveforms

# first, read short extract before signal as an estimate of noise

event.read_waveforms(

DURATION_SEC,

time_shifted=False,

data_folder=DATA_FOLDER,

offset_ot=OFFSET_OT_SEC_NOISE,

attach_response=ATTACH_RESPONSE,

)

noise = event.traces.copy()

event.read_waveforms(

DURATION_SEC,

phase_on_comp=PHASE_ON_COMP_S,

offset_phase=OFFSET_PHASE,

time_shifted=TIME_SHIFTED,

data_folder=DATA_FOLDER,

attach_response=ATTACH_RESPONSE,

)

event.zero_out_clipped_waveforms(kurtosis_threshold=-1)

s_wave = event.traces.copy()

# correct for instrument response and integrate to get displacement seismograms

for st in [noise, s_wave]:

for tr in st:

fnyq = tr.stats.sampling_rate / 2.

pre_filt = [

1. / DURATION_SEC,

1.05 / DURATION_SEC,

0.95 * fnyq,

0.98 * fnyq

]

tr.detrend("constant")

tr.detrend("linear")

tr.taper(0.25, type="cosine")

tr.remove_response(

pre_filt=pre_filt,

zero_mean=False,

taper=False,

#taper_fraction=0.25,

output="DISP",

plot=False,

)

# -----------------------------------------

# now, compute multi-band displacement spectra

# -----------------------------------------

spectrum = BPMF.spectrum.Spectrum(event=event)

spectrum.set_frequency_bands(FREQUENCY_BANDS)

spectrum.compute_multi_band_spectrum(

noise.select(component=USED_COMPONENTS), "noise", BUFFER_SEC,

dev_mode=False

)

spectrum.compute_multi_band_spectrum(

s_wave.select(component=USED_COMPONENTS), "s", BUFFER_SEC,

dev_mode=False

)

Q = Q_1HZ * np.power(spectrum.frequencies, N)

spectrum.set_Q_model(Q, spectrum.frequencies)

spectrum.compute_correction_factor(

RHO_SOURCE_KGM3,

RHO_RECEIVER_KGM3,

VP_SOURCE_MS,

VP_RECEIVER_MS,

VS_SOURCE_MS,

VS_RECEIVER_MS,

)

# -----------------------------------------------------

# measurement for local magnitude

# -----------------------------------------------------

spectrum.compute_signal_to_noise_ratio(PHASE_FOR_MAG)

if len(spectrum.s_spectrum) == 0:

print(f"Could not compute a single spectrum!")

source_parameters["Me"] = np.nan

source_parameters["Mw"] = np.nan

source_parameters["Mw_err"] = np.nan

source_parameters["Ml"] = np.nan

print(f"The P-S averaged moment magnitude {np.nan} +/- {np.nan}")

# save all this new information in BPMF.dataset.Event.aux_data

event.set_aux_data(source_parameters)

continue

# store multi-band, geometrical-spreading-corrected displacement

# spectra in a pandas.DataFrame

# 1) fetch raw displacement spectra

s_spectra = pd.DataFrame(

columns=list(spectrum.s_spectrum.keys()),

#index=list(FREQUENCY_BANDS.keys()),

index=CENTER_FREQUENCIES,

data=np.stack(

[spectrum.s_spectrum[trid]["spectrum"] for trid in spectrum.s_spectrum],

axis=1,

),

)

# 2) apply geometrical spreading correction

for trid in s_spectra.columns:

station = trid.split(".")[1]

s_spectra.loc[:, trid] *= spectrum.correction_factor.loc[

station, "correction_S"

]

# 3) apply attenuation correction?

for trid in s_spectra.columns:

station = trid.split(".")[1]

s_spectra.loc[:, trid] *= spectrum.attenuation_factor.loc[

station, "attenuation_S"

]

# store snr in a pandas.DataFrame

snr_spectra = pd.DataFrame(

columns=list(spectrum.snr_s_spectrum.keys()),

#index=list(FREQUENCY_BANDS.keys()),

index=CENTER_FREQUENCIES,

data=np.stack(

[spectrum.snr_s_spectrum[trid]["snr"] for trid in spectrum.snr_s_spectrum],

axis=1,

),

)

geo_corrected_peaks = pd.Series(index=list(s_spectra.columns), dtype=np.float32)

num_cha = len(s_spectra.columns)

#weights = np.zeros(num_cha, dtype=np.float32)

_snr = np.zeros(num_cha, dtype=np.float32)

#try:

for j, idx in enumerate(geo_corrected_peaks.index):

station = idx.split(".")[1]

multi_band_peaks = s_spectra.loc[:, idx]

multi_band_snr = snr_spectra.loc[:, idx]

valid_bands = multi_band_snr.loc[

multi_band_snr > SNR_THRESHOLD

].index

if len(valid_bands) > 0:

# peak amplitude is taken from the lowest-frequency,

# valid frequency band (reflect physical seismic moment)

valid_bands = np.sort(valid_bands)

selected_bands = valid_bands[

:min(len(valid_bands), NUM_AVERAGING_BANDS)

]

if len(selected_bands) > 1:

geo_corrected_peaks.loc[idx] = np.median(

multi_band_peaks.loc[selected_bands].values

)

else:

geo_corrected_peaks.loc[idx] = multi_band_peaks.loc[

selected_bands

].values[0]

_snr[j] = SNR_THRESHOLD

else:

# peak amplitude is taken from the highest snr frequency

# band (implies error on magnitude estimation)

high_freq = multi_band_snr.index > LOW_SNR_FREQ_MIN_HZ

freq_idx = multi_band_snr[high_freq].index[

multi_band_snr[high_freq].argmax()

]

w_ = multi_band_snr.loc[high_freq]

p_ = multi_band_peaks.loc[high_freq]

sum_ = w_.sum()

sum_ = 1. if sum_ == 0. else sum_

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

_snr[j] = (w_ * multi_band_snr.loc[high_freq]).sum() / sum_

_snr[geo_corrected_peaks == 0.] = 0.

weights = snr_based_weights(_snr, SNR_THRESHOLD)

weighted_log_peaks = np.zeros(len(weights), dtype=np.float64)

weighted_log_peaks[weights > 0.] = (

np.log10(geo_corrected_peaks[weights > 0.]) * weights[weights > 0.]

)

estimated_log10_M0 = weighted_log_peaks.sum() / weights.sum()

Mw_approx = (

A_MOMENT_MAGNITUDE_SCALING * estimated_log10_M0

+ B_MOMENT_MAGNITUDE_SCALING

)

source_parameters["Mw*"] = Mw_approx

spectrum.set_target_frequencies(

CENTER_FREQUENCIES[0],

CENTER_FREQUENCIES[-1],

NUM_FREQS

)

spectrum.resample(spectrum.frequencies, spectrum.phases)

spectrum.compute_signal_to_noise_ratio(PHASE_FOR_MAG)

spectrum.compute_network_average_spectrum(

PHASE_FOR_MAG,

SNR_THRESHOLD,

min_num_valid_channels_per_freq_bin=MIN_NUM_VALID_CHANNELS_PER_FREQ_BIN,

max_relative_distance_err_pct=MAX_RELATIVE_DISTANCE_ERR_PCT,

)

if PHASE_FOR_MAG in spectrum.average_spectra:

spectrum.fit_average_spectrum(

PHASE_FOR_MAG,

model=SPECTRAL_MODEL,

min_fraction_valid_points_below_fc=MIN_FRACTION_VALID_POINTS_BELOW_FC,

weighted=NUM_CHANNEL_WEIGHTED_FIT,

)

if hasattr(spectrum, "inversion_success") and spectrum.inversion_success:

rel_M0_err = 100.*spectrum.M0_err/spectrum.M0

rel_fc_err = 100.*spectrum.fc_err/spectrum.fc

if not (rel_M0_err > 10. or spectrum.fc < 0.):

figtitle = (f"{event.origin_time.strftime('%Y-%m-%dT%H:%M:%S')}: "

f"{event.latitude:.3f}""\u00b0"

f"N, {event.longitude:.3f}""\u00b0"

f"E, {event.depth:.1f}km, "

r"$\Delta M_0 / M_0=$"f"{rel_M0_err:.1f}%, "

r"$\Delta f_c / f_c=$"f"{rel_fc_err:.1f}%")

source_parameters[f"M0_{PHASE_FOR_MAG}"] = spectrum.M0

source_parameters[f"Mw_{PHASE_FOR_MAG}"] = spectrum.Mw

source_parameters[f"fc_{PHASE_FOR_MAG}"] = spectrum.fc

source_parameters[f"M0_err_{PHASE_FOR_MAG}"] = spectrum.M0_err

source_parameters[f"fc_err_{PHASE_FOR_MAG}"] = spectrum.fc_err

fig = spectrum.plot_average_spectrum(

PHASE_FOR_MAG,

plot_fit=True,

figname=f"{PHASE_FOR_MAG}_spectrum_{event.id}",

figtitle=figtitle,

figsize=(8, 8),

plot_std=True,

plot_num_valid_channels=True,

)

Mw_exists = False

norm = 0.

Mw = 0.

Mw_err = 0.

for ph in ["p", "s"]:

if f"Mw_{ph}" in source_parameters:

Mw += source_parameters[f"Mw_{ph}"]

Mw_err += 2./3. * source_parameters[f"M0_err_{ph}"]/source_parameters[f"M0_{ph}"]

norm += 1

Mw_exists = True

if Mw_exists:

Mw /= norm

Mw_err /= norm

source_parameters["Mw"] = Mw

source_parameters["Mw_err"] = Mw_err

else:

Mw = np.nan

Mw_err = np.nan

print(

f"The P-S averaged moment magnitude is {Mw:.2f} +/- {Mw_err:.2f} "

f"and the approximate moment magnitude is {Mw_approx:.2f}"

)

# save all this new information in BPMF.dataset.Event.aux_data

event.set_aux_data(source_parameters)

========================

Processing event 0

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '772102363365.0879' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Not enough valid points! (Only 35.00%)

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.60

========================

Processing event 1

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '532003897767.10736' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.26

========================

Processing event 2

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '507970486098.99194' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.27

========================

Processing event 3

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '470299061311.84174' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Not enough valid points! (Only 30.00%)

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.63

========================

Processing event 4

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '883987226585.5594' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.42

========================

Processing event 5

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '734711564057.551' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.11

========================

Processing event 6

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '450200394033.2132' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

The P-S averaged moment magnitude is 1.88 +/- 0.03 and the approximate moment magnitude is 1.66

========================

Processing event 7

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '87810684912.02907' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.25

========================

Processing event 8

/tmp/ipykernel_2939483/2646755398.py:145: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '2122986642993.463' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = np.median(

The P-S averaged moment magnitude is 2.29 +/- 0.03 and the approximate moment magnitude is 2.19

========================

Processing event 9

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '228627489345.9734' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.39

========================

Processing event 10

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '2758345527945.0947' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

The P-S averaged moment magnitude is 2.27 +/- 0.02 and the approximate moment magnitude is 2.15

========================

Processing event 11

/tmp/ipykernel_2939483/2646755398.py:164: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '450602480096.8624' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.

geo_corrected_peaks.loc[idx] = (w_ * p_).sum() / sum_

Spectrum is below SNR threshold everywhere, cannot fit it.

The P-S averaged moment magnitude is nan +/- nan and the approximate moment magnitude is 1.18

========================

Processing event 12

/tmp/ipykernel_2939483/2646755398.py:145: FutureWarning: Setting an item of incompatible dtype is deprecated and will raise an error in a future version of pandas. Value '2628277051024.041' has dtype incompatible with float32, please explicitly cast to a compatible dtype first.